Flexible MFVI using mixtures of non-overlapping exponential families ( NeurIPS 2020 )

Abstract

Sparse model : perform…

- 1) automatic variable selection

- 2) aid interpretability

- 3) provide regularization

analytically obtaining a posterior distribution over the parameters of interest is intractable

MFVI (Mean Field Variational Inference)

- simple and popular framework

- often amenable to “analytically” deriving closed-form

- but….fail to produce sensible results for models with sparsity-inducing priors (ex. spike-and-slab)

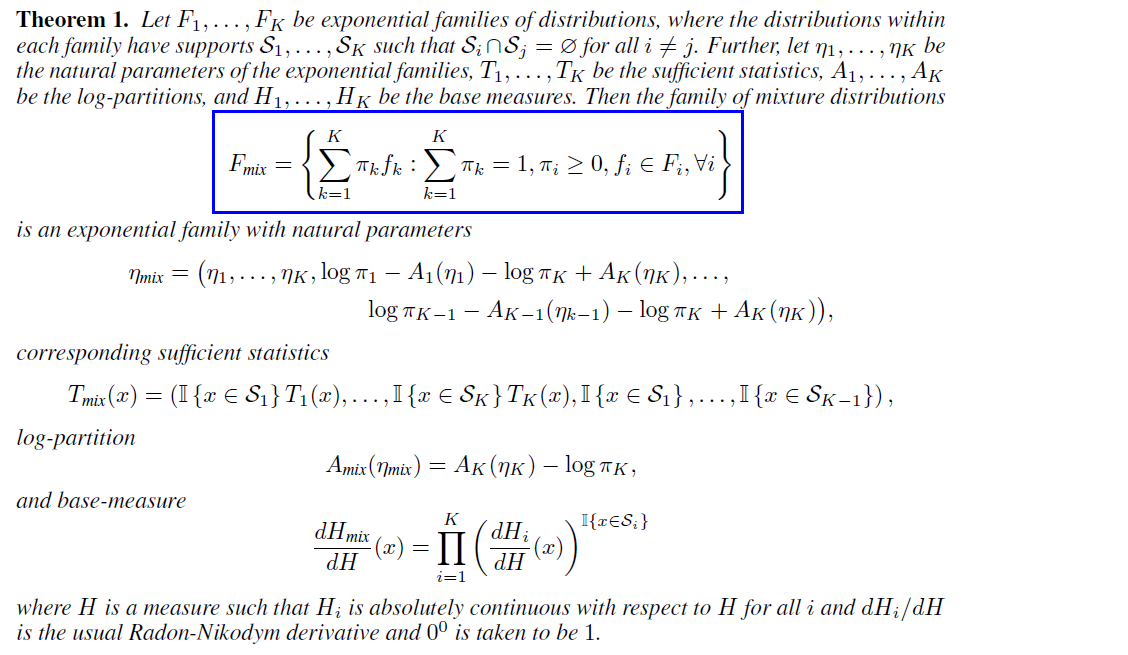

Mixture of exponential family distns with non-overlapping support form an exponential family

1. Introduction

Variational Inference

-

avoids sampling, rather fits an approximate posterior

- usually, use reverse KL-div (\(KL(Q \mid \mid P)\) )

- simple variational families

- (pros) more computationally tractable

- (cons) large approximation error

- MFVI : computationally efficient

Many approaches have been developed

- coupled hidden Markov models

- more expressive variational famililes

- an approach called, structured VI

- black-box VI

- seeks to automatically derive gradient estimators

- combining DL with Bayesian graphical models

2. More flexible exponential families

mixtures of distributions from an exponential family no longer form an exponential family.

BUT, not in the case of distinct support!

But, the above are of little use in MFVI, unless they are conjugate to widely used likelihood models.

\(\rightarrow\) how to create a model with “(1) more flexible” **prior, while maintaining **“(2) conjugacy”

Meaning?

-

[ Interpretation 1 ]

given conjugate prior ( say \(P\) ), we can create a more flexible prior that maintains conjugacy, by combining non-overlapping component distn

-

[ Interpretation 2 ]

if each component exponential family is conjugate to a distn & distns in different component families have non-overlapping support, then mixture distn form a conjugate exponential family