Meta-Transformer: A Unified Framework for Multimodal Learning

Zhang, Yiyuan, et al. "Meta-transformer: A unified framework for multimodal learning." arXiv preprint arXiv:2307.10802 (2023).

References:

- https://aipapersacademy.com/meta-transformer/

- https://arxiv.org/pdf/2307.10802

Contents

- Introduction

- Architecture

- Unified Multimodal Transformer Pretraining

- Experiments

1. Introduction

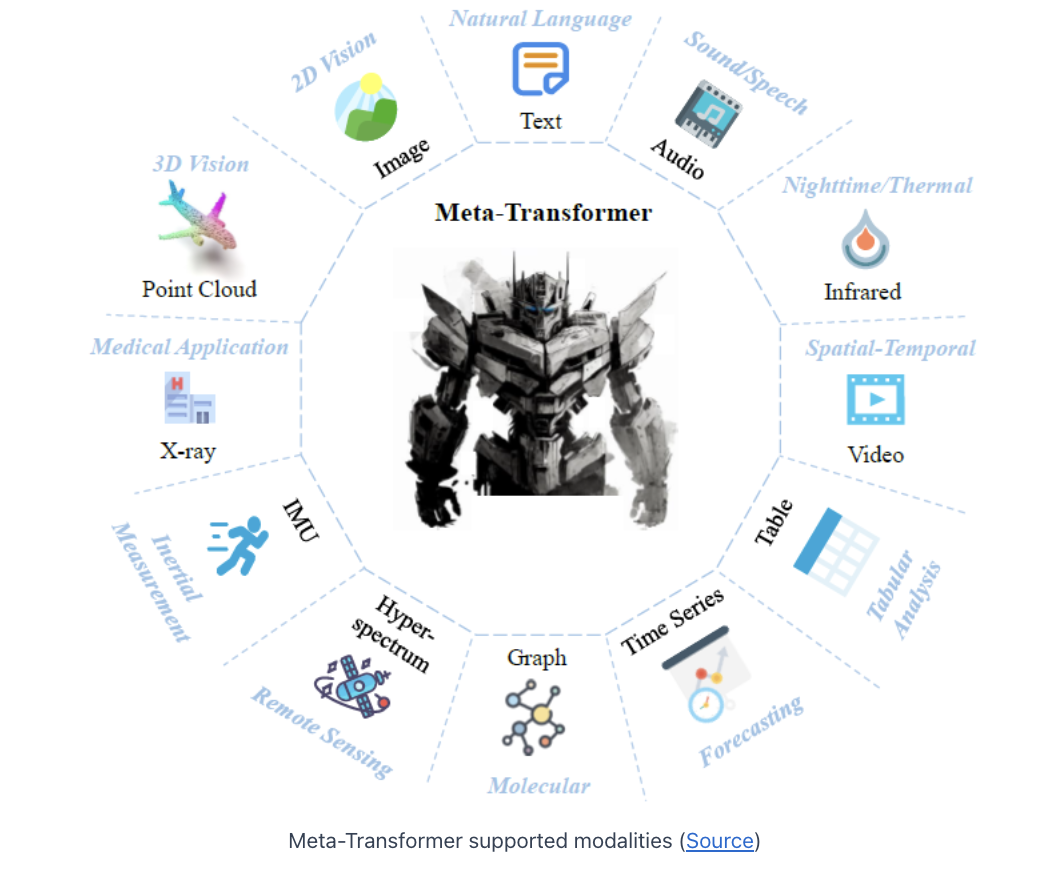

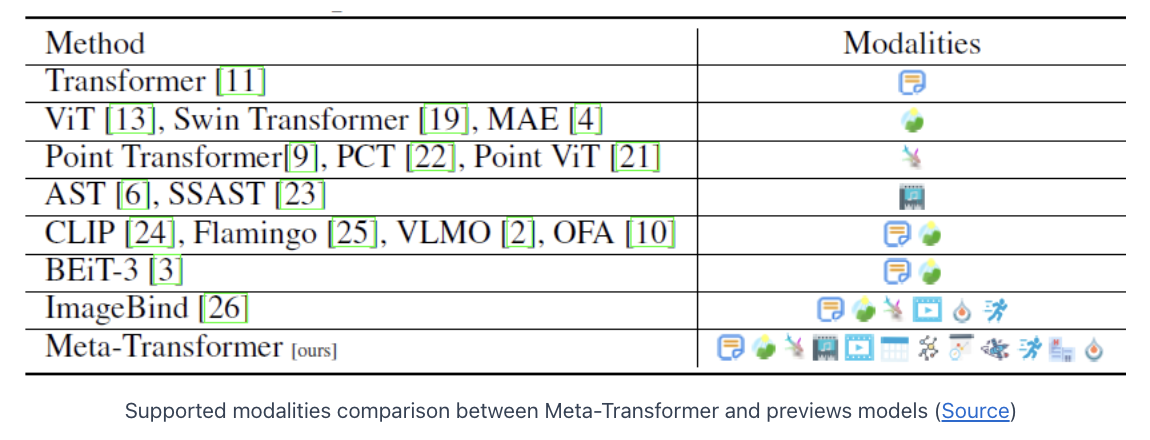

Meta-Transformer

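- A unified framework for multimodal learning

- Processes information from 12 different modalities (text, images, point clouds, audio, video, infrared, hyperspectral, X-ray, IMU, tabular, graph, and time-series data) with a single model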

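Challenge: each data modality is structured differently (e.g., images are 2D pixel grids, text is a sequence of words, point clouds are unordered sets of 3D points), so one network cannot consume them all directly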

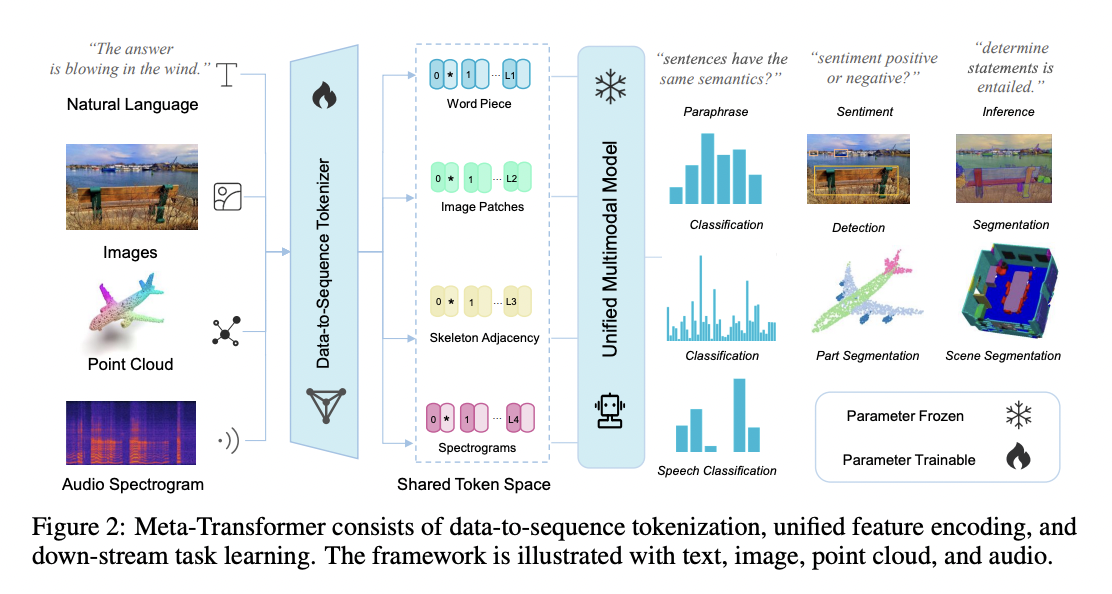

2. Architecture

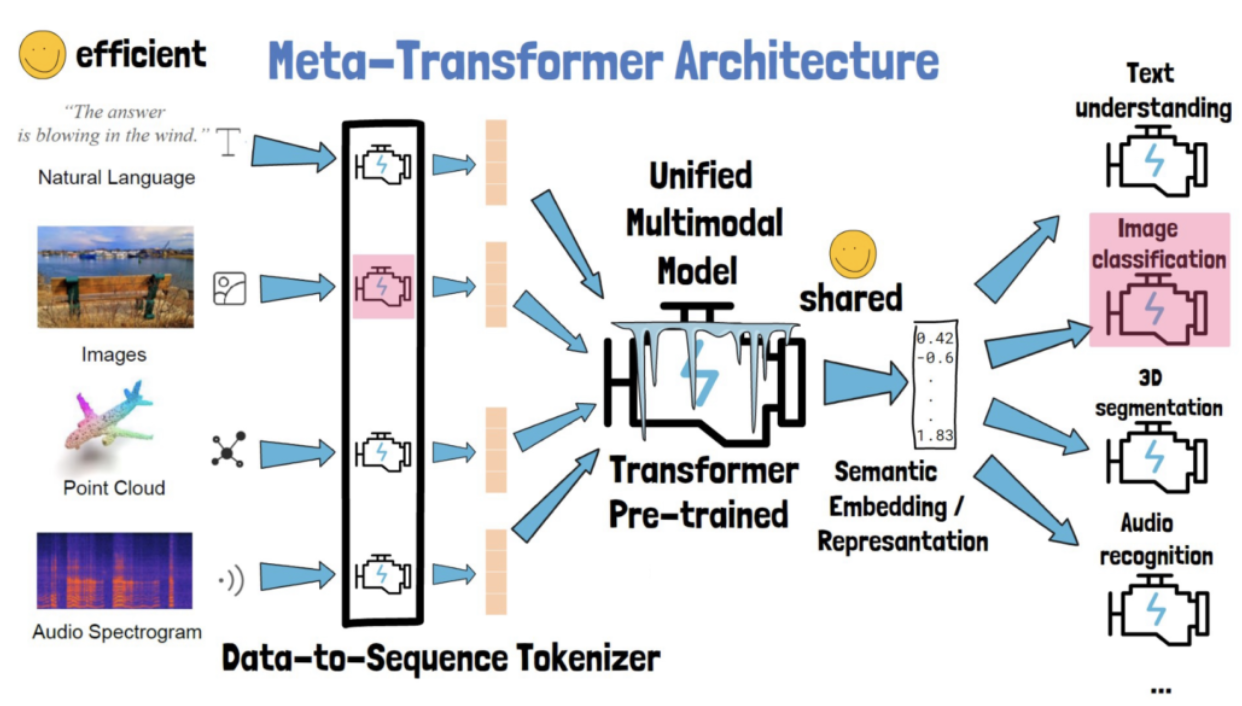

Goal: produce an embedding for any modality

- A single modality-shared encoder, kept frozen, processes inputs from all modalities
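The key property of the shared encoder is that it only sees a sequence of fixed-size token vectors, so it does not care which modality produced them. A minimal sketch of that idea, assuming a toy dimension `DIM = 4` and a parameter-free single-head self-attention layer with mean pooling (the real encoder is a large pretrained transformer; `shared_encoder` and the random token sequences are hypothetical):

```python
import math
import random

# Hypothetical toy token dimension (assumption, not the paper's value).
DIM = 4


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention without learned projections (Q = K = V = tokens)."""
    scale = 1.0 / math.sqrt(len(tokens[0]))
    out = []
    for q in tokens:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, tokens)) for d in range(len(q))])
    return out


def shared_encoder(tokens):
    """Hypothetical modality-agnostic encoder: attention + residual, then mean pooling."""
    attended = self_attention(tokens)
    hidden = [[t + a for t, a in zip(tok, att)] for tok, att in zip(tokens, attended)]
    return [sum(tok[d] for tok in hidden) / len(hidden) for d in range(len(hidden[0]))]


# Usage: token sequences of different lengths (standing in for two modalities)
# are mapped to embeddings of the same size.
random.seed(0)
image_tokens = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(9)]
text_tokens = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(5)]
print(len(shared_encoder(image_tokens)), len(shared_encoder(text_tokens)))  # 4 4
```

Because pooling collapses the sequence dimension, the output size depends only on `DIM`, not on how many tokens a modality produces.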

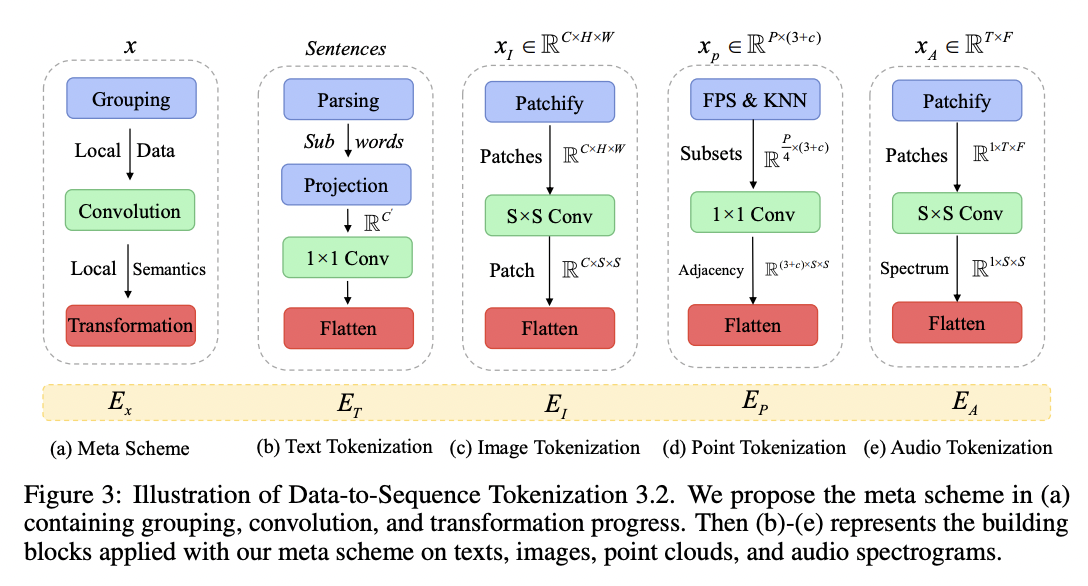

How can the transformer process information from different types of data?

\(\rightarrow\) Data-to-sequence tokenizer

- Consists of small, modality-specific tokenizers that map raw data into token sequences in a shared embedding space
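To make "data-to-sequence" concrete, here is a minimal sketch of two hypothetical tokenizers that turn differently structured inputs into tokens of the same width. It assumes a ViT-style patch split with a linear projection for a grayscale image and a word-level embedding lookup for text; `DIM`, `PATCH`, `VOCAB`, and the randomly initialised weights are illustrative assumptions, not the paper's tokenizers.

```python
import random

DIM = 4        # hypothetical shared token dimension
PATCH = 2      # hypothetical patch size for images
VOCAB = ["<unk>", "a", "cat", "on", "mat"]  # hypothetical tiny vocabulary

random.seed(0)
# Hypothetical randomly initialised weights; in practice these would be learned.
PATCH_PROJ = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(PATCH * PATCH)]
TEXT_EMB = {w: [random.gauss(0, 0.1) for _ in range(DIM)] for w in VOCAB}


def image_tokenizer(image):
    """Split a 2D grayscale image (list of rows) into PATCH x PATCH patches and project each to DIM."""
    h, w = len(image), len(image[0])
    tokens = []
    # Leftover rows/columns that do not fill a whole patch are dropped.
    for r in range(0, h - h % PATCH, PATCH):
        for c in range(0, w - w % PATCH, PATCH):
            patch = [image[r + i][c + j] for i in range(PATCH) for j in range(PATCH)]
            tokens.append([sum(p * PATCH_PROJ[k][d] for k, p in enumerate(patch)) for d in range(DIM)])
    return tokens


def text_tokenizer(sentence):
    """Split on whitespace and look up one embedding per word (unknown words map to <unk>)."""
    return [TEXT_EMB.get(word, TEXT_EMB["<unk>"]) for word in sentence.lower().split()]


# Usage: a 4x4 image gives 4 patch tokens, a 5-word sentence gives 5 word tokens.
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
img_tokens = image_tokenizer(image)
txt_tokens = text_tokenizer("a cat on a mat")
print(len(img_tokens), len(txt_tokens))  # 4 5
print(len(img_tokens[0]) == len(txt_tokens[0]) == DIM)  # True
```

The sequence lengths differ per modality, but every token has width `DIM`, which is what lets one shared encoder consume both.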

Task-specific head

- Maps the obtained representation to the output of each downstream task (e.g., class scores for classification)
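A task-specific head can be as simple as a linear layer on top of the encoder's embedding. A minimal sketch, assuming a hypothetical 3-class classification task and hand-picked weights (`linear_head`, `predict`, and the labels are illustrative, not from the paper):

```python
import math


def linear_head(embedding, weights, bias):
    """Map an embedding to class logits: logits[c] = sum_d weights[c][d] * embedding[d] + bias[c]."""
    return [sum(w * x for w, x in zip(row, embedding)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax turning logits into probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def predict(embedding, weights, bias, labels):
    """Return the most probable label and the full probability vector."""
    probs = softmax(linear_head(embedding, weights, bias))
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


# Usage with hypothetical classes and weights for a 4-dimensional embedding.
labels = ["cat", "dog", "car"]
weights = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
bias = [0.0, 0.0, 0.0]
label, probs = predict([0.2, 0.9, -0.3, 0.5], weights, bias, labels)
print(label)  # dog
```

Swapping the head (e.g., a different output size or a per-token head for segmentation) is how one shared representation can serve different tasks.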

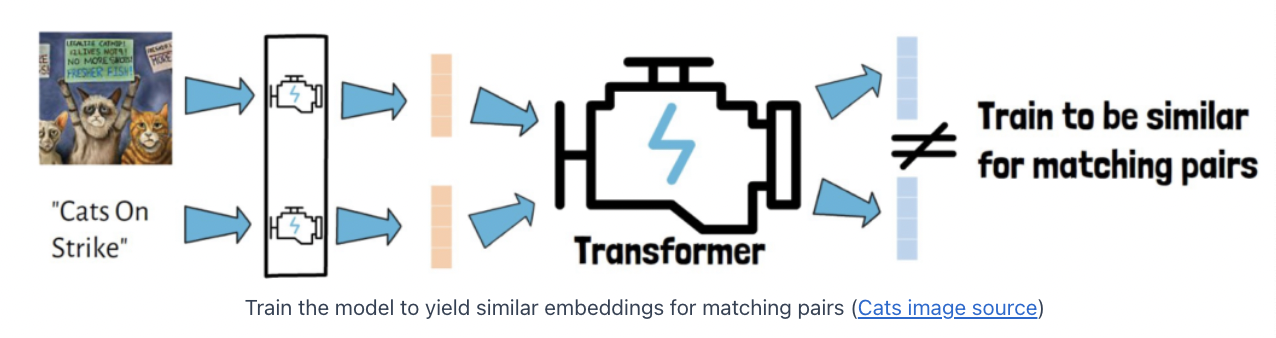

3. Unified Multimodal Transformer Pretraining

(The paper does not share much detail about the pretraining process.)

Dataset: LAION-2B

- A dataset of (text, image) pairs

Task: contrastive learning on the (text, image) pairs
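Since the paper gives few details, the following is only a minimal sketch of a CLIP-style symmetric contrastive (InfoNCE) loss, assuming the pretraining objective resembles CLIP's: each image should be most similar to its own caption and vice versa. The temperature of `0.07` and all function names are assumptions for illustration.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: pair i is the positive for row/column i, all other pairs are negatives."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # sims[i][j] = cosine(image i, text j) / temperature
    sims = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]
    loss_i2t = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    loss_t2i = sum(cross_entropy([sims[r][j] for r in range(n)], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Usage: correctly paired embeddings give a lower loss than swapped pairs.
images = [[1.0, 0.0], [0.0, 1.0]]
texts_matched = [[0.9, 0.1], [0.1, 0.9]]
texts_swapped = [[0.1, 0.9], [0.9, 0.1]]
matched = clip_style_loss(images, texts_matched)
swapped = clip_style_loss(images, texts_swapped)
print(matched < swapped)  # True
```

Minimizing this loss pulls matching (text, image) embeddings together and pushes non-matching pairs apart, which is the general behaviour contrastive pretraining aims for.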

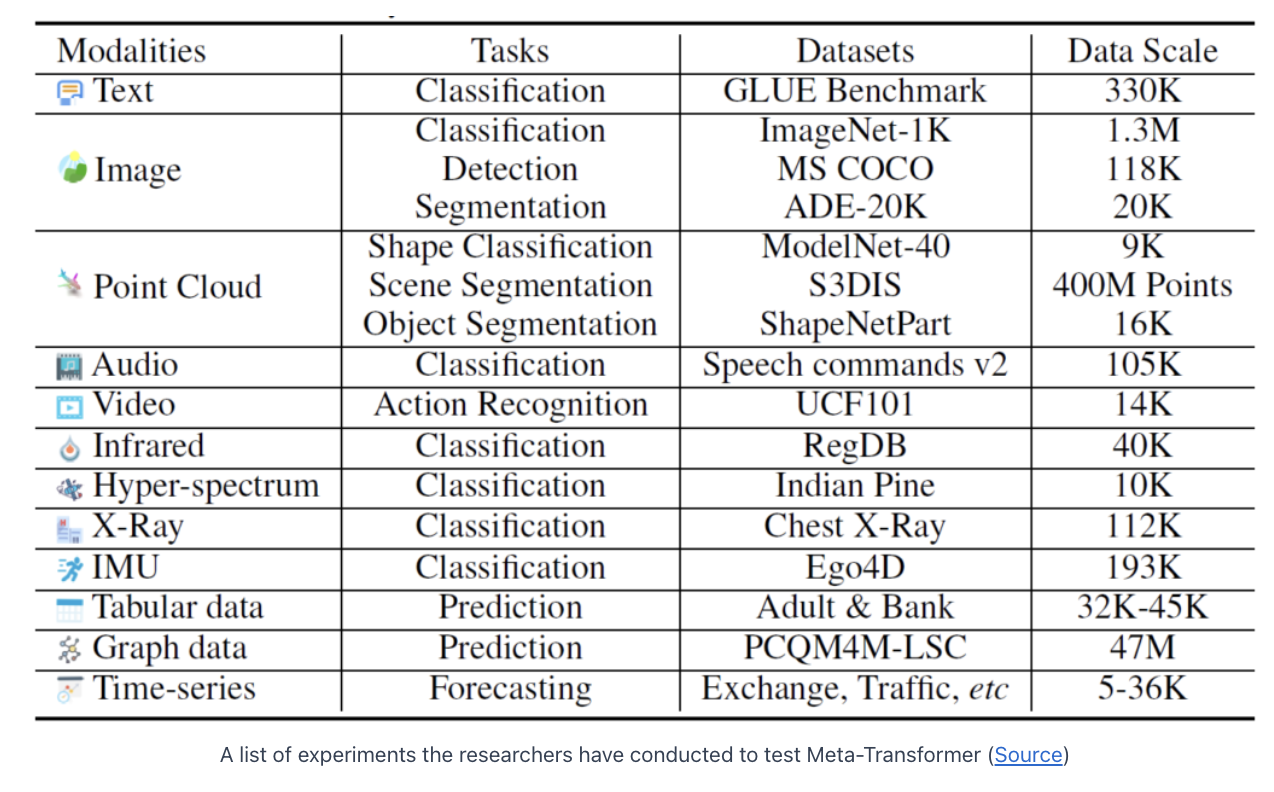

4. Experiments

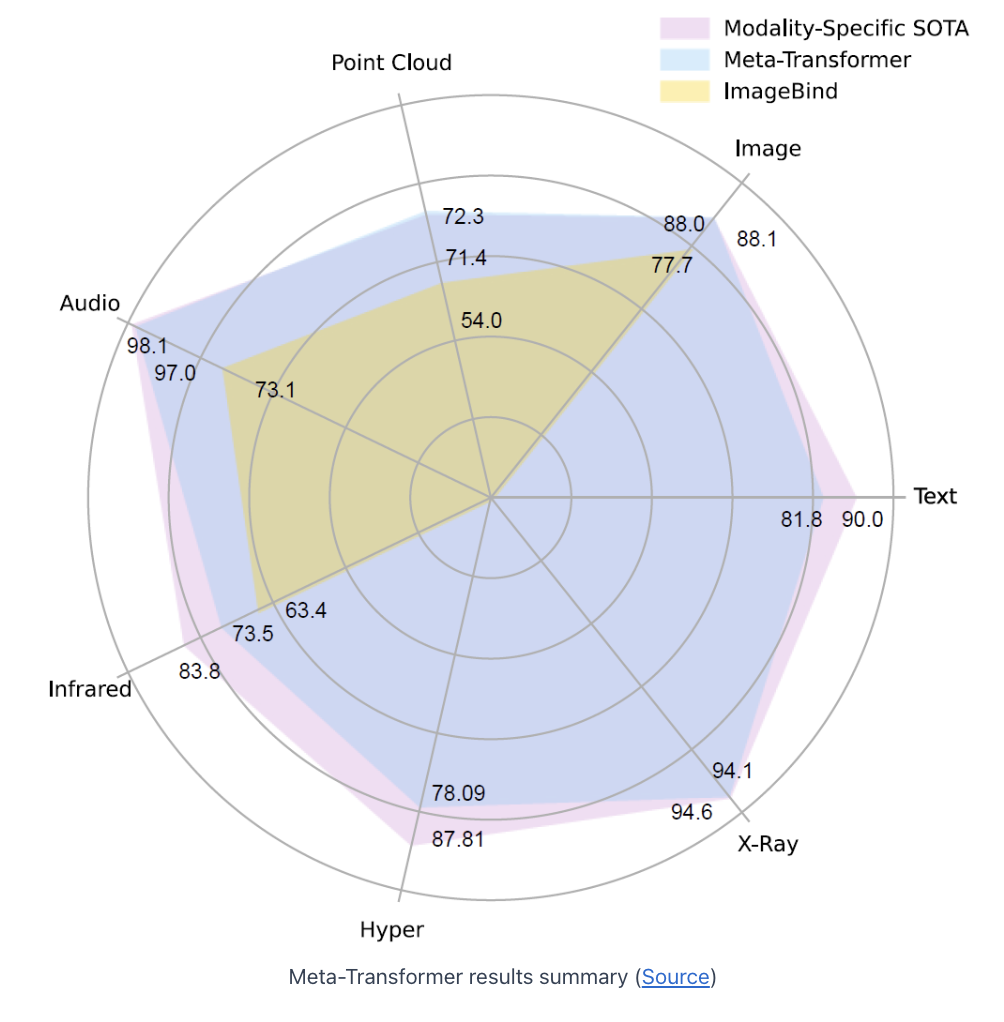

(1) Overall Performance

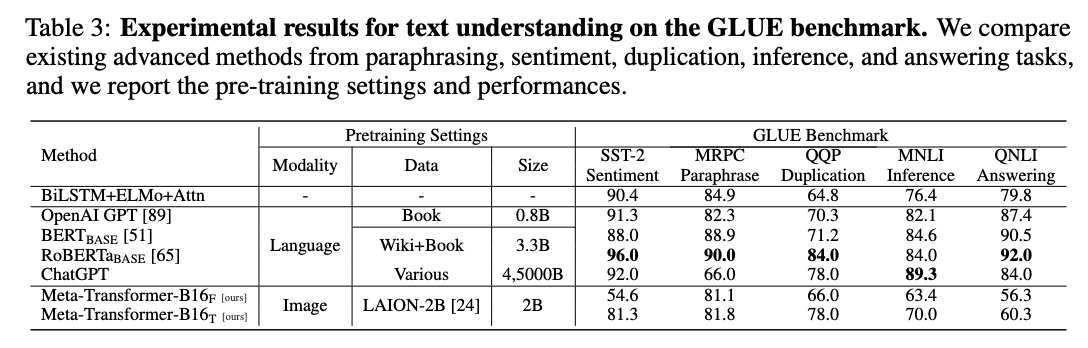

(2) Text

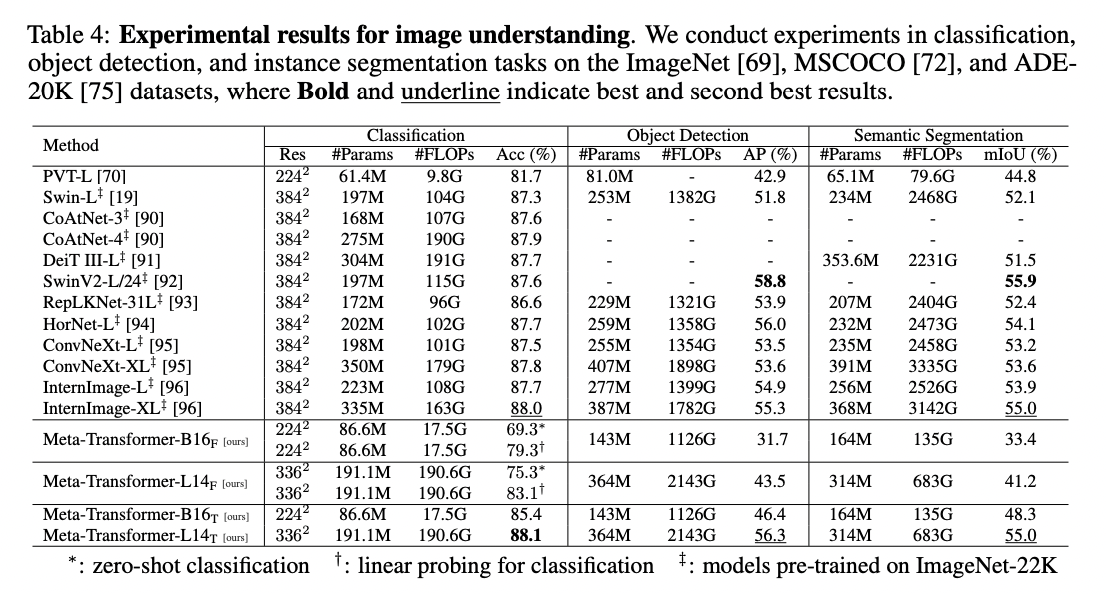

(3) Image