Transfer Learning for Time Series Classification

Contents

- Abstract

- Method

- Architecture

- Network Adaptation

- Inter-dataset similarity

0. Abstract

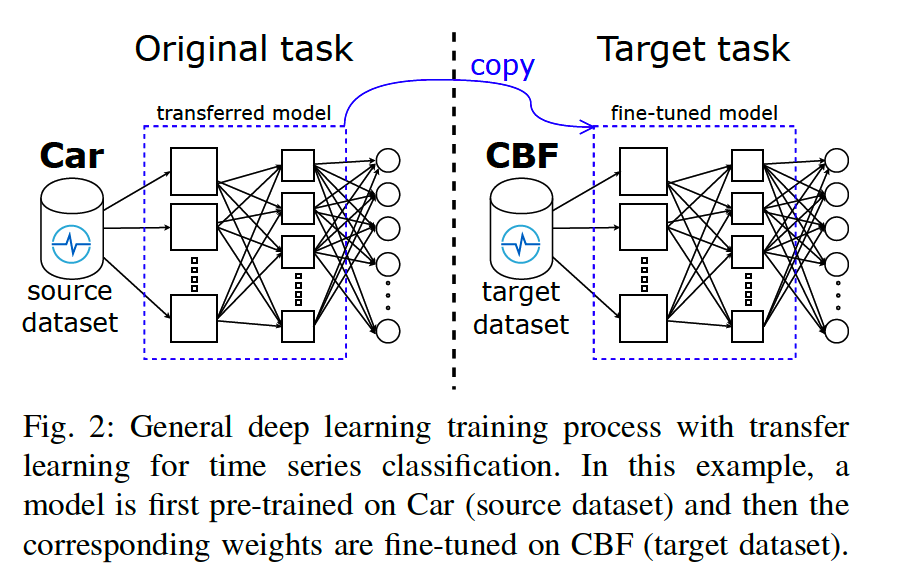

How to transfer deep CNNs for the time series classification (TSC) task

- Pre-train a model on one dataset & fine-tune it on the other datasets

- total 85 datasets

1. Method

Notation

- TS : \(X=\left[x_1, x_2, \ldots x_T\right]\)

- dataset : \(D=\left\{\left(X_1, Y_1\right), \ldots,\left(X_N, Y_N\right)\right\}\)

Components

- the adopted NN architecture

- how the network is adapted for the transfer learning (TL) process

- a DTW-based method to compute inter-dataset similarities

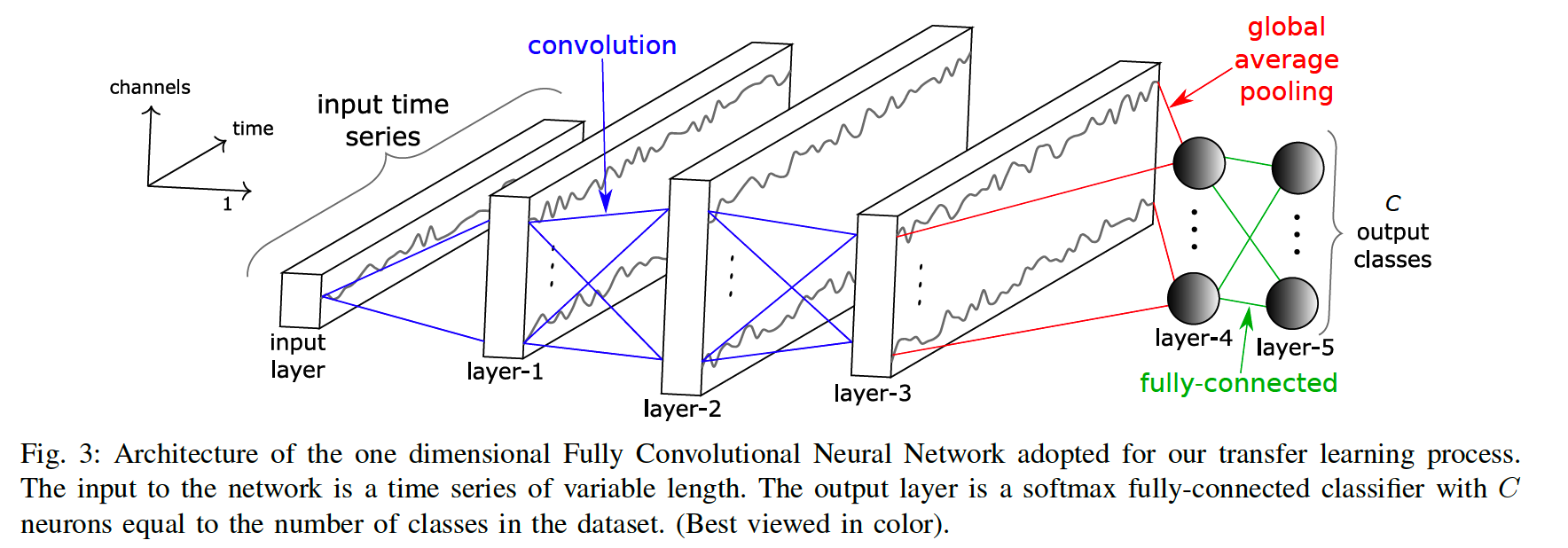

(1) Architecture

- 1d FCN (Fully Convolutional NN)

- model input & output

- input : TS of variable length

- output : probability distn over \(C\) classes
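The input/output contract above can be illustrated with a minimal forward pass in plain Python. This is a sketch only: it assumes a single convolution layer followed by the global average pooling (GAP) layer mentioned later in these notes, and the filter count, kernel width, random weights, and all function names are hypothetical choices for illustration, not the authors' configuration.

```python
import math
import random

def conv1d_relu(series, kernels):
    """Valid 1D convolution followed by ReLU; returns one feature map per kernel."""
    maps = []
    for kernel in kernels:
        width = len(kernel)
        fmap = []
        for t in range(len(series) - width + 1):
            s = sum(kernel[j] * series[t + j] for j in range(width))
            fmap.append(max(0.0, s))
        maps.append(fmap)
    return maps

def global_average_pool(maps):
    """Average each feature map over time, giving one value per filter."""
    return [sum(m) / len(m) for m in maps]

def softmax(logits):
    """Numerically stable softmax."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fcn_forward(series, params):
    """conv -> ReLU -> GAP -> linear -> softmax."""
    pooled = global_average_pool(conv1d_relu(series, params["kernels"]))
    logits = [sum(w * f for w, f in zip(row, pooled)) + b
              for row, b in zip(params["W"], params["b"])]
    return softmax(logits)

def init_params(n_filters, kernel_width, n_classes, seed=0):
    """Random weights standing in for trained ones (illustration only)."""
    rng = random.Random(seed)
    return {
        "kernels": [[rng.uniform(-1, 1) for _ in range(kernel_width)]
                    for _ in range(n_filters)],
        "W": [[rng.uniform(-1, 1) for _ in range(n_filters)]
              for _ in range(n_classes)],
        "b": [0.0] * n_classes,
    }

params = init_params(n_filters=4, kernel_width=3, n_classes=3)
short_ts = [math.sin(0.3 * t) for t in range(20)]  # T = 20
long_ts = [math.sin(0.3 * t) for t in range(75)]   # T = 75
p_short = fcn_forward(short_ts, params)
p_long = fcn_forward(long_ts, params)
```

Both calls use the same parameters and return a distribution over \(C = 3\) classes, even though the two series have different lengths.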

(2) Network Adaptation

Procedure

- step 1) train the NN on the source dataset \(D_S\)

- step 2) remove the last layer

- step 3) add a new softmax layer

- step 4) retrain (fine-tune) using the target dataset \(D_T\)
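Steps 2 and 3 of the procedure can be sketched as a simple parameter swap. The parameter dictionary layout, the function name `swap_classifier`, and the initialization range are assumptions made for illustration. Training itself (steps 1 and 4) is not shown.

```python
import copy
import random

def swap_classifier(source_params, n_target_classes, seed=0):
    """Drop the source softmax layer and attach a freshly initialized one.

    `source_params` is a hypothetical dict holding pre-trained convolution
    "kernels" plus the final layer "W" (classes x features) and "b".
    """
    rng = random.Random(seed)
    n_features = len(source_params["W"][0])
    target_params = copy.deepcopy(source_params)  # keep the pre-trained kernels
    target_params["W"] = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
                          for _ in range(n_target_classes)]
    target_params["b"] = [0.0] * n_target_classes
    return target_params

# Stand-in for a network already trained on D_S (step 1), with 2 source classes.
source_params = {
    "kernels": [[0.5, -0.2, 0.1], [0.3, 0.3, -0.4]],
    "W": [[1.0, -1.0], [-1.0, 1.0]],
    "b": [0.1, -0.1],
}

# Target dataset D_T is assumed to have 5 classes.
target_params = swap_classifier(source_params, n_target_classes=5)
# Step 4 would now continue training target_params on D_T.
```

Only the final layer changes shape, so the number of classes in \(D_T\) may differ from the number in \(D_S\).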

Advantages of using a GAP layer

- no need to re-scale the input TS when transferring models between TS of different lengths
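One way to see this, assuming GAP is applied to the output of the last convolutional layer (the symbols \(h_{k,t}\), \(T'\) and \(K\) are introduced here for illustration and do not come from the notes above):

\[
z_k = \frac{1}{T'} \sum_{t=1}^{T'} h_{k,t}, \qquad k = 1, \ldots, K
\]

Here \(h_{k,t}\) is the activation of filter \(k\) at time step \(t\) and \(T'\) is the length of the feature map. The pooled vector \(z\) always has \(K\) entries whatever \(T'\) is, so the weights of the softmax layer do not depend on the series length.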

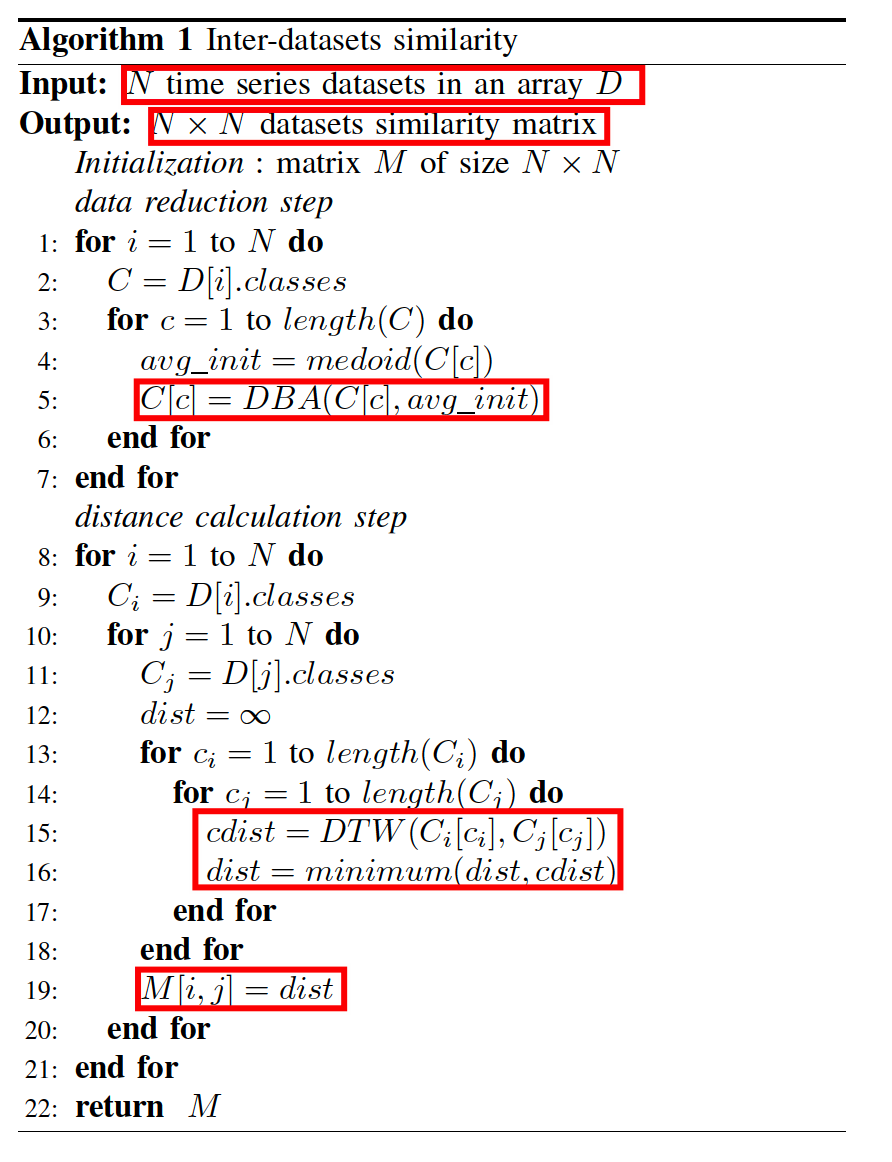

(3) Inter-dataset similarity

challenge : which dataset to choose as the source?

( 85 datasets in total: for 1 target domain, there are 84 possible source domains )

\(\rightarrow\) propose to use DTW distance to compute similarities between datasets

Step 1) reduce the number of TS for each dataset to 1 TS per class ( = prototype )

- computed by averaging the set of TS belonging to a given class

- use DTW Barycenter Averaging (DBA) method
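Step 1 can be sketched with a compact version of DBA in plain Python. This is an illustrative simplification, not the reference implementation: it assumes univariate series, a squared pointwise cost, initialization from the first series of the class, and a fixed number of refinement iterations. The function names are hypothetical.

```python
def dtw_path(a, b):
    """Return (accumulated squared cost, alignment path) between series a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    # Backtrack from the end to recover which points were aligned.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((D[i - 1][j - 1], i - 1, j - 1),
                      (D[i - 1][j], i - 1, j),
                      (D[i][j - 1], i, j - 1))
    path.reverse()
    return D[n][m], path

def dba(series_set, n_iter=10):
    """Iteratively refine an average series under DTW alignment."""
    average = list(series_set[0])  # assumption: start from the first series
    for _ in range(n_iter):
        buckets = [[] for _ in average]
        for series in series_set:
            _, path = dtw_path(average, series)
            for i, j in path:
                buckets[i].append(series[j])
        # Each point of the average becomes the mean of the values aligned to it.
        average = [sum(b) / len(b) for b in buckets]
    return average

def class_prototypes(dataset):
    """Map each class label to one prototype; dataset is a list of (X, Y) pairs."""
    by_class = {}
    for x, y in dataset:
        by_class.setdefault(y, []).append(x)
    return {label: dba(members) for label, members in by_class.items()}

toy_dataset = [
    ([0.0, 0.0, 0.0, 0.0], "flat"),
    ([2.0, 2.0, 2.0, 2.0], "flat"),
    ([0.0, 1.0, 2.0, 1.0], "bump"),
    ([0.0, 1.0, 2.0, 1.0], "bump"),
]
prototypes = class_prototypes(toy_dataset)
```

The result holds one series per class, which is what Step 2 compares across datasets.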

Step 2) calculate distance

- distance between 2 datasets = minimum DTW distance between the class prototypes of the two datasets
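Step 2 can be sketched as follows, assuming each dataset has already been reduced to a list of class prototypes. The DTW variant (squared pointwise cost, square root of the accumulated cost, no warping window) and the helper names `dataset_distance` and `nearest_source` are illustrative assumptions.

```python
import math

def dtw_distance(a, b):
    """Plain DTW distance between two univariate series."""
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return math.sqrt(D[n][m])

def dataset_distance(protos_a, protos_b):
    """Minimum DTW distance over all pairs of prototypes from the two datasets."""
    return min(dtw_distance(p, q) for p in protos_a for q in protos_b)

def nearest_source(target_protos, sources):
    """Pick the candidate source dataset closest to the target.

    `sources` maps a dataset name to its list of prototypes.
    """
    return min(sources, key=lambda name: dataset_distance(target_protos, sources[name]))

# Toy prototypes; the dataset names are placeholders.
target = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
sources = {
    "source_b": [[1.0, 1.0, 1.0, 1.0], [5.0, 5.0, 5.0]],
    "source_c": [[3.0, 3.0, 3.0]],
}
best = nearest_source(target, sources)
d_b = dataset_distance(target, sources["source_b"])
d_c = dataset_distance(target, sources["source_c"])
```

In this toy example `source_b` is selected, because one of its prototypes aligns perfectly with a target prototype despite the different length.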