class ResidualUnit(tf.keras.Model):

def __init__(self, filter_in, filter_out, kernel_size):

super(ResidualUnit, self).__init__()

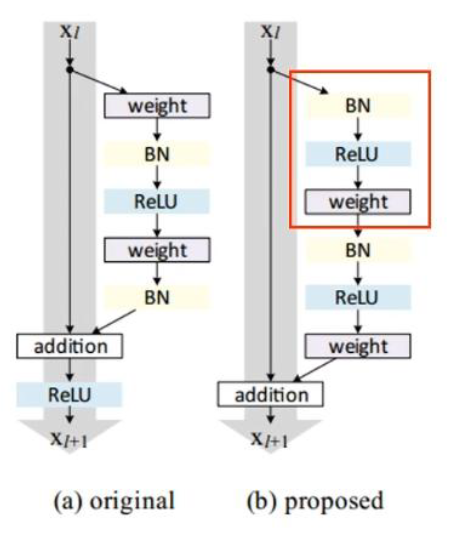

self.bn1 = tf.keras.layers.BatchNormalization() # 1) Batch Norm Layer

self.conv1 = tf.keras.layers.Conv2D(filter_out, kernel_size, padding='same') # 2) Convolutional Layer

self.bn2 = tf.keras.layers.BatchNormalization()

self.conv2 = tf.keras.layers.Conv2D(filter_out, kernel_size, padding='same')

# size가 다를 경우 대비해서!

if filter_in == filter_out:

self.identity = lambda x: x

else:

self.identity = tf.keras.layers.Conv2D(filter_out, (1,1), padding='same')

def call(self, x, training=False, mask=None):

h = self.bn1(x, training=training)

h = tf.nn.relu(h)

h = self.conv1(h)

h = self.bn2(h, training=training)

h = tf.nn.relu(h)

h = self.conv2(h)

return self.identity(x) + h
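
The `else` branch of `ResidualUnit.__init__` exists because the final addition is elementwise, so the shortcut and the convolution branch must carry the same number of channels. A 1x1 convolution acts on each pixel as a small matrix multiply over the channel axis. The sketch below illustrates this for a single pixel in plain Python; `pointwise_projection`, `residual_add`, and the weight values are hypothetical and are not part of the original code.

```python
def pointwise_projection(pixel, weights):
    """Apply a 1x1 convolution (no bias) to one pixel's channel vector.

    pixel:   list of C_in values
    weights: C_in x C_out matrix given as nested lists
    """
    c_out = len(weights[0])
    return [sum(pixel[i] * weights[i][j] for i in range(len(pixel)))
            for j in range(c_out)]


def residual_add(shortcut, branch):
    """Elementwise sum; only defined when both vectors have the same length."""
    if len(shortcut) != len(branch):
        raise ValueError("channel mismatch: %d vs %d" % (len(shortcut), len(branch)))
    return [s + b for s, b in zip(shortcut, branch)]


x = [1.0, 2.0]               # 2 input channels at one pixel
h = [0.5, 0.5, 0.5]          # 3 channels coming out of the convolution branch
w = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]        # hypothetical 2x3 projection weights

projected = pointwise_projection(x, w)   # [1.0, 2.0, 3.0]
print(residual_add(projected, h))        # [1.5, 2.5, 3.5]
```

Without the projection, `residual_add(x, h)` raises an error, which mirrors the shape mismatch the 1x1 `Conv2D` avoids.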

class ResnetLayer(tf.keras.Model):
    def __init__(self, filter_in, filters, kernel_size):
        super(ResnetLayer, self).__init__()
        self.sequence = list()  # This list holds the residual units of the layer
        # Pair each unit's input channels with its output channels,
        # e.g. zip([8, 16, 16], [16, 16]) -> (8, 16), (16, 16)
        for f_in, f_out in zip([filter_in] + list(filters), filters):
            self.sequence.append(ResidualUnit(f_in, f_out, kernel_size))

    def call(self, x, training=False, mask=None):
        for unit in self.sequence:
            x = unit(x, training=training)
        return x
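
The `zip` call in `ResnetLayer.__init__` pairs each unit's input channel count with its output channel count. A minimal pure-Python sketch of that pairing follows; `unit_channel_pairs` is a hypothetical helper name used only for illustration.

```python
def unit_channel_pairs(filter_in, filters):
    """Return (in_channels, out_channels) for each residual unit in a layer."""
    # zip stops at the shorter sequence, so the last entry of the left list is unused.
    return list(zip([filter_in] + list(filters), filters))


print(unit_channel_pairs(8, (16, 16)))   # [(8, 16), (16, 16)]
print(unit_channel_pairs(16, (32, 32)))  # [(16, 32), (32, 32)]
```

Only the first unit of each layer changes the channel count, so only that unit needs the 1x1 projection on its shortcut.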

class ResNet(tf.keras.Model):
    def __init__(self):
        super(ResNet, self).__init__()  # ex. input : 28*28*n
        self.conv1 = tf.keras.layers.Conv2D(8, (3, 3), padding='same', activation='relu')  # 28x28x8

        self.res1 = ResnetLayer(8, (16, 16), (3, 3))  # 28x28x16
        self.pool1 = tf.keras.layers.MaxPool2D((2, 2))  # 14x14x16

        self.res2 = ResnetLayer(16, (32, 32), (3, 3))  # 14x14x32
        self.pool2 = tf.keras.layers.MaxPool2D((2, 2))  # 7x7x32

        self.res3 = ResnetLayer(32, (64, 64), (3, 3))  # 7x7x64

        self.flatten = tf.keras.layers.Flatten()  # 3136
        self.dense1 = tf.keras.layers.Dense(128, activation='relu')  # 128
        self.dense2 = tf.keras.layers.Dense(10, activation='softmax')  # 10 (classify digits 0 ~ 9)

    def call(self, x, training=False, mask=None):
        x = self.conv1(x)

        x = self.res1(x, training=training)
        x = self.pool1(x)
        x = self.res2(x, training=training)
        x = self.pool2(x)
        x = self.res3(x, training=training)

        x = self.flatten(x)
        x = self.dense1(x)
        return self.dense2(x)
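
The shape comments in `ResNet.__init__` can be checked with simple arithmetic: `padding='same'` convolutions keep height and width, and each 2x2 max pooling halves them. The following sketch traces the shapes for a 28x28 input; `trace_shapes` is a hypothetical helper, and it mirrors the layer list above rather than inspecting a real model.

```python
def trace_shapes(height=28, width=28):
    """Follow (height, width, channels) through the layers listed in ResNet.__init__."""
    shapes = []
    h, w = height, width
    shapes.append(("conv1", (h, w, 8)))     # padding='same' keeps height and width
    shapes.append(("res1", (h, w, 16)))
    h, w = h // 2, w // 2                   # 2x2 max pooling halves each spatial side
    shapes.append(("pool1", (h, w, 16)))
    shapes.append(("res2", (h, w, 32)))
    h, w = h // 2, w // 2
    shapes.append(("pool2", (h, w, 32)))
    shapes.append(("res3", (h, w, 64)))
    shapes.append(("flatten", (h * w * 64,)))  # 7 * 7 * 64 = 3136
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```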

# Implement the training step
@tf.function
def train_step(model, images, labels, loss_object, optimizer, train_loss, train_accuracy):
    with tf.GradientTape() as tape:  # records operations for automatic differentiation
        predictions = model(images, training=True)  # (1) prediction result
        loss = loss_object(labels, predictions)  # (2) calculate loss
    gradients = tape.gradient(loss, model.trainable_variables)  # (3) compute gradients

    optimizer.apply_gradients(zip(gradients, model.trainable_variables))  # (4) update weights
    train_loss(loss)
    train_accuracy(labels, predictions)

# Implement the evaluation step
@tf.function
def test_step(model, images, labels, loss_object, test_loss, test_accuracy):
    predictions = model(images, training=False)  # BatchNorm uses its moving statistics here

    t_loss = loss_object(labels, predictions)
    test_loss(t_loss)
    test_accuracy(labels, predictions)

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # scale pixel values to [0, 1]

# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train[..., tf.newaxis].astype(np.float32)
x_test = x_test[..., tf.newaxis].astype(np.float32)

train_ds = tf.data.Dataset.from_tensor_slices((x_train, y_train)).shuffle(10000).batch(32)
test_ds = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)
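
With a batch size of 32, the number of `train_step` and `test_step` calls per epoch follows directly from the dataset sizes. A small sketch, assuming the standard MNIST split of 60,000 training and 10,000 test images; `batches_per_epoch` is a hypothetical helper name.

```python
import math


def batches_per_epoch(num_examples, batch_size):
    # Dataset.batch keeps the final partial batch by default, hence the ceiling.
    return math.ceil(num_examples / batch_size)


print(batches_per_epoch(60000, 32))  # 1875
print(batches_per_epoch(10000, 32))  # 313
```

The last test batch holds only 16 images, since 10,000 is not a multiple of 32.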

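# Setup assumed here because this section does not show it; adjust to your own configuration.
EPOCHS = 10  # matches the ten epochs in the log below
model = ResNet()
loss_object = tf.keras.losses.SparseCategoricalCrossentropy()  # integer labels, softmax outputs
optimizer = tf.keras.optimizers.Adam()  # assumption: the source does not name the optimizer
train_loss = tf.keras.metrics.Mean(name='train_loss')
train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')
test_loss = tf.keras.metrics.Mean(name='test_loss')
test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')

for epoch in range(EPOCHS):
    for images, labels in train_ds:
        train_step(model, images, labels, loss_object, optimizer, train_loss, train_accuracy)

    for test_images, test_labels in test_ds:
        test_step(model, test_images, test_labels, loss_object, test_loss, test_accuracy)

    template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
    print(template.format(epoch + 1,
                          train_loss.result(),
                          train_accuracy.result() * 100,
                          test_loss.result(),
                          test_accuracy.result() * 100))

# The metrics are reset only after the last epoch, so each printed value is a
# running average over all epochs so far (consistent with the log below).
# Move these four calls into the epoch loop to report per-epoch values instead.
train_loss.reset_states()
train_accuracy.reset_states()
test_loss.reset_states()
test_accuracy.reset_states()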
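
Where the `reset_states()` calls run matters because the metric objects accumulate every value passed to them until they are reset. The sketch below imitates that behavior in plain Python, assuming the metrics act like `tf.keras.metrics.Mean`; `RunningMean` is a hypothetical stand-in, not a TensorFlow class.

```python
class RunningMean:
    """Pure-Python stand-in for an accumulating mean metric (hypothetical helper)."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def update(self, value):
        self.total += value
        self.count += 1

    def result(self):
        return self.total / self.count if self.count else 0.0

    def reset(self):
        self.total = 0.0
        self.count = 0


metric = RunningMean()

for loss in (0.5, 0.25):       # batch losses of "epoch 1"
    metric.update(loss)
print(metric.result())         # 0.375

for loss in (0.125, 0.125):    # batch losses of "epoch 2", no reset in between
    metric.update(loss)
print(metric.result())         # 0.25, the mean over both epochs

metric.reset()
for loss in (0.125, 0.125):    # the same "epoch 2" after a reset
    metric.update(loss)
print(metric.result())         # 0.125, the mean of epoch 2 alone
```

Without a reset between epochs, later epochs are averaged together with earlier ones, so the reported numbers change more slowly than the per-epoch values do.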

Epoch 1, Loss: 0.1362374722957611, Accuracy: 96.26166534423828, Test Loss: 0.043587375432252884, Test Accuracy: 98.6500015258789

Epoch 2, Loss: 0.09931698441505432, Accuracy: 97.2874984741211, Test Loss: 0.046643007546663284, Test Accuracy: 98.68999481201172

Epoch 3, Loss: 0.0823485255241394, Accuracy: 97.7411117553711, Test Loss: 0.044813815504312515, Test Accuracy: 98.79666900634766

Epoch 4, Loss: 0.07157806307077408, Accuracy: 98.02749633789062, Test Loss: 0.04259391874074936, Test Accuracy: 98.84249877929688

Epoch 5, Loss: 0.06405625492334366, Accuracy: 98.22633361816406, Test Loss: 0.04102792590856552, Test Accuracy: 98.85600280761719

Epoch 6, Loss: 0.05831533297896385, Accuracy: 98.37750244140625, Test Loss: 0.04298485442996025, Test Accuracy: 98.81666564941406

Epoch 7, Loss: 0.05337631702423096, Accuracy: 98.5088119506836, Test Loss: 0.04212458059191704, Test Accuracy: 98.83428955078125

Epoch 8, Loss: 0.04950246587395668, Accuracy: 98.61895751953125, Test Loss: 0.04147474840283394, Test Accuracy: 98.85625457763672

Epoch 9, Loss: 0.04653759300708771, Accuracy: 98.70240783691406, Test Loss: 0.040949009358882904, Test Accuracy: 98.87777709960938

Epoch 10, Loss: 0.044119786471128464, Accuracy: 98.77149963378906, Test Loss: 0.04024982452392578, Test Accuracy: 98.91099548339844