6.Text Understanding with the Attention Sum Reader Network (2016)

목차

- Abstract

- Introduction

- Task and Dataset

- Formal Task Description

- Datasets

- Our Model - Attention Sum Reader

- Formal Description

- Model Instance Details

- Related Works

Abstract

Trend : use DL to cloze-style context-question

This paper presents “new, simple model” that uses “attention” to “directly” pick the answer from the context

1. Introduction

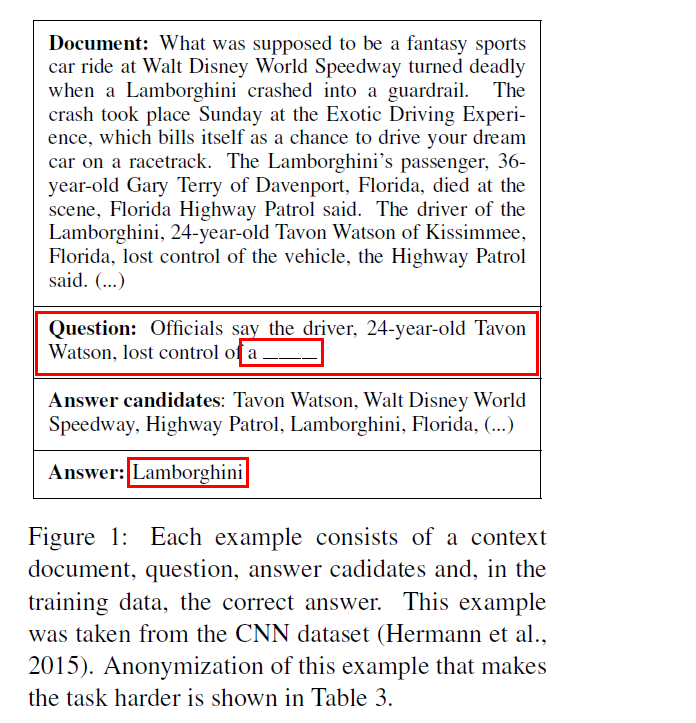

Cloze style question

-

questions formed by “removing a phrase” from a sentence

-

one way to alter the task difficulty : vary the word type being replaced

-

as opposed to selecting a random sentence from a text, questions can be formed from a specific part of a document ( ex. short summary )

-

example )

2. Task and Dataset

2-1. Formal Task Description

training data : \((\mathbf{q}, \mathbf{d}, a, A)\)

- \(\mathbf{q}\) : question

- \(\mathbf{d}\) : document

- \(A\) : set of possible answers & \(a \in A\).

2-2. Datasets

1) News Articles : CNN and Daily Mail

2) Children’s Book test

3. Our Model - Attention Sum Reader

Structured as follows :

-

1) compute vector embedding of QUERY

-

2) compute vector embedding of WORD , in the context of whole document

( =”contextual embedding “)

-

3) dot product between 1) & 2) \(\rightarrow\) select the most likely answer

3-1. Formal Description

structure

-

1 embedding function ( \(e\) )

-

2 encoder functions ( \(f\) & \(g\) )

-

\(f\) : DOCUMENT encoder ( = implements “contextual embedding “)

( \(f_i({\mathbf{d}})\) : contextual embedding of the \(i\)-th word )

-

\(g\) : QUERY encoder

( translate the query \(\mathbf{q}\) into a fixed length representation, same dimension as \(f_i({\mathbf{d}}))\)

-

-

compute the “weight” for every word as “dot product”

-

Softmax Function

-

model probability \(s_i\)

-

answer to query \(\mathbf{q}\) appears at position \(i\) in the document \(\mathbf{d}\) :

\(s_{i} \propto \exp \left(f_{i}(\mathbf{d}) \cdot g(\mathbf{q})\right)\).

-

-

Probability that word \(w\) is the correct answer : \(P(w \mid \mathbf{q}, \mathbf{d}) \propto \sum_{i \in I(w, \mathbf{d})} s_{i}\).

( where \(I(w, \mathbf{d})\) : set of positions where \(w\) appears in the document \(\mathbf{d}\) )

3-2. Model Instance Details

(1) Document encoder, \(f\)

- biGRU

- \(f_{i}(\mathbf{d})=\overrightarrow{f_{i}}(\mathbf{d}) \mid \mid \overleftarrow{f_{i}}(\mathbf{d})\). ( \(\mid \mid\) : vector concatenation )

(2) Query encoder, \(q\)

- biGRU

- \(g(\mathbf{q})=\overline{g_{\mid \mathbf{q} \mid }}(\mathbf{q}) \mid \mid \overleftarrow{g_{1}}(\mathbf{q})\).

(3) Word Embedding function, \(e\)

- look-up table \(V\)….. \(e(w)=V_{w}, w \in V\)

- each row of \(V\) contains embedding of one word from the vocabulary

During training, we jointly optimize \(g\), \(q\), \(e\)

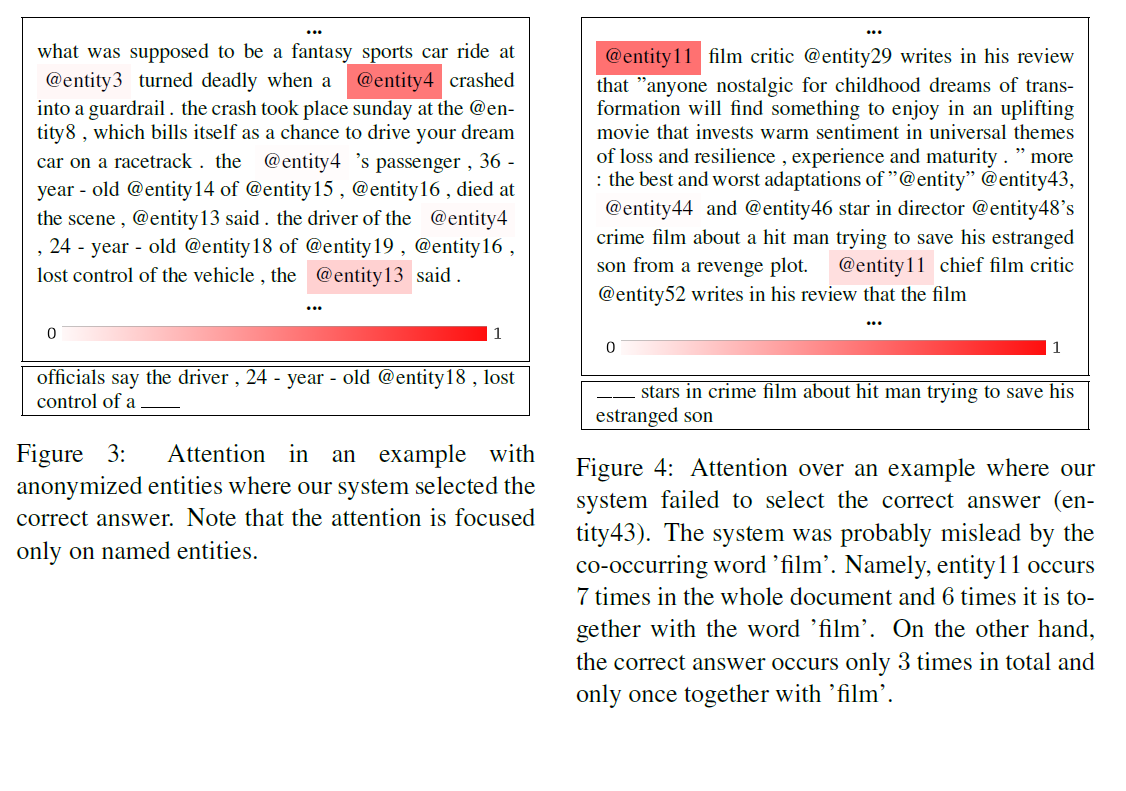

Result

4. Related Work

recent DNNs are applied to the task of “text comprehension”

\(\rightarrow\) mostly uses “attention mechanism”

- Attentive and Impatient Readers

- Chen et al. 2016

- Memory Networks

- Dynamic Entity Representation

- Pointer Networks

Summary

-

this model combines the best features of architectures above

-

1) use RNN to “read” the document & query

2) use attention

3) use summation of attention weights

-

use the attention “DIRECTLY” to compute the answer probability