[ CS224W - Colab 2 ]

( Reference : CS224W: Machine Learning with Graphs )

( Reference : https://github.com/luciusssss/CS224W-Colab/blob/main/CS224W-Colab%202.ipynb )

PyTorch Geometric (PyG) provides two main modules for storing graphs and converting them into tensors

torch_geometric.datasets

- contains a variety of common graph datasets

torch_geometric.data

- provides the data handling of graphs as PyTorch tensors

[ PyG Datasets ]

torch_geometric.datasets

from torch_geometric.datasets import TUDataset

root = './enzymes'

name = 'ENZYMES'

pyg_dataset= TUDataset(root,name)

1. Introducing the ENZYMES dataset

- number of classes

- number of features

number_of_classes = pyg_dataset.num_classes # 6

number_of_features = pyg_dataset.num_features # 3

2. Label of Graph

- What is the label of the graph at a given index?

- Result : the graph at index 100 has label 4

idx=100

pyg_dataset[idx].y[0] # 4

3. Number of Edges

- How many edges does the graph at a given index have?

idx=200

pyg_dataset[idx].edge_index.shape[1]
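PyG stores edges in COO format as a `2 x num_edges` tensor, and an undirected edge is normally stored once per direction, so `edge_index.shape[1]` counts directed entries. A minimal pure-Python sketch of that distinction, using a made-up toy graph rather than ENZYMES data:

```python
# Toy graph in COO form: row 0 = source nodes, row 1 = target nodes.
# The undirected edges 0-1, 1-2 and 2-0 are each stored in both directions.
edge_index = [
    [0, 1, 1, 2, 2, 0],
    [1, 0, 2, 1, 0, 2],
]

# Analogue of edge_index.shape[1]: number of directed entries.
num_directed = len(edge_index[0])

# Collapse (u, v) and (v, u) into a single undirected edge.
undirected = {tuple(sorted(pair)) for pair in zip(*edge_index)}
num_undirected = len(undirected)

print(num_directed, num_undirected)
```

If the question asks for undirected edges, the directed count would therefore need to be halved, assuming the graph has no self-loops and stores both directions.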

[ Open Graph Benchmark (OGB) ]

A collection of realistic, large-scale, and diverse benchmark datasets for machine learning on graphs

( OGB also supports the PyG dataset and data classes )

Downloading the dataset

- the ogbn-arxiv dataset from OGB

import torch_geometric.transforms as T

from ogb.nodeproppred import PygNodePropPredDataset

dataset_name = 'ogbn-arxiv'

dataset = PygNodePropPredDataset(name=dataset_name,
                                 transform=T.ToSparseTensor())

print(len(dataset)) # 1 ... the dataset contains a single graph

data = dataset[0]

4. Number of Features in ogbn-arxiv

data.num_features

[ GNN : Node Property Prediction ]

We will build a GNN with PyTorch Geometric

( for node classification )

- using PyG's GCNConv layer

(1) Import Packages

import torch

import torch.nn.functional as F

from torch_geometric.nn import GCNConv

import torch_geometric.transforms as T

from ogb.nodeproppred import PygNodePropPredDataset, Evaluator

device = 'cuda' if torch.cuda.is_available() else 'cpu'

(2) Load & Preprocess Dataset

- Load data

dataset_name = 'ogbn-arxiv'

dataset = PygNodePropPredDataset(name=dataset_name,

transform=T.ToSparseTensor())

data = dataset[0]

- Preprocess data

# Make the adjacency matrix symmetric
data.adj_t = data.adj_t.to_symmetric()
data = data.to(device)

# Get the train / valid / test split indices
split_idx = dataset.get_idx_split()
train_idx = split_idx['train'].to(device)
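ogbn-arxiv is a directed citation graph, so symmetrizing the adjacency matrix lets information flow in both directions along each citation. A rough pure-Python sketch of the idea, on a hypothetical edge list (the helper name `to_symmetric_sketch` is made up and is not the PyG implementation):

```python
def to_symmetric_sketch(edges):
    """Return the edge set in which every (u, v) is accompanied by (v, u)."""
    sym = set(edges)
    sym.update((v, u) for u, v in edges)
    return sorted(sym)

# Hypothetical directed citations: paper u cites paper v.
directed = [(0, 1), (1, 2), (3, 1)]
symmetric = to_symmetric_sketch(directed)
print(symmetric)
```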

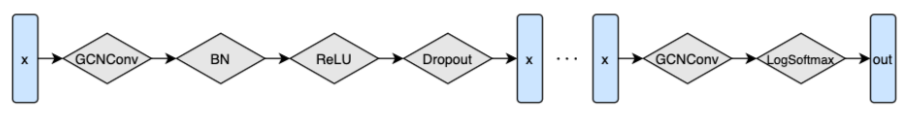

(3) Modeling ( GCN )

Model structure :

return_embeds

- True : return the raw embeddings of the last layer

- False : return the log-softmax output

class GCN(torch.nn.Module):
    def __init__(self, input_dim, hidden_dim, output_dim, num_layers,
                 dropout, return_embeds=False):
        super(GCN, self).__init__()

        # (1) GCN conv layers : input -> hidden, hidden -> hidden, hidden -> output
        self.convs = torch.nn.ModuleList(
            [GCNConv(in_channels=input_dim, out_channels=hidden_dim)] +
            [GCNConv(in_channels=hidden_dim, out_channels=hidden_dim)
             for i in range(num_layers-2)] +
            [GCNConv(in_channels=hidden_dim, out_channels=output_dim)])

        # (2) Batch norm layers ( one per conv layer, except the last )
        self.bns = torch.nn.ModuleList([
            torch.nn.BatchNorm1d(num_features=hidden_dim)
            for i in range(num_layers-1)])

        # Log-softmax over the class dimension ( dim is given explicitly )
        self.softmax = torch.nn.LogSoftmax(dim=-1)
        self.dropout = dropout
        self.return_embeds = return_embeds

    def reset_parameters(self):
        for conv in self.convs:
            conv.reset_parameters()
        for bn in self.bns:
            bn.reset_parameters()

    def forward(self, x, adj_t):
        for conv, bn in zip(self.convs[:-1], self.bns):
            x = F.relu(bn(conv(x, adj_t)))
            # Dropout is applied only in training mode
            x = F.dropout(x, p=self.dropout, training=self.training)
        x = self.convs[-1](x, adj_t)
        if self.return_embeds:
            return x
        else:
            return self.softmax(x)
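To see what a single GCN layer computes, here is a small pure-Python sketch of the propagation rule from the GCN paper, D^-1/2 (A + I) D^-1/2 X W, on a made-up 3-node path graph. It leaves out the bias term and sparse-matrix handling, so treat it as an illustration rather than GCNConv's actual implementation:

```python
import math

def gcn_layer(adj, x, weight):
    """One propagation step D^-1/2 (A + I) D^-1/2 X W on nested lists."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization by the square roots of both endpoint degrees.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]
    # Aggregate neighbor features.
    agg = [[sum(norm[i][k] * x[k][f] for k in range(n))
            for f in range(len(x[0]))] for i in range(n)]
    # Apply the linear transform.
    return [[sum(agg[i][f] * weight[f][o] for f in range(len(weight)))
             for o in range(len(weight[0]))] for i in range(n)]

adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]   # path graph 0 - 1 - 2
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 2 features per node
weight = [[1.0], [1.0]]                   # maps 2 features to 1 output
out = gcn_layer(adj, x, weight)
print(out)
```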

(4) Train function

def train(model, data, train_idx, optimizer, loss_fn):
    # (1) Training mode
    model.train()

    # (2) Reset the gradients
    optimizer.zero_grad()

    # (3) Compute the output ( for all nodes )
    y_hat = model(data.x, data.adj_t)

    # (4) Compute the loss ( on the training nodes only )
    loss = loss_fn(y_hat[train_idx], data.y[train_idx].reshape(-1))

    # (5) Back-propagation
    loss.backward()
    optimizer.step()

    return loss.item()
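The model returns log-probabilities, so the matching loss is the negative log-likelihood; together they amount to cross-entropy. A minimal pure-Python sketch with made-up logits and targets:

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax of one row of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def nll_loss(log_probs, targets):
    """Mean negative log-likelihood of the target classes."""
    return -sum(lp[t] for lp, t in zip(log_probs, targets)) / len(targets)

logits = [[2.0, 0.5, -1.0], [0.0, 0.0, 0.0]]  # 2 nodes, 3 classes
targets = [0, 2]
log_probs = [log_softmax(row) for row in logits]
loss = nll_loss(log_probs, targets)
print(loss)
```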

(5) Test function

@torch.no_grad()
def test(model, data, split_idx, evaluator):
    # (1) Evaluation mode
    model.eval()

    # (2) Compute the output ( log-probabilities & predicted class )
    y_hat = model(data.x, data.adj_t)
    y_pred = y_hat.argmax(dim=-1, keepdim=True)

    # (3) Accuracy on the train / valid / test splits
    train_acc = evaluator.eval({
        'y_true': data.y[split_idx['train']],
        'y_pred': y_pred[split_idx['train']]})['acc']
    valid_acc = evaluator.eval({
        'y_true': data.y[split_idx['valid']],
        'y_pred': y_pred[split_idx['valid']]})['acc']
    test_acc = evaluator.eval({
        'y_true': data.y[split_idx['test']],
        'y_pred': y_pred[split_idx['test']]})['acc']

    return train_acc, valid_acc, test_acc
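For ogbn-arxiv the evaluator reports plain classification accuracy. The sketch below shows the same computation (argmax over class scores, then the fraction of matches) in pure Python; the scores and labels are invented for illustration:

```python
def argmax(row):
    """Index of the largest value in a row of class scores."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(int(t == p) for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical log-probabilities for 4 nodes and 3 classes.
scores = [
    [-0.1, -2.3, -3.0],
    [-1.2, -0.4, -2.0],
    [-2.0, -1.5, -0.3],
    [-0.2, -1.9, -2.5],
]
y_true = [0, 1, 1, 0]
y_pred = [argmax(row) for row in scores]
acc = accuracy(y_true, y_pred)
print(y_pred, acc)
```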

(6) Arguments

args = {

'device': device,

'num_layers': 3,

'hidden_dim': 256,

'dropout': 0.5,

'lr': 0.01,

'epochs': 100,

}

(7) Build Model & Evaluator

model = GCN(data.num_features, args['hidden_dim'],

dataset.num_classes, args['num_layers'],

args['dropout']).to(device)

evaluator = Evaluator(name='ogbn-arxiv')

(8) Train model

import copy

# (1) Reset the parameters
model.reset_parameters()

# (2) Set up the optimizer & loss function
optimizer = torch.optim.Adam(model.parameters(), lr=args['lr'])
loss_fn = F.nll_loss

# (3) Track the best model / validation accuracy
best_model = None
best_valid_acc = 0

# (4) Start training
for epoch in range(1, 1 + args["epochs"]):
    ### Train
    loss = train(model, data, train_idx, optimizer, loss_fn)

    ### Test
    result = test(model, data, split_idx, evaluator)

    ### Record the results
    train_acc, valid_acc, test_acc = result
    if valid_acc > best_valid_acc:
        best_valid_acc = valid_acc
        best_model = copy.deepcopy(model)

    print(f'Epoch: {epoch:02d}, '
          f'Loss: {loss:.4f}, '
          f'Train: {100 * train_acc:.2f}%, '
          f'Valid: {100 * valid_acc:.2f}%, '
          f'Test: {100 * test_acc:.2f}%')
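The loop keeps a deep copy of the model whenever validation accuracy improves, so later epochs cannot overwrite the best weights. A tiny sketch of why the deep copy matters, with a plain dict standing in for the model and invented validation accuracies:

```python
import copy

model = {"weight": 0.0}                      # hypothetical stand-in for a model
valid_accs = [0.50, 0.62, 0.58, 0.66, 0.64]  # hypothetical per-epoch accuracy

best_model, best_valid_acc, best_epoch = None, 0.0, None
for epoch, valid_acc in enumerate(valid_accs, start=1):
    model["weight"] += 1.0                   # pretend one epoch of training
    if valid_acc > best_valid_acc:
        best_valid_acc = valid_acc
        best_epoch = epoch
        # Snapshot the state; a plain reference would keep changing.
        best_model = copy.deepcopy(model)

print(best_epoch, best_valid_acc, best_model, model)
```

Selecting on validation accuracy rather than test accuracy keeps the test split untouched for the final report.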

Re-evaluate all splits with the best-performing model

best_result = test(best_model, data, split_idx, evaluator)
train_acc, valid_acc, test_acc = best_result

print(f'Best model: '
      f'Train: {100 * train_acc:.2f}%, '
      f'Valid: {100 * valid_acc:.2f}%, '
      f'Test: {100 * test_acc:.2f}%')

[ GNN : Graph Property Prediction ]

This time, we use the ogbg-molhiv dataset

(1) Load the data

from ogb.graphproppred import PygGraphPropPredDataset, Evaluator

from torch_geometric.loader import DataLoader  # in PyG 2.x, DataLoader lives in torch_geometric.loader

from tqdm.notebook import tqdm

device = 'cuda' if torch.cuda.is_available() else 'cpu'

dataset = PygGraphPropPredDataset(name='ogbg-molhiv')

split_idx = dataset.get_idx_split()

(2) Create the Data Loaders

train_loader = DataLoader(dataset[split_idx["train"]], batch_size=32,

shuffle=True, num_workers=0)

valid_loader = DataLoader(dataset[split_idx["valid"]], batch_size=32,

shuffle=False, num_workers=0)

test_loader = DataLoader(dataset[split_idx["test"]], batch_size=32,

shuffle=False, num_workers=0)
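PyG's DataLoader merges the graphs of a mini-batch into one large disconnected graph: node indices are shifted per graph, and a `batch` vector records which graph each node came from. A simplified pure-Python sketch of that collation, on two made-up graphs (`collate` is an illustrative helper, not the PyG function):

```python
def collate(graphs):
    """Merge (num_nodes, edges) graphs into one disjoint graph."""
    edge_index = [[], []]
    batch = []
    offset = 0
    for graph_id, (num_nodes, edges) in enumerate(graphs):
        for u, v in edges:
            # Shift node ids so they stay unique across graphs.
            edge_index[0].append(u + offset)
            edge_index[1].append(v + offset)
        # Every node remembers which graph it belongs to.
        batch.extend([graph_id] * num_nodes)
        offset += num_nodes
    return edge_index, batch

graphs = [
    (3, [(0, 1), (1, 2)]),  # graph 0: 3 nodes
    (2, [(0, 1)]),          # graph 1: 2 nodes
]
edge_index, batch = collate(graphs)
print(edge_index, batch)
```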

(3) Argument

args = {

'device': device,

'num_layers': 5,

'hidden_dim': 256,

'dropout': 0.5,

'lr': 0.001,

'epochs': 30,

}

(4) Graph Prediction Model

from ogb.graphproppred.mol_encoder import AtomEncoder

from torch_geometric.nn import global_add_pool, global_mean_pool

class GCN_Graph(torch.nn.Module):
    def __init__(self, hidden_dim, output_dim, num_layers, dropout):
        super(GCN_Graph, self).__init__()
        # Embeds the raw atom features into hidden_dim-dimensional vectors
        self.node_encoder = AtomEncoder(hidden_dim)
        # Node-level GCN that returns embeddings instead of log-softmax
        self.gnn_node = GCN(hidden_dim, hidden_dim,
                            hidden_dim, num_layers, dropout, return_embeds=True)
        self.pool = global_mean_pool
        self.linear = torch.nn.Linear(hidden_dim, output_dim)

    def reset_parameters(self):
        self.gnn_node.reset_parameters()
        self.linear.reset_parameters()

    def forward(self, batched_data):
        x, edge_index, batch = batched_data.x, batched_data.edge_index, batched_data.batch

        # (1) Embed the input node features into hidden_dim
        embed = self.node_encoder(x)

        # (2) Pass the embedded nodes through the GCN
        embed = self.gnn_node(embed, edge_index)

        # (3) Pooling ( global mean pooling per graph )
        features = self.pool(embed, batch)

        # (4) Linear layer
        out = self.linear(features)
        return out
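Global mean pooling turns node embeddings into one vector per graph by averaging all nodes that share the same entry in `batch`. A pure-Python sketch with made-up embeddings (`mean_pool_sketch` is a hypothetical name, not the PyG function):

```python
def mean_pool_sketch(x, batch):
    """Average node feature vectors per graph id given in `batch`."""
    num_graphs = max(batch) + 1
    dim = len(x[0])
    sums = [[0.0] * dim for _ in range(num_graphs)]
    counts = [0] * num_graphs
    for feat, g in zip(x, batch):
        counts[g] += 1
        for d in range(dim):
            sums[g][d] += feat[d]
    return [[s / counts[g] for s in sums[g]] for g in range(num_graphs)]

# 5 nodes with 2-dim embeddings: 3 nodes in graph 0, 2 nodes in graph 1.
x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [10.0, 0.0], [20.0, 2.0]]
batch = [0, 0, 0, 1, 1]
pooled = mean_pool_sketch(x, batch)
print(pooled)
```

Mean pooling makes the graph vector independent of graph size; sum pooling (global_add_pool, also imported above) would instead keep size information.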

(5) Train function

def train(model, device, data_loader, optimizer, loss_fn):
    # (1) Training mode
    model.train()

    # (2) Iterate over the mini-batches
    for step, batch in enumerate(tqdm(data_loader, desc="Iteration")):
        batch = batch.to(device)

        # Skip batches that contain a single node or a single graph
        if batch.x.shape[0] == 1 or batch.batch[-1] == 0:
            pass
        else:
            # Mask of labeled entries ( NaN != NaN, so missing labels become False )
            is_labeled = batch.y == batch.y

            # (3) Reset the gradients
            optimizer.zero_grad()

            # (4) Compute the output
            y_hat = model(batch)

            # (5) Compute the loss ( on labeled entries only )
            loss = loss_fn(y_hat[is_labeled], batch.y[is_labeled].float())

            # (6) Back-propagation
            loss.backward()
            optimizer.step()

    # Loss of the last processed batch
    return loss.item()
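The `batch.y == batch.y` trick works because NaN never equals itself, so missing labels drop out of the loss. The notes do not say which `loss_fn` is used for this task; the sketch below assumes binary cross-entropy on raw logits (the usual choice for a binary task such as this one) and uses invented labels and predictions:

```python
import math

def bce_with_logits(logit, target):
    """Stable binary cross-entropy on a raw logit (assumed loss, see above)."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))

nan = float("nan")
y = [1.0, nan, 0.0, 1.0]        # second label is missing
y_hat = [2.0, 0.3, -1.0, -0.5]  # hypothetical model logits

# NaN != NaN, so comparing a label with itself flags the missing entries.
is_labeled = [t == t for t in y]
pairs = [(z, t) for z, t, keep in zip(y_hat, y, is_labeled) if keep]
loss = sum(bce_with_logits(z, t) for z, t in pairs) / len(pairs)
print(is_labeled, loss)
```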

(6) Evaluation function

def eval(model, device, loader, evaluator):
    # Evaluation mode
    model.eval()
    y_true_list = []
    y_pred_list = []

    for step, batch in enumerate(tqdm(loader, desc="Iteration")):
        batch = batch.to(device)

        # Skip batches that contain a single node
        if batch.x.shape[0] == 1:
            pass
        else:
            with torch.no_grad():
                y_hat = model(batch)

            # Collect labels and predictions on the CPU
            y_true_list.append(batch.y.view(y_hat.shape).detach().cpu())
            y_pred_list.append(y_hat.detach().cpu())

    # Concatenate all batches and hand them to the OGB evaluator
    y_true_list = torch.cat(y_true_list, dim = 0).numpy()
    y_pred_list = torch.cat(y_pred_list, dim = 0).numpy()
    input_dict = {"y_true": y_true_list, "y_pred": y_pred_list}
    eval_result = evaluator.eval(input_dict)

    return eval_result
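ogbg-molhiv is scored with ROC-AUC, which only depends on how the predictions rank positives against negatives; this is why raw model outputs can be passed to the evaluator without thresholding. A small pure-Python sketch of the pairwise definition, on invented labels and scores:

```python
def roc_auc(y_true, y_score):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

y_true = [1, 0, 1, 0, 0]
y_score = [0.9, 0.3, 0.4, 0.5, 0.1]
auc = roc_auc(y_true, y_score)
print(auc)
```

This quadratic version is only meant for reading; library implementations use sorting to get the same number far more efficiently.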

(7) Model

model = GCN_Graph(args['hidden_dim'],

dataset.num_tasks, args['num_layers'],

args['dropout']).to(device)

evaluator = Evaluator(name='ogbg-molhiv')