Transformers Can Do Arithmetic with the Right Embeddings

McLeish, Sean, et al. "Transformers Can Do Arithmetic with the Right Embeddings." NeurIPS 2024

References:

- https://aipapersacademy.com/abacus-embeddings/

- https://arxiv.org/pdf/2405.17399

Contents

- Introduction

- Abacus Embedding

  - Positional Embedding

  - Abacus Embedding

- Experiments

1. Introduction

Remarkable success of LLMs

\(\rightarrow\) Still not good at complex multi-step and algorithmic reasoning

Common approach

- Focus on simple arithmetic problems (e.g., addition) as a testbed for algorithmic reasoning

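This paper: large gains in the arithmetic capabilities of transformers, especially length generalization (e.g., trained on numbers of up to 20 digits, reaching up to 99% accuracy on 100-digit addition)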

2. Abacus Embedding

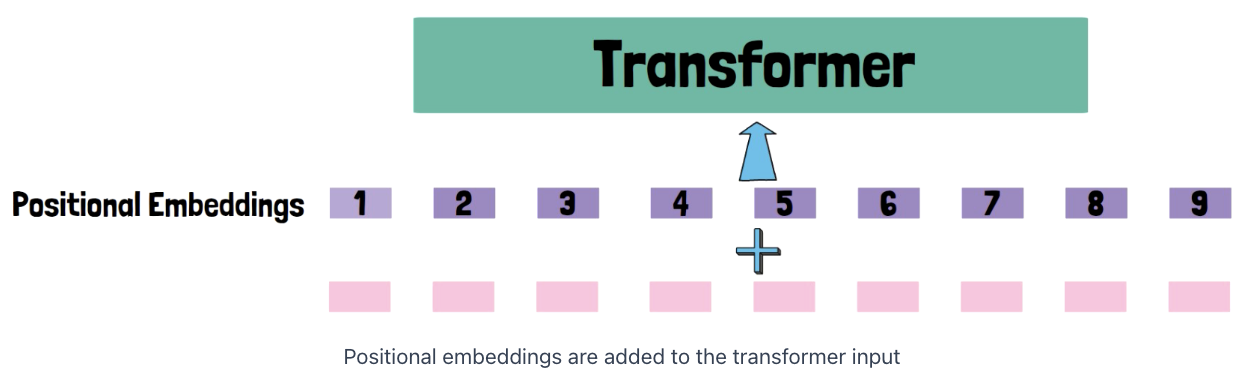

(1) Positional Embedding (PE)

Calculation (by humans) vs. PE

- Calculation: humans organize the digits in columns according to each digit's position (significance) within its number, e.g., units under units, tens under tens

- PE: standard positional embeddings do not encode the position of each digit within its number, only the position of the token in the whole sequence
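To make the mismatch concrete, here is a small sketch comparing the index a standard PE would use with the column a human would line each digit up in. It assumes character-level tokens; the helper names `absolute_positions` and `column_index` are hypothetical.

```python
def absolute_positions(tokens):
    """Index used by a standard PE: the token's position in the whole sequence."""
    return list(range(len(tokens)))


def column_index(tokens):
    """Column a human would use: significance of each digit within its own
    number (0 = units, 1 = tens, ...). Non-digit tokens get None."""
    cols = [None] * len(tokens)
    i = 0
    while i < len(tokens):
        if tokens[i].isdigit():
            # Find the end of this run of digits (one number).
            j = i
            while j < len(tokens) and tokens[j].isdigit():
                j += 1
            # The rightmost digit of the run is the units digit.
            for k in range(i, j):
                cols[k] = j - 1 - k
            i = j
        else:
            i += 1
    return cols


tokens = list("123+45=168")  # character-level tokenization (assumed)
pos = absolute_positions(tokens)
cols = column_index(tokens)
print(pos)   # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(cols)  # [2, 1, 0, None, 1, 0, None, 2, 1, 0]

# The three units digits ('3', '5', '8') share column 0
# but get three unrelated sequence positions.
print([p for p, c in zip(pos, cols) if c == 0])  # [2, 5, 9]
```

For a human the units digits sit in one column, while the model sees them at positions 2, 5 and 9, and those positions shift whenever an operand gets longer.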

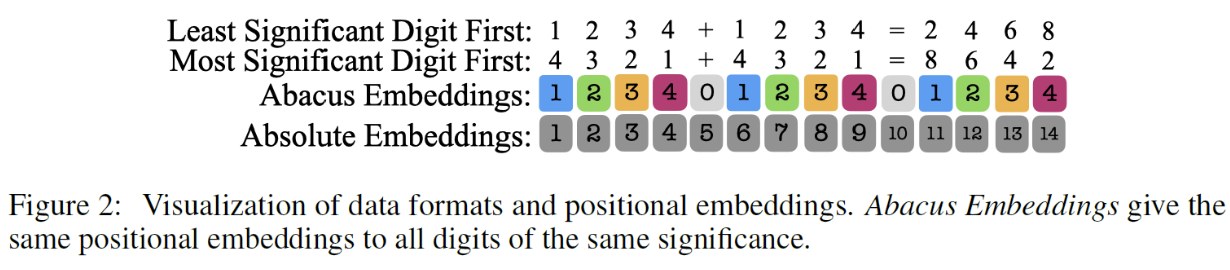

(2) Abacus Embedding

Solution: Use a new type of positional embedding!

Abacus embeddings

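- Assign the same positional embedding to all digits of the same significance, across every number in the sequence (e.g., all units digits share one embedding, all tens digits share another)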
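A minimal sketch of the idea follows; it is not the authors' code. Assumptions: character-level tokens; each digit gets index 1 + its significance (units -> 1, tens -> 2, ...), which equals left-to-right counting when numbers are written least-significant digit first; non-digit tokens get index 0. The `offset` argument models the random shift of digit indices during training that, as we understand the paper, is used to help length generalization. `MAX_DIGITS`, `MAX_OFFSET`, `make_table` and the random table (a stand-in for a learned embedding layer) are hypothetical.

```python
import random

MAX_DIGITS = 20    # hypothetical: longest operand seen in training
MAX_OFFSET = 100   # hypothetical: largest random shift applied during training


def abacus_indices(tokens, offset=0):
    """Abacus-style index per token: 1 + significance of each digit within
    its own number (units -> 1, tens -> 2, ...), plus `offset`.
    Digits of equal significance in different numbers share an index.
    Non-digit tokens get 0 (assumption: no digit position)."""
    idx = [0] * len(tokens)
    i = 0
    while i < len(tokens):
        if tokens[i].isdigit():
            j = i
            while j < len(tokens) and tokens[j].isdigit():
                j += 1
            for k in range(i, j):
                idx[k] = offset + (j - k)  # rightmost (units) digit -> offset + 1
            i = j
        else:
            i += 1
    return idx


def make_table(num_rows, dim, seed=0):
    """Hypothetical stand-in for a learned embedding table (small random init)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_rows)]


def add_abacus_embeddings(token_vecs, indices, table):
    """Add the table row selected by each token's abacus index to its token vector."""
    return [[t + p for t, p in zip(vec, table[i])] for vec, i in zip(token_vecs, indices)]


tokens = list("123+45=168")
idx = abacus_indices(tokens)
print(idx)  # [3, 2, 1, 0, 2, 1, 0, 3, 2, 1]

# Training-time variant: shift all digit indices by one random offset per example.
train_idx = abacus_indices(tokens, offset=random.randint(0, MAX_OFFSET))

dim = 4
table = make_table(MAX_OFFSET + MAX_DIGITS + 1, dim)
token_vecs = [[0.0] * dim for _ in tokens]  # zero token embeddings, for illustration
out = add_abacus_embeddings(token_vecs, idx, table)
# All units digits ('3', '5', '8') receive the same positional vector.
print(out[2] == out[5] == out[9])  # True
```

Because the index depends only on a digit's significance, the units digits of both operands and of the answer share one embedding regardless of how long the numbers are. The random offset lets the model see larger indices during training than short numbers alone would produce.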

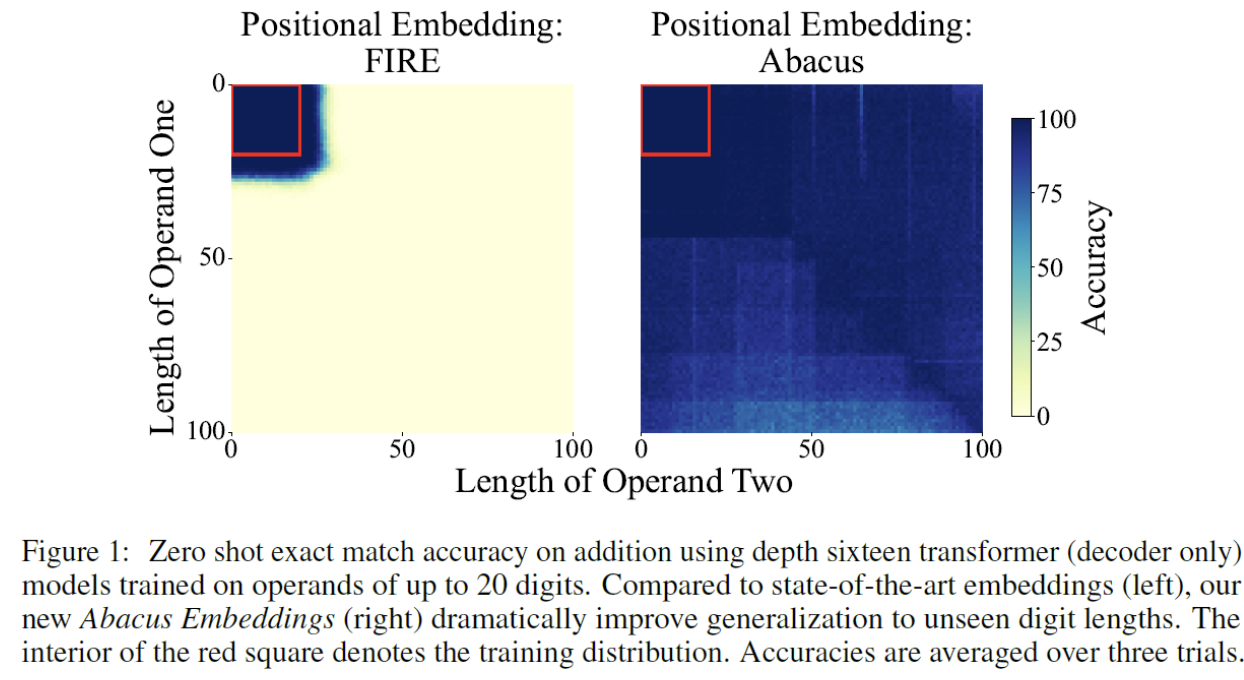

3. Experiments