Mixture-of-Agents Enhances Large Language Model Capabilities

Wang, Junlin, et al. "Mixture-of-Agents Enhances Large Language Model Capabilities."

References:

- https://aipapersacademy.com/mixture-of-agents/

- https://arxiv.org/pdf/2406.04692

Contents

- Introduction

- The Mixture-of-Agents Method

- Experiments

1. Introduction

Various LLMs

- e.g., GPT-4, Llama 3, Qwen, Mixtral …

Mixture-of-Agents = multiple LLMs collaborating as a team

\(\rightarrow\) Get a response that is powered by multiple LLMs!

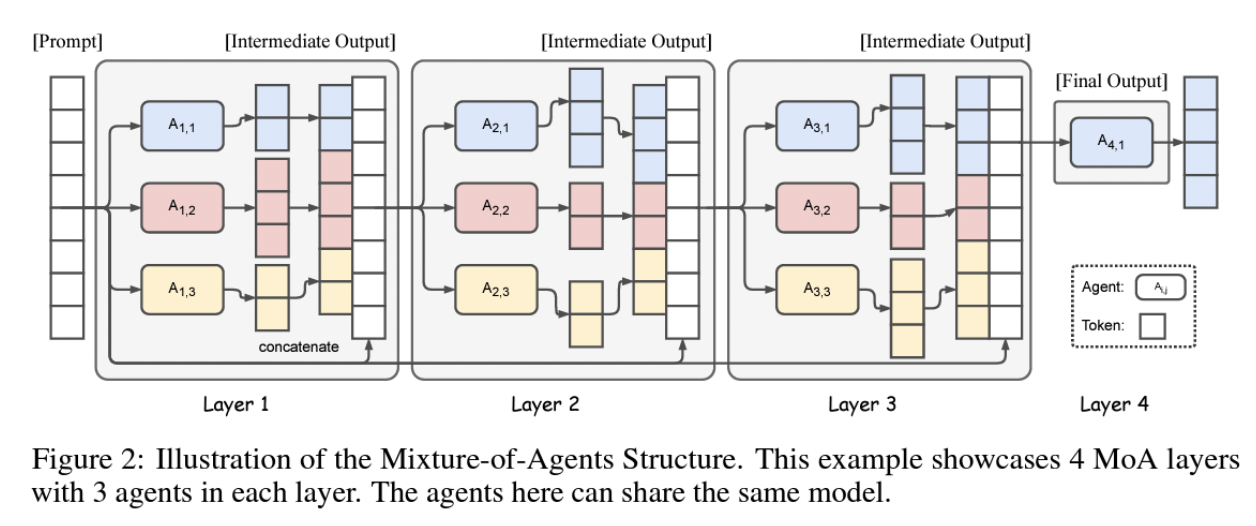

2. The Mixture-of-Agents Method

Mixture-of-Agents

= Built from multiple layers

- Each layer has multiple LLMs

MoE vs. MoA

- MoE: Experts = Parts of the same model

- MoA: Experts = Full-fledged LLMs.

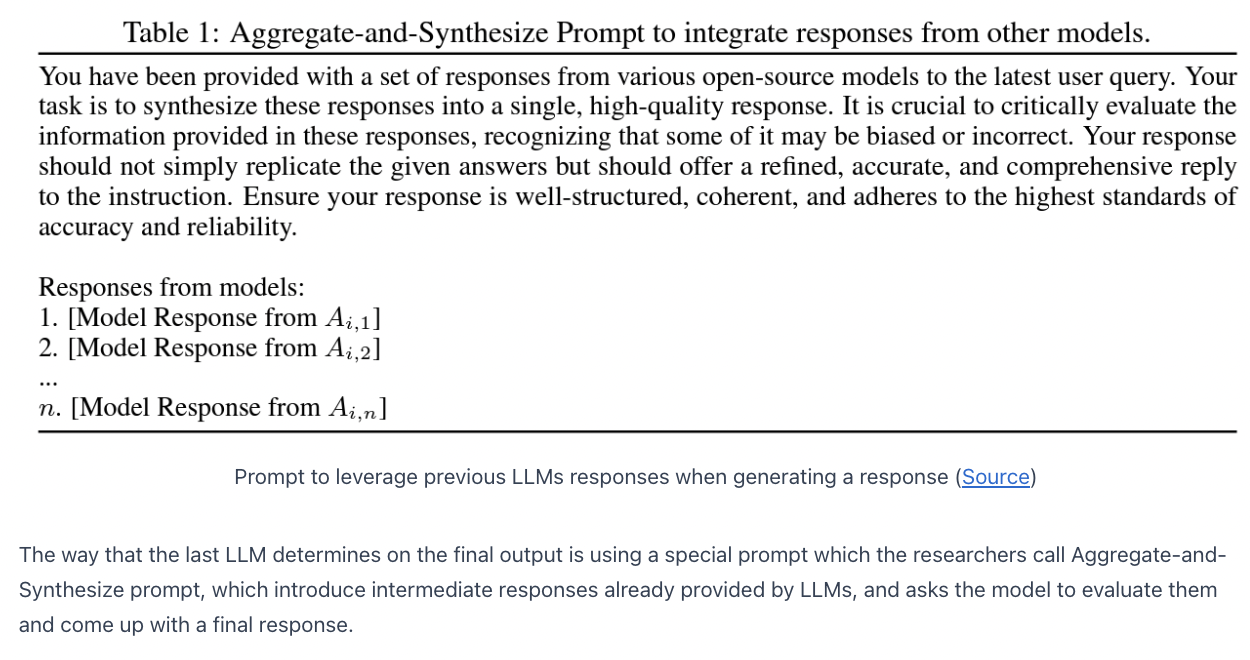

Final layer: only a single LLM

- (Input) the original prompt plus the responses gathered from the previous layers
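
The layered flow described above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: the `Agent` type, `build_prompt`, `mixture_of_agents`, and `make_stub` are hypothetical names, the prompt template is made up, and the stub agents stand in for real LLM calls. The sketch also assumes each layer only sees the responses of the layer directly before it.

```python
from typing import Callable, List, Sequence

# Hypothetical interface: an agent takes prompt text and returns response text.
Agent = Callable[[str], str]


def build_prompt(user_prompt: str, responses: Sequence[str]) -> str:
    """Append the previous layer's responses to the original prompt."""
    if not responses:
        return user_prompt  # first layer: only the original prompt
    numbered = "\n".join(f"{i}. {r}" for i, r in enumerate(responses, start=1))
    # Illustrative template; the wording is an assumption.
    return f"{user_prompt}\n\nResponses from the previous layer:\n{numbered}"


def mixture_of_agents(
    user_prompt: str,
    layers: Sequence[Sequence[Agent]],
    final_agent: Agent,
) -> str:
    """Run the prompt through every layer, then through a single final LLM."""
    responses: List[str] = []
    for layer in layers:
        layer_input = build_prompt(user_prompt, responses)
        # Every agent in the layer answers the same augmented prompt.
        responses = [agent(layer_input) for agent in layer]
    # Final layer: one LLM sees the prompt plus the gathered responses.
    return final_agent(build_prompt(user_prompt, responses))


def make_stub(name: str) -> Agent:
    """Stand-in for a real LLM call; reports how many lines it received."""
    def agent(prompt: str) -> str:
        return f"{name} saw {len(prompt.splitlines())} lines"
    return agent


if __name__ == "__main__":
    layers = [[make_stub("a"), make_stub("b")], [make_stub("c")]]
    print(mixture_of_agents("Q", layers, make_stub("d")))
```

Swapping each stub for a function that calls an actual model would keep the same control flow; only the `Agent` callables change.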

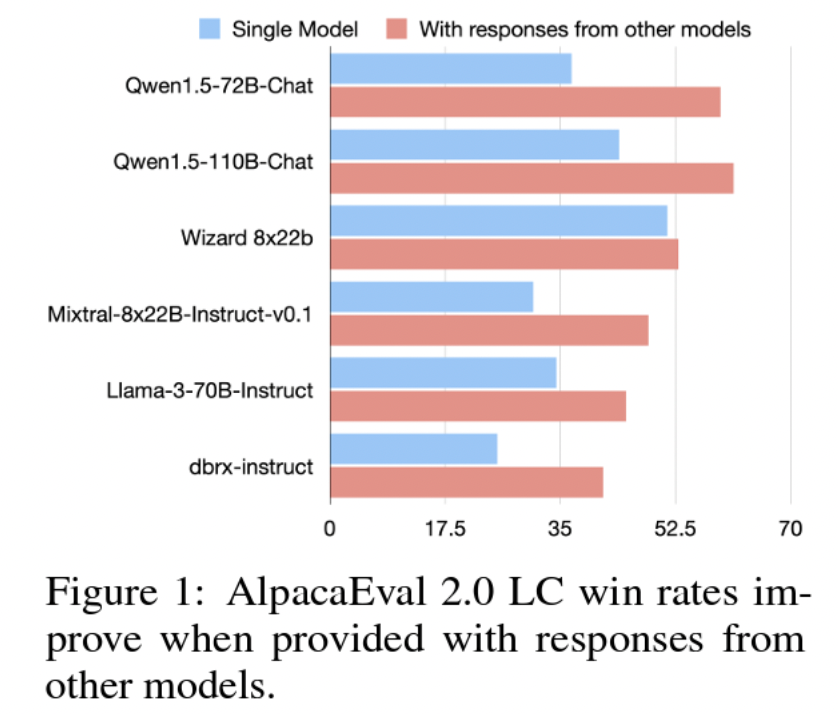

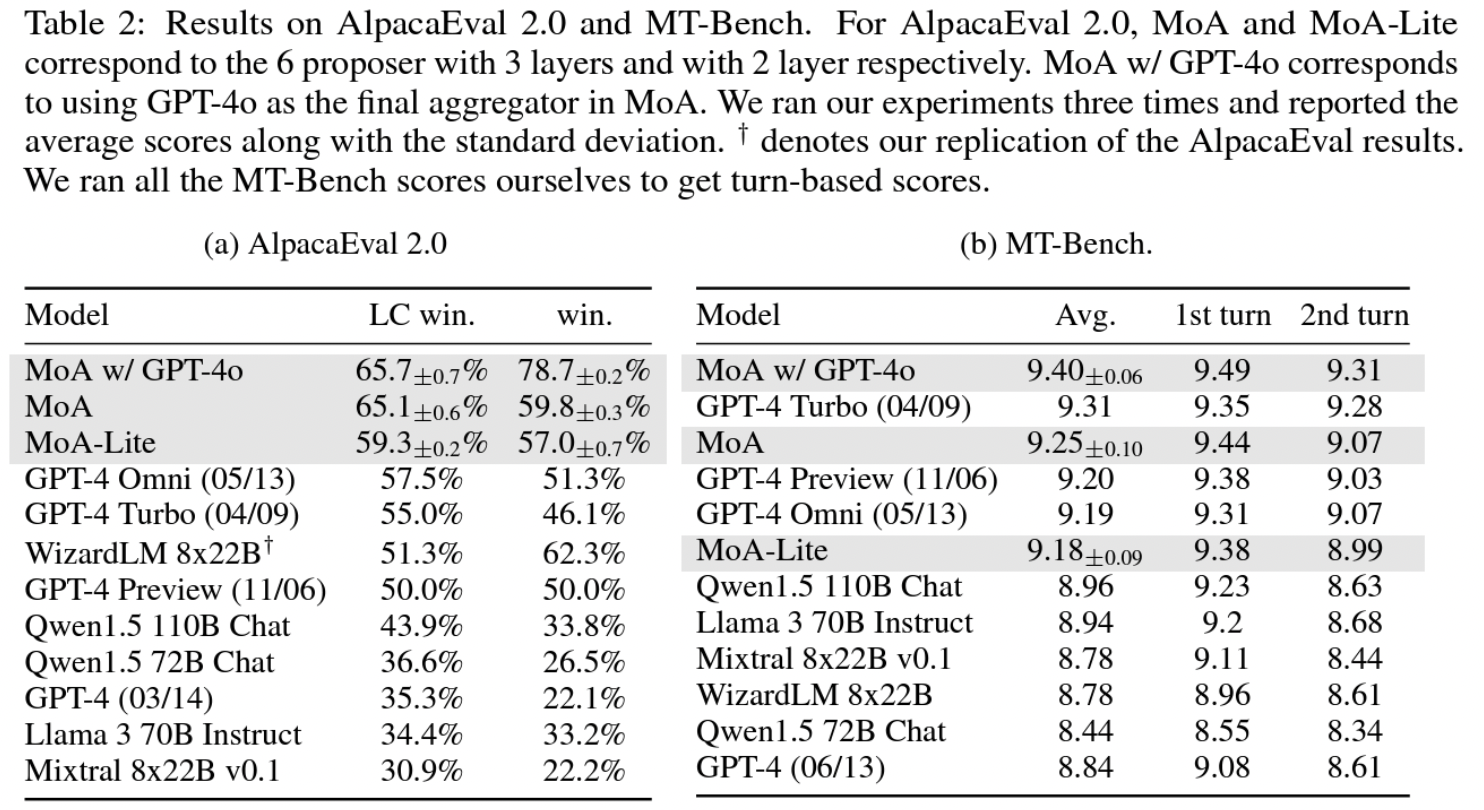

3. Experiments