Orca 2: Teaching Small Language Models How to Reason

Mitra, Arindam, et al. "Orca 2: Teaching small language models how to reason." arXiv preprint arXiv:2311.11045 (2023).

References:

- https://aipapersacademy.com/orca-2/

- https://arxiv.org/pdf/2311.11045

Contents

- Orca 1 Recap

  - Imitation learning

  - Explanation tuning

- Orca 2 Improvements

  - Use the right tool for the job

  - Cautious reasoning

- Training Orca 2

- Experiments

1. Orca 1 Recap

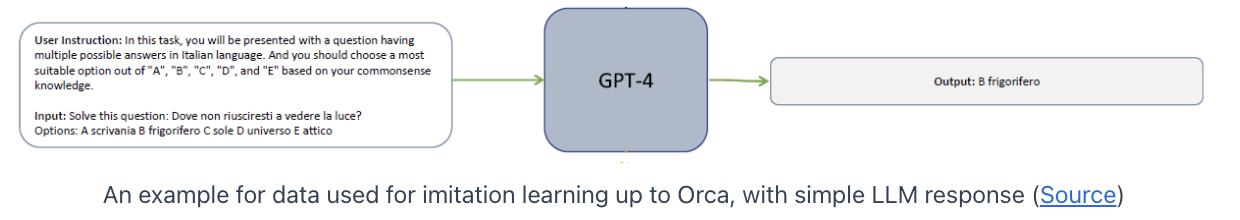

(1) Imitation learning

Fine-tuning the base model on a dataset,

where the dataset is created using responses from ChatGPT or GPT-4

(2) Explanation tuning

(Before Orca 1) Student models mostly learned to imitate the teacher model's style, rather than its reasoning process

- \(\because\) The responses used for fine-tuning were mostly simple and short

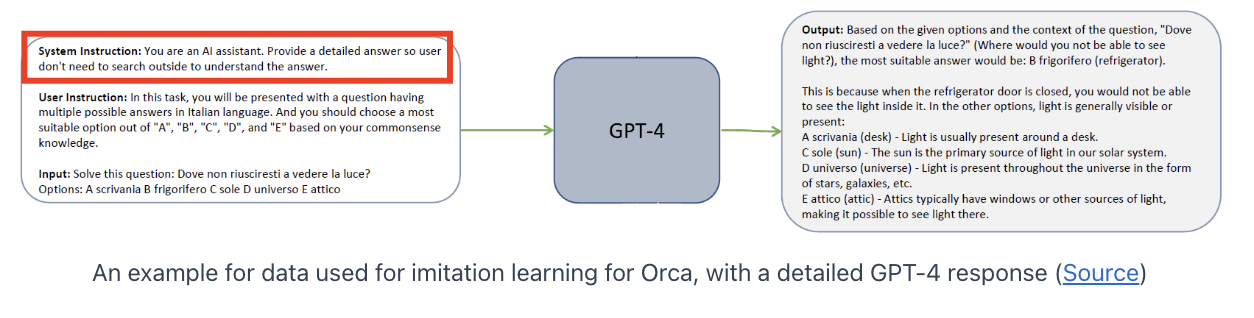

Orca 1: Explanation tuning

- Student model learns the thought process of the teacher model

- How is the Orca 1 dataset constructed?

  - Add a “system instruction”

    \(\rightarrow\) Provides guidelines for GPT-4 regarding how it should generate the response
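As a minimal sketch of the dataset construction described above: each training example pairs a system instruction and a user query with the teacher's response. The `ExplanationTuningExample` class, the `build_example` helper, and `fake_teacher` (a stand-in for a real GPT-4 call) are hypothetical names for illustration, not the paper's code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExplanationTuningExample:
    # Hypothetical record layout: (system instruction, user query, teacher response)
    system_instruction: str
    user_query: str
    teacher_response: str


def build_example(
    system_instruction: str,
    user_query: str,
    teacher: Callable[[str, str], str],
) -> ExplanationTuningExample:
    """Ask the teacher for a response guided by the system instruction and store the triple."""
    response = teacher(system_instruction, user_query)
    return ExplanationTuningExample(system_instruction, user_query, response)


def fake_teacher(system_instruction: str, user_query: str) -> str:
    # Stand-in for a GPT-4 call (assumption): returns a canned string
    # so that the sketch runs offline.
    return f"[response to '{user_query}' guided by: {system_instruction}]"


example = build_example(
    "Think step by step and justify your answer.",
    "What is 17 * 3?",
    fake_teacher,
)
print(example.teacher_response)
```

The student is then fine-tuned on such triples, so it sees detailed explanations rather than short answers only.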

2. Orca 2 Improvements

Orca 2: the model weights are released

Two contributions

- Use the right tool for the job.

- Learn to use the right tool for the job ( = Cautious Reasoning )

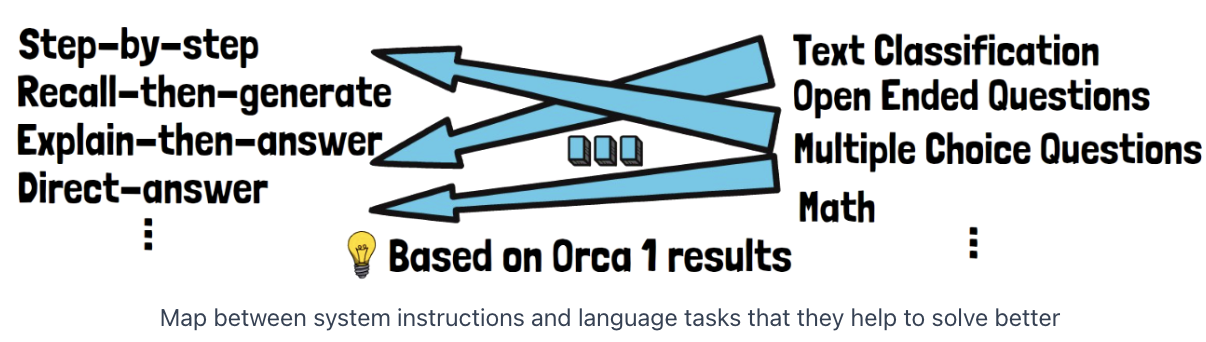

(1) Use the right tool for the job

There are various types of system instructions

- ex) step-by-step

- ex) recall-then-generate

- ex) explain-then-answer

- ex) direct-answer

- …

Each system instruction guides the model to use a specific solution strategy that helps the model to reach the correct answer.

Observation: Not every system instruction matches every user instruction!

Orca 2: Map properly between solution strategies and user-instruction types

\(\rightarrow\) The responses the model is trained on will be more accurate!

Then, how to assign?

\(\rightarrow\) Run Orca 1 on examples for a certain task type, and see which system instructions perform better on that task type.
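The assignment step can be sketched as follows, assuming we already have per-example correctness results for each (task type, system instruction) pair. The function name `best_strategy_per_task`, the task names, and the toy results are made up for illustration; they are not numbers from the paper.

```python
from collections import defaultdict


def best_strategy_per_task(results):
    """Pick, for each task type, the strategy with the highest accuracy.

    `results` is an iterable of (task_type, strategy, is_correct) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # (task, strategy) -> [correct, total]
    for task_type, strategy, is_correct in results:
        entry = counts[(task_type, strategy)]
        entry[0] += int(is_correct)
        entry[1] += 1

    best = {}  # task_type -> (strategy, accuracy)
    for (task_type, strategy), (correct, total) in counts.items():
        accuracy = correct / total
        if task_type not in best or accuracy > best[task_type][1]:
            best[task_type] = (strategy, accuracy)
    return {task: strategy for task, (strategy, _) in best.items()}


# Toy evaluation log (illustrative values only).
toy_results = [
    ("math_word_problem", "step-by-step", True),
    ("math_word_problem", "step-by-step", True),
    ("math_word_problem", "direct-answer", False),
    ("math_word_problem", "direct-answer", True),
    ("trivia", "direct-answer", True),
    ("trivia", "step-by-step", False),
]
mapping = best_strategy_per_task(toy_results)
print(mapping)
```

With this toy log, the math task maps to step-by-step and the trivia task maps to direct-answer; the resulting mapping decides which system instruction is used when generating training responses for each task type.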

(2) Cautious reasoning

Cautious reasoning

= The model learns to use the “right tool” for the job.

= Deciding which “solution strategy” to choose for a given task

Given a user instruction, Orca 2, as a cautious reasoner, should be able to choose the proper reasoning technique for that instruction,

( even without a system instruction that guides it toward that strategy )

\(\rightarrow\) via Prompt Erasing

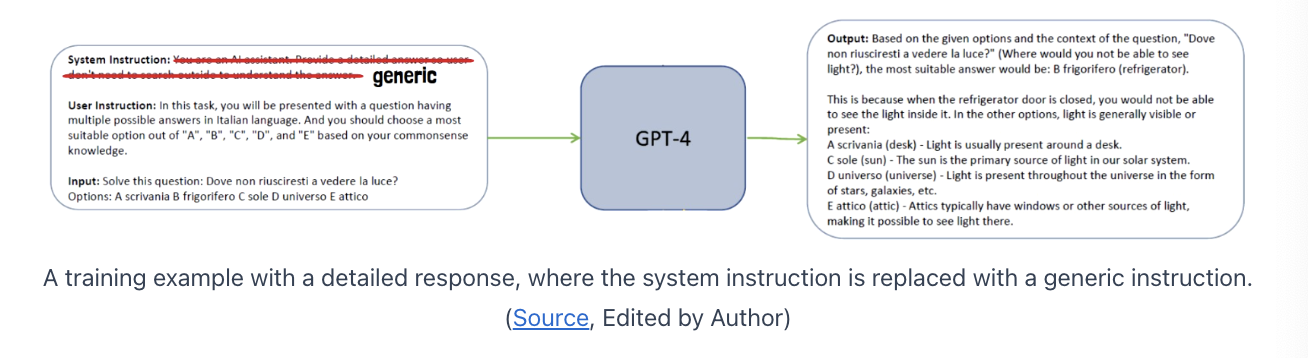

Prompt Erasing

- (At training time) Replace the specific system instruction with a generic system instruction.

- By observing the response without the original system instruction during training…

  \(\rightarrow\) Orca 2 learns to decide which solution strategy to use for each task type

Generic System Instruction

You are Orca, an AI language model created by Microsoft. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

\(\rightarrow\) Does not contain specific details about how to generate the response!!
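Prompt erasing can be illustrated with a small sketch: the teacher's response is kept, but the strategy-specific system instruction is swapped for the generic one before the student trains on the example. The dictionary keys (`system`, `user`, `response`), the `erase_prompt` helper, and the sample example are assumptions for illustration, not the paper's data format.

```python
GENERIC_SYSTEM_INSTRUCTION = (
    "You are Orca, an AI language model created by Microsoft. "
    "You are a cautious assistant. You carefully follow instructions. "
    "You are helpful and harmless and you follow ethical guidelines "
    "and promote positive behavior."
)


def erase_prompt(example: dict) -> dict:
    """Return a copy of the example with the system instruction replaced by the generic one."""
    erased = dict(example)  # shallow copy so the teacher-side example is untouched
    erased["system"] = GENERIC_SYSTEM_INSTRUCTION
    return erased


# Example as seen by the teacher: the system instruction names the strategy.
teacher_example = {
    "system": "Solve the problem step by step, then state the final answer.",
    "user": "A train travels 60 km in 1.5 hours. What is its average speed?",
    "response": "Step 1: speed = distance / time. Step 2: 60 / 1.5 = 40. Answer: 40 km/h.",
}

# Example as seen by the student: same response, but no hint about the strategy.
student_example = erase_prompt(teacher_example)
print(student_example["system"])
```

The student still sees the step-by-step response, but nothing in its input tells it to reason step by step, so it has to learn when that strategy fits.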

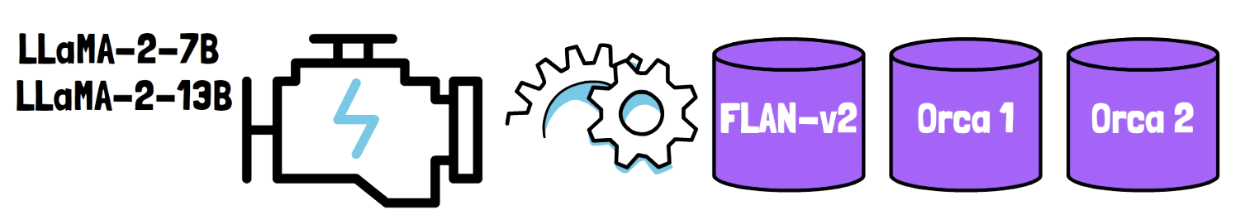

3. Training Orca 2

- Step 1) Start with the 7B and 13B LLaMA-2 models

- Step 2) Continue training on data from:

  - (1) FLAN-v2 dataset

    - Dataset from the first Orca paper

  - (2) New dataset

    - Created for Orca 2, based on the two ideas described above

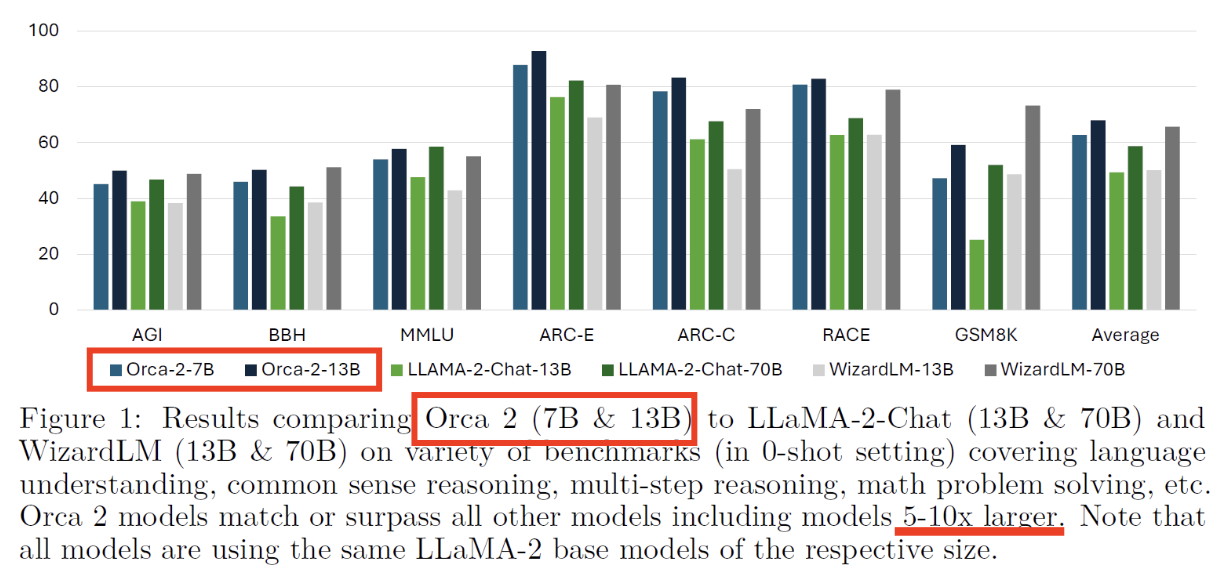

4. Experiments