5. K-means from EM perspective

You might have heard of, or already know about K-means clusteirng, which is the most widely known (hard) clustering method.

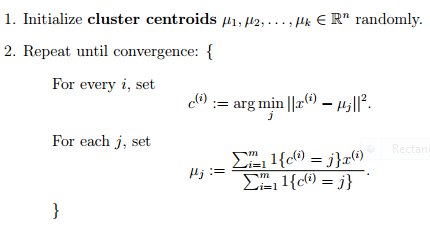

The algorithm of K-means is as below ( I will not explain about it )

https://stanford.edu/~cpiech/cs221/img/kmeansMath.png

Actually, K-means can also be interpreted with an EM algorithm. Can you notice from the pseudocode above?

a. From GMM to K-means

fix covariance to be identical

fix weights of the prior to be uniform

The, that’s all! If we make those two assumptions, the density of each data point, given that we know the cluster, will look like this.

b. E-step of K-means

q function of k-means is a delta function, like below.

c_i, which is a cluster for each data point i, is the c that makes the density of each data point the maximum.

But as you can see in ,

we can change c_i like the below.

This is exactly the same as the K-means algorithm!

c. M-step of K-means

remember, M-step of GMM was maximizing the following expression.

And after the optimization, we found out that mu_c is

In the equation above, let q function be a delta function

Then, we can get the following equation for mu_c, which is exactly the same as the k-means algorithm!

d. Summary

- K-means is actually EM for GMM, with fixed covariance matrices I

- It is a simplified E-step with delta function