Are Language Models Actually Useful for Time Series Forecasting?

Contents

- Abstract

- Introduction

- Related Work

- Experimental Setup

0. Abstract

LLMs are being applied to TS forecasting

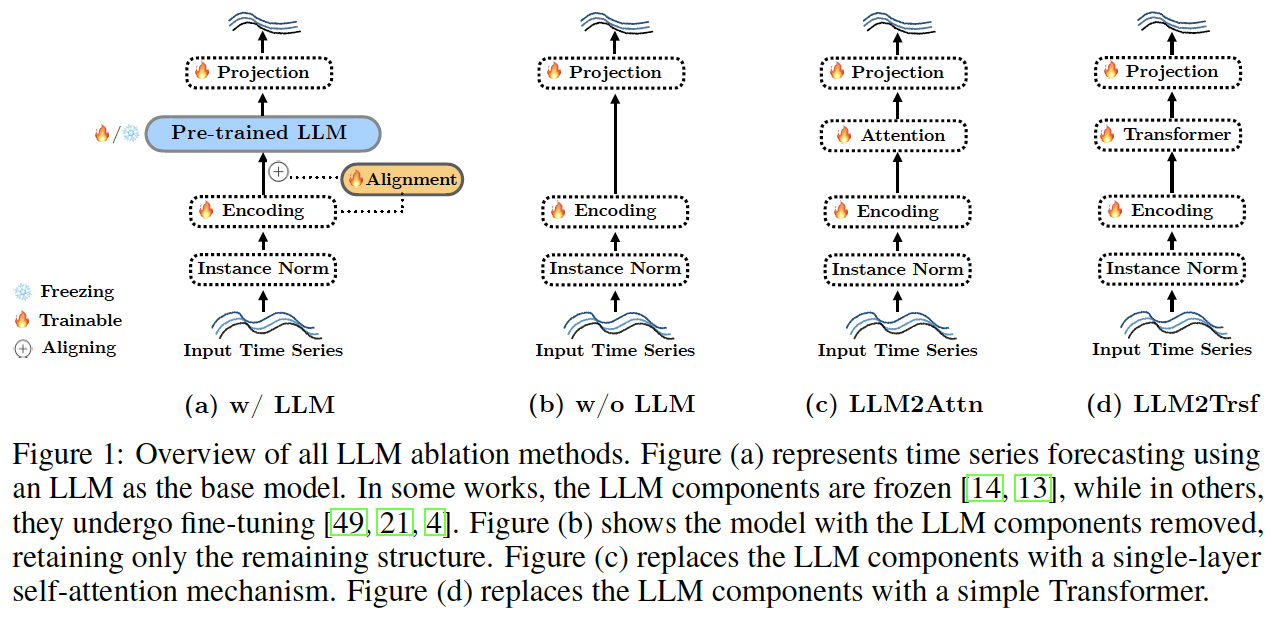

Ablation studies with 3 LLM-based TS forecasting models

\(\rightarrow\) Removing the LLM component (or replacing it with a basic attention layer) does not degrade performance!

The pretrained LLMs do not even perform better than models trained from scratch!

1. Introduction

Claim: Popular LLM-based time series forecasters perform the same as or worse than basic LLM-free ablations, yet require orders of magnitude more compute!

Experiment

- 3 LLM-based forecasting methods

- 8 standard benchmark datasets + 5 datasets from MONASH

2. Related Work

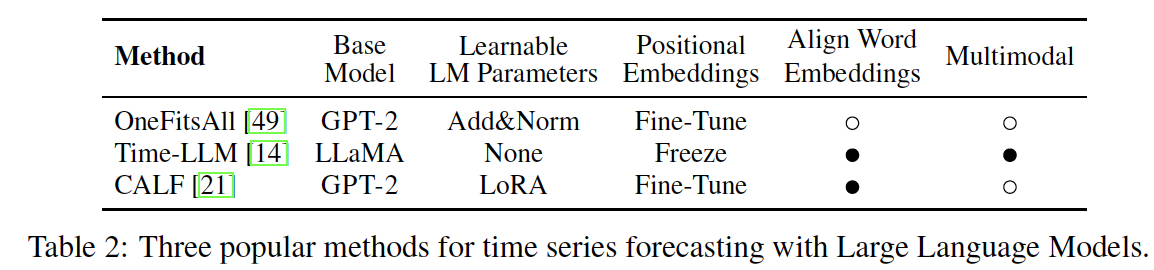

(1) TSF using LLMs

Chang et al. [5]

- Fine-tunes certain modules in GPT-2

- Goal: align pre-trained LLMs with TS data for forecasting tasks

Zhou et al. [49]

- Similar fine-tuning method, “OneFitsAll”

- TS forecasting with GPT-2.

Jin et al. [14]

- Reprogramming method to align the LLM’s word embeddings with TS embeddings

- Yields a good representation of TS data on LLaMA

(2) Encoders in LLM TS Models

LLM for text: needs “word tokens” (\(1 \times d\) vectors)

LLM for TS: needs “TS tokens” (\(1 \times d\) vectors)
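To make the "TS token" idea concrete, here is a minimal sketch of one possible encoder: cut the series into overlapping patches and map each patch to a \(1 \times d\) vector with a linear projection. Patching is only an assumption here, since these notes do not say which encoder each method uses. The names `patchify`, `project`, and `make_weights` are hypothetical, and the random weights stand in for a learned projection.

```python
import random

def patchify(series, patch_len, stride):
    """Split a 1-D series into (possibly overlapping) windows of length patch_len."""
    last_start = len(series) - patch_len
    return [series[i:i + patch_len] for i in range(0, last_start + 1, stride)]

def make_weights(patch_len, d, seed=0):
    """Random (patch_len x d) matrix; a stand-in for a learned projection."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(patch_len)]

def project(patch, weights):
    """Linear map from one patch to one 1 x d token."""
    d = len(weights[0])
    return [sum(patch[k] * weights[k][j] for k in range(len(patch))) for j in range(d)]

series = [float(t) for t in range(8)]           # toy series 0.0 .. 7.0
patches = patchify(series, patch_len=4, stride=2)
weights = make_weights(patch_len=4, d=6)
tokens = [project(p, weights) for p in patches]  # one 1 x 6 vector per patch
```

With these toy settings the series of length 8 becomes 3 tokens of dimension 6, which is the shape a backbone would then consume in place of word embeddings.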

(3) Small & Efficient Neural Forecasters

DLinear, FITS, …

3. Experimental Setup

(1) Reference Methods for LLM and TS

(2) Proposed Ablations

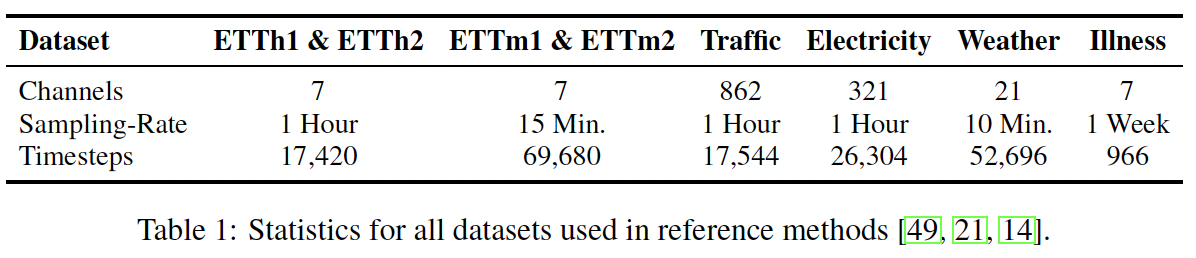
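The ablation idea from the abstract (remove the LLM component, or replace it with basic attention) can be sketched as a pipeline whose middle stage is swappable. This is a toy illustration under stated assumptions, not the paper's implementation. The function names are hypothetical, the attention layer uses the tokens themselves as queries, keys, and values with no learned projections, and the head weights are fixed by hand rather than trained.

```python
import math

def identity_backbone(tokens):
    """'No LLM' ablation: encoder tokens go straight to the output head."""
    return tokens

def softmax(scores):
    m = max(scores)                      # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_backbone(tokens):
    """'Basic attention' ablation: one scaled dot-product self-attention pass.
    Assumption: Q = K = V = tokens (no learned projections) to keep this short."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        out.append([sum(w[i] * tokens[i][j] for i in range(len(tokens)))
                    for j in range(d)])
    return out

def forecast(tokens, backbone, head_weights):
    """Encoder tokens -> backbone -> linear head over the flattened tokens."""
    hidden = backbone(tokens)
    flat = [x for tok in hidden for x in tok]
    return [sum(f * w for f, w in zip(flat, row)) for row in head_weights]

tokens = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]   # 3 toy TS tokens, d = 2
head = [[1.0] * 6, [0.5] * 6]                   # hand-set head, horizon = 2
pred_no_llm = forecast(tokens, identity_backbone, head)
pred_attn = forecast(tokens, attention_backbone, head)
```

Because the encoder and head are shared, any difference in forecast quality between such variants can be attributed to the backbone, which is the comparison the ablations rely on.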

(3) Datasets