Overdispersed Black-Box Variational Inference (2016)

Abstract

method to reduce the variance of MC estimator of the gradient in BBVI

- sample from the variational distn (X)

- use importance sampling

Proposed method : readily applied to any exponential family distn

Over-dispersed Importance sampling scheme provides lower variance than BBVI

1. Introduction

(1) Generative Probabilistic Modeling

- data generating process, through a joint distn of “observed data” & “latent variable”

- use an inference algorithm to calculate/approximate the posterior

(2) Variational inference

-

( traditional VI ) use coordinate-ascent to optimize its objective

( recent innovations ) use stochastic optimization

-

Must address the problem with MC estimates of gradient, which is HIGH VARIANCE

Several strategies to overcome this

- 1) Rao-Blackwellization

- 2) Reparameterization

- 3) Local expectations

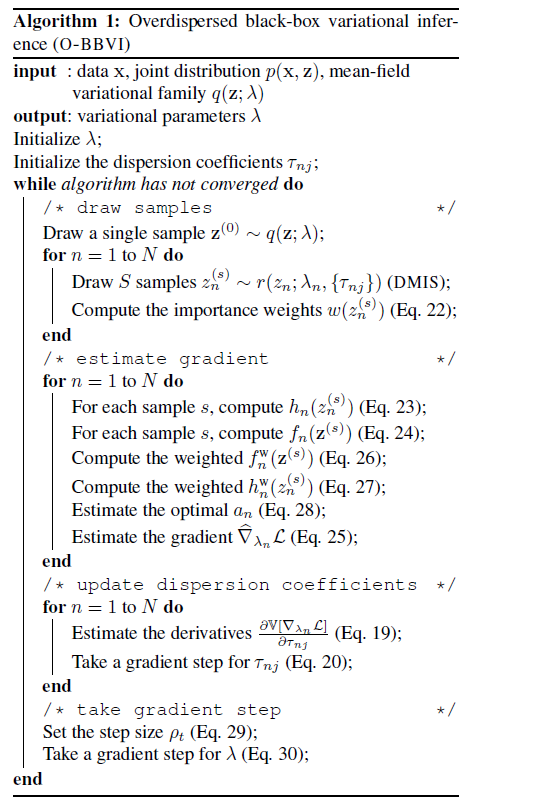

In this paper, suggests O-BBVI (Overdispersed BBVI)

-

new method for reducing the variance of MC gradients

-

main idea = “use IMPORTANCE SAMPLING” to estimate the gradient

( in order to construct a good proposal distn that is matched to the problem )

Technical summary

-

probabilistic model : \(p(x,z)\)

-

VI : use parameterized distn of latent variables \(q(z ; \lambda)\)

build on BBVI

-

\(L(\lambda)\) : variational objective ( =negative KL-div + constant )

gradient of \(L(\lambda)\) : \(\nabla_{\lambda} \mathcal{L}=\mathbb{E}_{q(\mathbf{z} ; \lambda)}[f(\mathbf{z})]\)

where \(f(\mathbf{z})=\nabla_{\lambda} \log q(\mathbf{z} ; \lambda)(\log p(\mathbf{x}, \mathbf{z})-\log q(\mathbf{z} ; \lambda))\)

-

Approximate the gradient with importance sampling!

Introduce a proposal distn : \(r(\mathbf{z} ; \lambda, \tau)\)

-

depends on both (1) variational param & (2) additional param

-

result : \(\nabla_{\lambda} \mathcal{L}=\mathbb{E}_{r(\mathbf{z} ; \lambda, \tau)}\left[f(\mathbf{z}) \frac{q(\mathbf{z} ; \lambda)}{r(\mathbf{z} ; \lambda, \tau)}\right]\).

2. Black-Box Variational Inference (BBVI)

variational family \(q(z ; \lambda)\)

-

\(q(\mathbf{z} ; \lambda)=g(\mathbf{z}) \exp \left\{\lambda^{\top} t(\mathbf{z})-A(\lambda)\right\}\).

- \(g(\mathbf{z})\) : base measure

- \(\lambda\) : natural parameters

- \(t(\mathbf{z})\) : sufficient statistics

- \(A(\lambda)\) : log normalizer

-

goal : minimize \(D_{\mathrm{KL}}(q(\mathbf{z} ; \lambda) \mid p(\mathbf{z} \mid \mathbf{x}))\)

( = maximize ELBO, \(\mathcal{L}(\lambda)=\mathbb{E}_{q(\mathbf{z} ; \lambda)}[\log p(\mathbf{x}, \mathbf{z})-\log q(\mathbf{z} ; \lambda)]\) )

with tractable variational family & conditionally conjugate model \(\rightarrow\) closed form!

But, not in real world…

BBVI :

-

uses “MC estimates of gradient” & requires few model-specific calculations

-

relies on log-derivative trick ( = REINFORCE or score function )

-

score function :

\(\begin{array}{l} \nabla_{\lambda} q(\mathbf{z} ; \lambda)=q(\mathbf{z} ; \lambda) \nabla_{\lambda} \log q(\mathbf{z} ; \lambda) \\ \mathbb{E}_{q(\mathbf{z} ; \lambda)}\left[\nabla_{\lambda} \log q(\mathbf{z} ; \lambda)\right]=0 \end{array}\).

-

BUT, it may have HIGH VARIANCE

-

uses 2 strategies :

- 1) control variates

- 2) Rao-Blackwellization

(1) Control Variates

Properties

- (1) r.v included in estimator

- (2) same expectation + reducing variance

- (3) many possible choices

Weighted Score function

-

not model dependent :)

-

each component \(n\) of the gradient in \(\nabla_{\lambda} \mathcal{L}=\mathbb{E}_{q(\mathbf{z} ; \lambda)}[f(\mathbf{z})]\)

can be rewritten as…..

\(\mathbb{E}_{q(\mathbf{z} ; \lambda)}\left[f_{n}(\mathbf{z})-a_{n} h_{n}(\mathbf{z})\right]\).

-

result )

\(a_{n}=\frac{\operatorname{Cov}\left(f_{n}(\mathbf{z}), h_{n}(\mathbf{z})\right)}{\operatorname{Var}\left(h_{n}(\mathbf{z})\right)}\).

In BBVI, separate set of samples from \(q(\mathbf{z} ; \lambda)\) are used to estimate \(a_n\)

(2) Rao-Blackwellization

-

reduce variance of r.v, by “replacing it with its conditional expectation”

-

In BBVI, each component of the gradient is Rao-Blackwellized with respect to variables outside of the Markov blanket of the involved hidden variable

-

MFVI ) \(q(\mathbf{z} ; \lambda)=\prod_{n} q\left(z_{n} ; \lambda_{n}\right)\)

\(\begin{aligned} \nabla_{\lambda_{n}} \mathcal{L}=\mathbb{E}_{q\left(\mathbf{z}_{(n)} ; \lambda_{(n)}\right)}[& \nabla_{\lambda_{n}} \log q\left(z_{n} ; \lambda_{n}\right) \left.\times\left(\log p_{n}\left(\mathrm{x}, \mathbf{z}_{(n)}\right)-\log q\left(z_{n} ; \lambda_{n}\right)\right)\right] \end{aligned}\).

3. Overdispersed Black-Box Variational Inference (O-BBVI)

O-BBVI : method for further reducing the variance

main idea : use IMPORTANCE SAMPLING

( does not sample from variational distribution \(q(\mathbf{z} ; \lambda)\) )

Takes samples from a proposal distn, \(r(\mathbf{z} ; \lambda, \tau)\)

-

importance weights = \(w(\mathbf{z})=q(\mathbf{z} ; \lambda) / r(\mathbf{z} ; \lambda, \tau)\)

\(\rightarrow\) resulting estimator is unbiased

Optimal Proposal

one that minimizes the variance of the estimator is not variational distribution \(q(\mathbf{z} ; \lambda)\) !

Rather, it is….

\(r_{n}^{\star}(\mathbf{z}) \propto q(\mathbf{z} ; \lambda) \mid f_{n}(\mathbf{z}) \mid\).

- but not tractable

- thus, in O-BBVI, build an alternative proposal based on overdispersed exponential families

Overdispersed Proposal

motivation : optimal distn \(r_{n}^{\star}(\mathbf{z}) \propto q(\mathbf{z} ; \lambda) \mid f_{n}(\mathbf{z}) \mid\) assigns higher probability density to the tails of \(q(\mathbf{z} ; \lambda)\)

Thus, design a proposal distn \(r(\mathbf{z} ; \lambda, \tau)\), that assigns higher mass to the tail!

\[r(\mathbf{z} ; \lambda, \tau)=g(\mathbf{z}, \tau) \exp \left\{\frac{\lambda^{\top} t(\mathbf{z})-A(\lambda)}{\tau}\right\}\]- where \(\tau \geq 1\) is the dispersion coefficient of the overdispersed distn

Then, the estimator of the gradient is …

\(\widehat{\nabla}_{\lambda}^{\mathrm{O}-\mathrm{BB}} \mathcal{L}=\frac{1}{S} \sum_{s} f\left(\mathbf{z}^{(s)}\right) \frac{q\left(\mathbf{z}^{(s)} ; \lambda\right)}{r\left(\mathbf{z}^{(s)}\right)}, \quad \mathbf{z}^{(s)} \stackrel{\text { iid }}{\sim} r(\mathbf{z} ; \lambda, \tau)\).

Desired properties of \(r(\mathbf{z} ; \lambda, \tau)\)

- 1) easy to sample from

- 2) adaptive

- 3) higher mass to the tails of \(q(\mathbf{z} ; \lambda)\)

Dispersion coefficient \(\tau\) can be itself adaptive to better match the optimal proposal at each iteration of variational optimization procedure.

3-1. Variance Reduction

O-BBVI vs BBVI

(1) BBVI

- \(\widehat{\nabla}_{\lambda}^{\mathrm{BB}} \mathcal{L}=\frac{1}{S} \sum_{s} f\left(\mathbf{z}^{(s)}\right), \quad \mathbf{z}^{(s)} \stackrel{\mathrm{iid}}{\sim} q(\mathbf{z} ; \lambda)\).

- \(\mathbb{V}\left[\widehat{\nabla}_{\lambda}^{\mathrm{BB}} \mathcal{L}\right]=\frac{1}{S} \mathbb{E}_{q(\mathbf{z} ; \lambda)}\left[f^{2}(\mathbf{z})\right]-\frac{1}{S}\left(\nabla_{\lambda} \mathcal{L}\right)^{2}\).

(2) O-BBVI

\(\begin{aligned} \mathbb{V}\left[\widehat{\nabla}_{\lambda}^{\mathrm{O}-\mathrm{BB}} \mathcal{L}\right] &=\frac{1}{S} \mathbb{E}_{r(\mathbf{z} ; \lambda, \tau)}\left[f^{2}(\mathbf{z}) \frac{q^{2}(\mathbf{z} ; \lambda)}{r^{2}(\mathbf{z} ; \lambda, \tau)}\right]-\frac{1}{S}\left(\nabla_{\lambda} \mathcal{L}\right)^{2} \\ &=\frac{1}{S} \mathbb{E}_{q(\mathbf{z} ; \lambda)}\left[f^{2}(\mathbf{z}) \frac{q(\mathbf{z} ; \lambda)}{r(\mathbf{z} ; \lambda, \tau)}\right]-\frac{1}{S}\left(\nabla_{\lambda} \mathcal{L}\right)^{2} \end{aligned}\).