Markovian Score Climbing ; Variational Inference with KL(p||q) ( NeurIPS 2020 )

Abstract

Modern Variational Inference (Modern VI)

- use stochastic gradients

- (traditionally) “exclusive” KL-divergence \(KL(q \mid \mid p)\)

- (recent) “inclusive” KL-divergence \(KL(p \mid \mid q)\)

Develops a simple algorithm for minimizing inclusive KL using stochastic gradients

method called “Markovian Score Climbing (MSC)” converges to local optimum of the inclusive KL

1. Introduction

VI : optimization-based… minimize KL-divergence

- exclusive KL : \(KL(q \mid \mid p)\)

- inclusive KL : \(KL(p \mid \mid q)\)

develop Markovian Score Climbing (MSC)

-

simple algorithm for minimizing inclusive KL-div

-

iteratively samples the Markvov chain \(\mathbf{z}[k]\)

& use samples to follow the score function \(\nabla \log q(\mathbf{z}[k])\)

Contributions

- 1) develop MSC

- 2) study systematic errors in existing methods

- 3) confirm convergence

2. Background

probabilistic model : \(p(z,x)\)

- latent variables \(z\) & data \(x\)

2-1. VI with \(KL(p \mid \mid q)\)

approximating distributions \(q(z ; \lambda)\)…

- goal : \(q(z ; \lambda) \approx p(z \mid x)\)

Most common VI : exclusive KL

- problem) the \(q\) ( optimized to minimize \(KL(q \mid \mid p)\) will UNDERESTIMATE the variance of posterior

- how to solve? use Inclusive KL

Inclusive KL

-

\(\mathrm{KL}(p(\mathrm{z} \mid \mathrm{x}) \| q(\mathrm{z} ; \lambda)):=\mathbb{E}_{p(\mathrm{z} \mid \mathrm{x})}[\log p(\mathrm{z} \mid \mathrm{x})-\log q(\mathrm{z} ; \lambda)]\) (eq 1)

-

minimizing (eq 1) = minimizing cross entropy \(L_{\mathrm{KL}}(\lambda)\)

\(\min _{\lambda} L_{\mathrm{KL}}(\lambda):=\min _{\lambda} \mathbb{E}_{p(\mathrm{z} \mid \mathrm{x})}[-\log q(\mathrm{z} ; \lambda)]\) (eq 2)

-

gradient of (eq 2) :

\(g_{\mathrm{KL}}(\lambda):=\nabla L_{\mathrm{KL}}(\lambda)=\mathbb{E}_{p(\mathrm{z} \mid \mathrm{x})}[-s(\mathrm{z} ; \lambda)]\).

where \(s(\mathbf{z} ; \lambda)\) is score function, \(s(\mathbf{z} ; \lambda):=\nabla_{\lambda} \log q(\mathbf{z} ; \lambda)\)

use SGD(Stochastic Gradient Descent) to solve (eq 2)

2-2. SGD with IS(Important Sampling)

SGD update : \(\lambda_{k}=\lambda_{k-1}-\varepsilon_{k} \widehat{g}_{\mathrm{KL}}\left(\lambda_{k-1}\right)\)

- converges to local optimum …..if

- condition 1) \(\mathbb{E}\left[\widehat{g}_{\mathrm{KL}}(\lambda)\right]=g_{\mathrm{KL}}(\lambda)\)

- condition 2) \(\sum_{k} \varepsilon_{k}^{2}<\infty, \sum_{k} \varepsilon_{k}=\infty\)

When the objective is the “exclusive \(KL(q \mid \mid p)\)“…. use

- 1) score-function gradient estimators

- 2) reparameterization gradient estimators

- 3) combination of 1) & 2)

But we are considering “inclusive \(KL(p \mid \mid q)\)”

-

gradient estimation is difficult…….

-

requires expectation w.r.t posterior \(p\)

-

ex) use IMPORTANCE SAMPLING (IS)

\(\nabla_{\lambda} L_{\mathrm{KL}}(\lambda) \propto-\mathbb{E}_{q(z ; \lambda)}\left[\frac{p(\mathrm{z}, \mathrm{x})}{q(\mathrm{z} ; \lambda)} s(\mathbf{z} ; \lambda)\right]\).

-

exx2) self-normalized IS

\(\nabla_{\lambda} L_{\mathrm{KL}}(\lambda) \approx-\sum_{s=1}^{S} \frac{w_{s}}{\sum_{r=1}^{S} w_{r}} s\left(\mathbf{z}^{s} ; \lambda\right)\) ,where

- \(w_{s}=p\left(z^{s}, \mathrm{x}\right) / q\left(z^{s} ; \lambda\right)\).

- \(\mathbf{z}^{s} \sim q\left(\mathbf{z}^{s} ; \lambda\right)\).

- \(s(\mathbf{z} ; \lambda)=\nabla_{\lambda} \log q(\mathbf{z} ; \lambda)\).

This is NOT unbiased….systematic error!

\(\rightarrow\) MSC addresses this shortcoming!

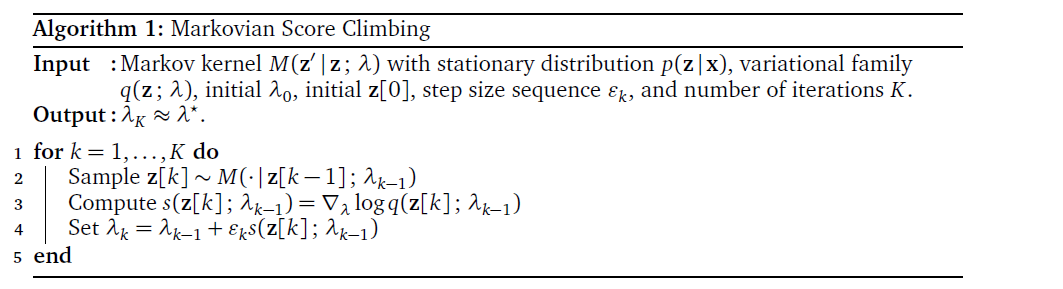

3. Markovian Score Climbing

Key Idea : use MCMC to estimate INTRACTABLE gradient

- under certain conditions, converge to local optimum of \(KL(p \mid \mid q)\)

3-1. SGD using MCMC

when using gradient descent to optimize inclusive KL…

\(\rightarrow\) must compute expectation of score function

( but intractable… propose to use Stochastic gradients )

MSC (Markovian Score Climbing)

- sample \(z[k-1]\) ( used to estimate gradient at step \(k-1\) ) is passed to Markov kernel \(\mathbf{z}[k] \sim M(\cdot \mid \mathbf{z}[k-1])\)

- move in an ascent direction of the score function \(s(\mathbf{z}[k] ; \lambda)\) (at each iteration)

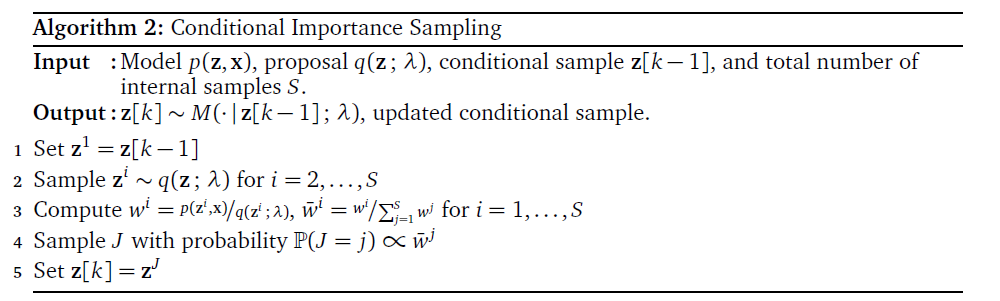

3-2. Conditional Importance Sampling

CIS = IS-based Markov Kernel

- stationary distribution : \(p(z \mid x)\)

- difference with classical IS?

- retain one of the samples from previous iteration ( = conditional sample )

3 steps of Iteration :

- step 1) generate new samples from proposal

- step 2) compute weights

- step 3) update the conditional sample for the next iteration

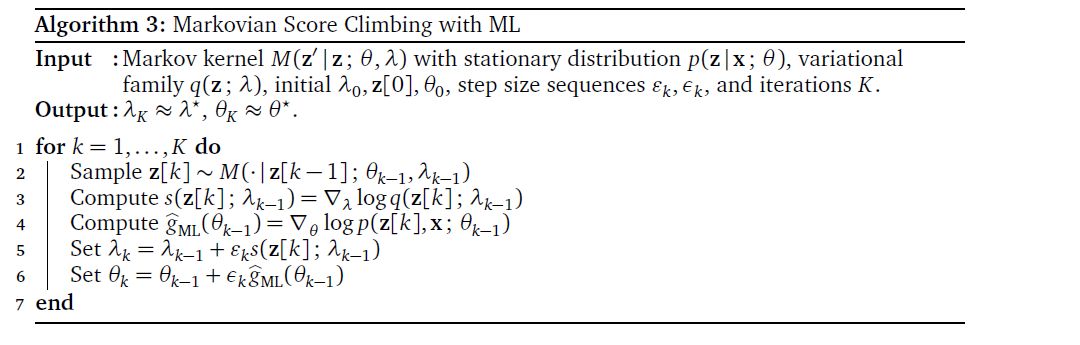

3-3. Model Parameters

unknown parameters \(\theta\)

-

solution : assign prior & include them in the latent variable \(z\)

& use methods above!

Markovian Score Climbing, based on Fisher identity of the gradient

- \(g_{\mathrm{ML}}(\theta)=\nabla_{\theta} \log p(\mathbf{x} ; \theta)=\nabla_{\theta} \log \int p(\mathbf{z}, \mathbf{x} ; \theta) \mathrm{d} \mathbf{z}=\mathbb{E}_{p_{\theta}(\mathbf{z} \mid \mathbf{x})}\left[\nabla_{\theta} \log p(\mathbf{z}, \mathbf{x} ; \theta)\right]\).