05. Transfer Learning - Fine Tuning

(Reference) Udemy - TF Developer in 2022

Contents

- Fine Tuning: One-Page Summary

- Model 0

- Putting Data Augmentation into the Model

- Model 1

- Model 2

- Model checkpoint

- Model 3 & 4

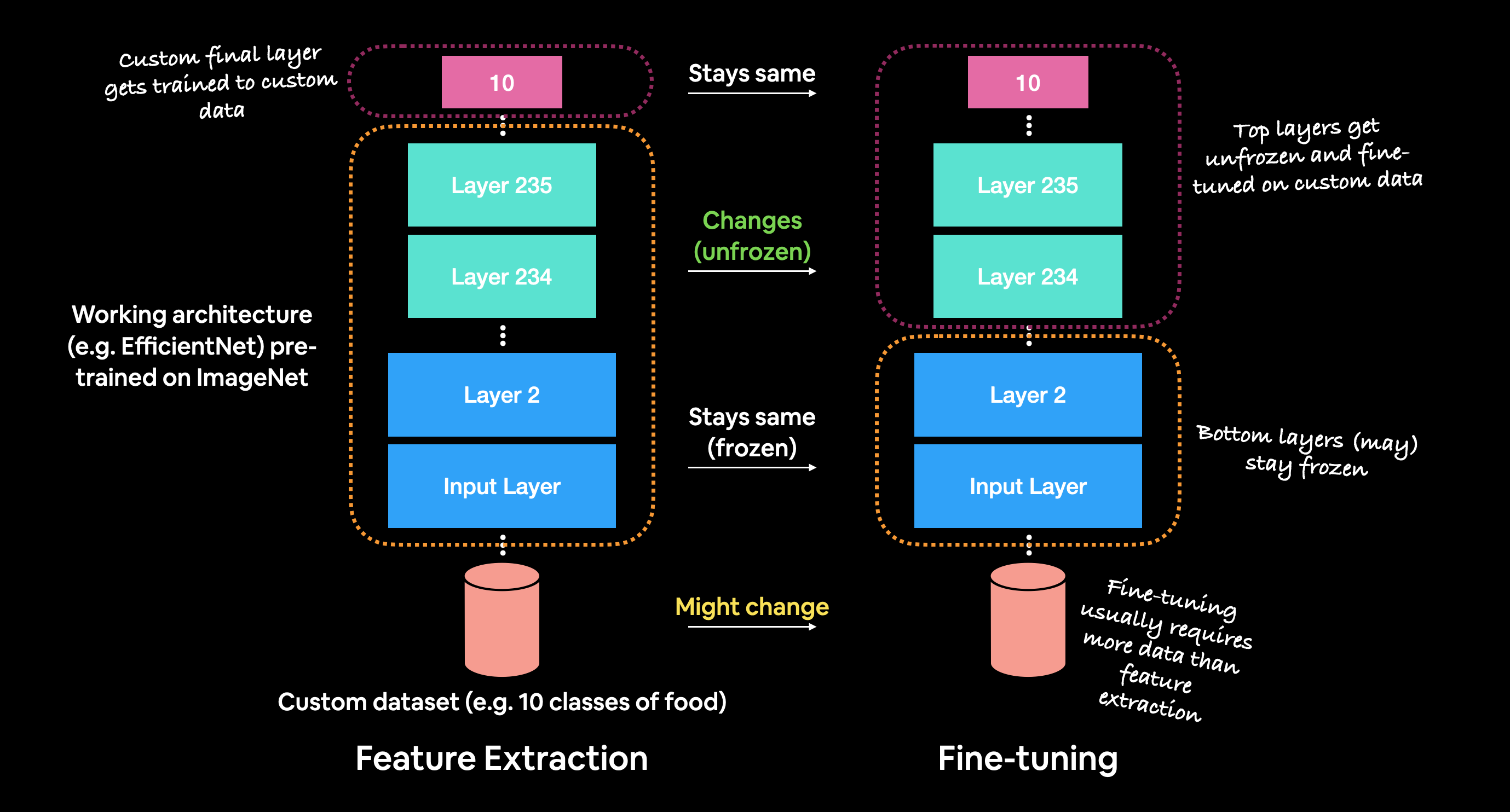

(1) Fine Tuning: One-Page Summary

Building several models

- Model 0: a transfer learning model using the Keras Functional API

- Model 1: a feature extraction transfer learning model on 1% of the data with data augmentation

- Model 2: a feature extraction transfer learning model on 10% of the data with data augmentation

- Model 3: a fine-tuned transfer learning model on 10% of the data

- Model 4: a fine-tuned transfer learning model on 100% of the data

(2) Model 0

Model 0: a transfer learning model using the Keras Functional API

- Load the data

IMG_SIZE = (224, 224) # define image size

train_data_10_percent = tf.keras.preprocessing.image_dataset_from_directory(directory=train_dir,

image_size=IMG_SIZE,

label_mode="categorical",

batch_size=32)

- Load the base model

- trainable=False: freeze the entire feature extraction (FE) part

- include_top=False: drop the original classifier head so that a custom output layer can be added

base_model = tf.keras.applications.EfficientNetB0(include_top=False)

base_model.trainable = False

- Stack the layers

inputs = tf.keras.layers.Input(shape=(224, 224, 3), name="input_layer")

#----------------------------------------------------------------------#

x = base_model(inputs)

x = tf.keras.layers.GlobalAveragePooling2D(name="global_average_pooling_layer")(x)

#----------------------------------------------------------------------#

outputs = tf.keras.layers.Dense(10, activation="softmax", name="output_layer")(x)

- Create & train the model

With tf.keras.Model, you specify the model's inputs and outputs directly.

model_0 = tf.keras.Model(inputs, outputs)

model_0.compile(loss='categorical_crossentropy',

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

history_10_percent = model_0.fit(train_data_10_percent,

epochs=5,

steps_per_epoch=len(train_data_10_percent),

validation_data=test_data_10_percent,

validation_steps=int(0.25 * len(test_data_10_percent)),

callbacks=[create_tensorboard_callback("transfer_learning",

"10_percent_feature_extract")])

- Check the model summary

model_0.summary()

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_layer (InputLayer) [(None, 224, 224, 3)] 0

_________________________________________________________________

efficientnetb0 (Functional) (None, None, None, 1280) 4049571

_________________________________________________________________

global_average_pooling_layer (None, 1280) 0

_________________________________________________________________

output_layer (Dense) (None, 10) 12810

=================================================================

Total params: 4,062,381

Trainable params: 12,810

Non-trainable params: 4,049,571

_________________________________________________________________
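The trainable parameter count in the summary can be checked by hand: only the Dense output layer is trainable, and it holds one weight per (pooled feature, class) pair plus one bias per class. A quick arithmetic check, using only numbers read off the summary above:

```python
# Numbers taken from the model summary above.
pooled_features = 1280          # output width of the global average pooling layer
num_classes = 10                # units in the Dense output layer
base_model_params = 4_049_571   # frozen EfficientNetB0 parameters

# Dense layer: weight matrix (1280 x 10) plus one bias per class.
dense_params = pooled_features * num_classes + num_classes
total_params = base_model_params + dense_params

print(dense_params, total_params)  # 12810 4062381
```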

Note: shape change after Global Average Pooling:

- input: (1, 4, 4, 3)

- output: (1, 3)

The two expressions below are equivalent:

tf.reduce_mean(input_tensor, axis=[1, 2])

tf.keras.layers.GlobalAveragePooling2D()(input_tensor)
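The same computation can be sketched without TensorFlow. The example below is a NumPy stand-in (not the Keras layer itself) that averages over the height and width axes of a tensor with the shape used in the note above; the random input is purely illustrative.

```python
import numpy as np

# Random tensor with the example shape: (batch, height, width, channels).
rng = np.random.default_rng(42)
input_tensor = rng.random((1, 4, 4, 3)).astype("float32")

# Global average pooling = mean over the height and width axes (1 and 2),
# leaving one averaged value per channel.
pooled = input_tensor.mean(axis=(1, 2))

print(input_tensor.shape)
print(pooled.shape)
```

Each of the 3 output values is the average of the 16 (4 x 4) spatial positions of one channel, which is why the 1280-channel feature map in Model 0 collapses to a vector of length 1280.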

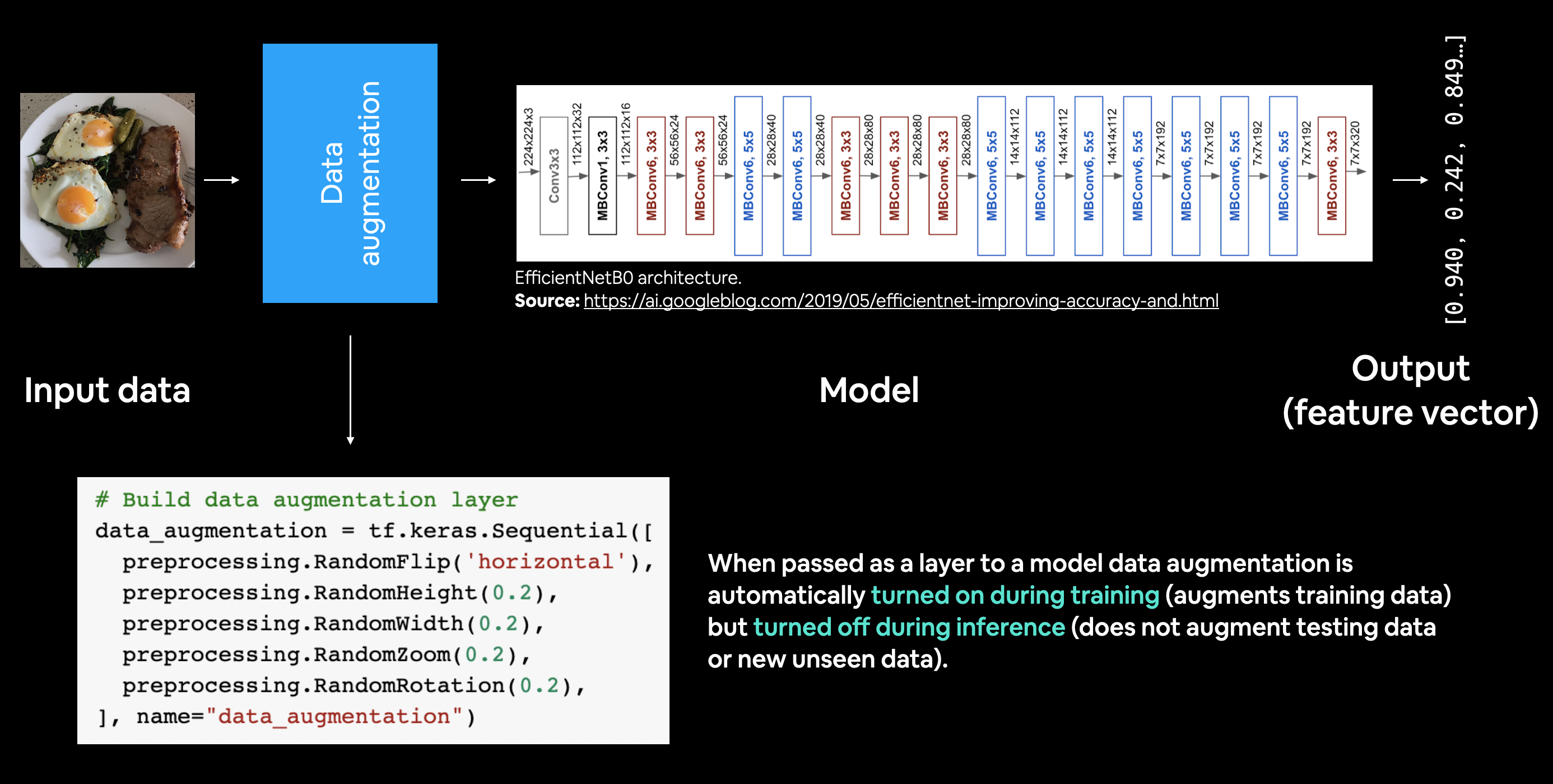

(3) Putting Data Augmentation into the Model

Instead of the ImageDataGenerator class,

use the tf.keras.layers.experimental.preprocessing module, so that augmentation becomes part of the model itself.

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras.layers.experimental import preprocessing

data_augmentation = tf.keras.Sequential([

preprocessing.RandomFlip("horizontal"),

preprocessing.RandomRotation(0.2),

preprocessing.RandomZoom(0.2),

preprocessing.RandomHeight(0.2),

preprocessing.RandomWidth(0.2),

], name ="data_augmentation")
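One consequence of building augmentation into the model is that it is only active in training mode; at inference time the layers pass images through unchanged. A minimal sketch of that behavior, assuming TensorFlow 2.6 or later, where these layers are also available directly under `tf.keras.layers`. `RandomHeight` and `RandomWidth` are left out here only to keep the output shape fixed, and the random input batch is hypothetical.

```python
import numpy as np
import tensorflow as tf

# Same idea as the block above, using the non-experimental layer paths
# (assumption: TF 2.6+).
data_augmentation = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),
    tf.keras.layers.RandomRotation(0.2),
    tf.keras.layers.RandomZoom(0.2),
], name="data_augmentation")

# Hypothetical batch of 2 random "images" with pixel values in [0, 255].
images = np.random.uniform(0, 255, size=(2, 224, 224, 3)).astype("float32")

augmented = data_augmentation(images, training=True)     # random transforms applied
passthrough = data_augmentation(images, training=False)  # augmentation is skipped

print(augmented.shape, passthrough.shape)
```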

(4) Model 1

Model 1: a feature extraction transfer learning model on 1% of the data with data augmentation

- Load the data

IMG_SIZE = (224, 224)

train_data_1_percent = tf.keras.preprocessing.image_dataset_from_directory(train_dir_1_percent,

label_mode="categorical",

batch_size=32,

image_size=IMG_SIZE)

- Load the base model

base_model = tf.keras.applications.EfficientNetB0(include_top=False)

base_model.trainable = False

- Stack the layers

input_shape = (224, 224, 3)

inputs = layers.Input(shape=input_shape, name="input_layer")

#----------------------------------------------------------------------#

x = data_augmentation(inputs)

x = base_model(x, training=False)

x = layers.GlobalAveragePooling2D(name="global_average_pooling_layer")(x)

#----------------------------------------------------------------------#

outputs = layers.Dense(10, activation="softmax", name="output_layer")(x)

- Create & train the model

model_1 = tf.keras.Model(inputs, outputs)

model_1.compile(loss="categorical_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

history_1_percent = model_1.fit(train_data_1_percent,

epochs=5,

steps_per_epoch=len(train_data_1_percent),

validation_data=test_data,

validation_steps=int(0.25* len(test_data)),

callbacks=[create_tensorboard_callback("transfer_learning", "1_percent_data_aug")])
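Both `steps_per_epoch` and `validation_steps` count batches, not images. A minimal sketch of the arithmetic behind the `fit()` call above; the image counts are hypothetical (the notes do not state the dataset sizes), and the batch size of 32 matches the data loading code.

```python
import math

# Hypothetical dataset sizes for illustration; the real counts are not given in these notes.
num_train_images = 70
num_test_images = 2500
batch_size = 32  # same batch size as in image_dataset_from_directory above

# len() of a batched dataset is the number of batches,
# including the last (possibly partial) batch.
train_batches = math.ceil(num_train_images / batch_size)
test_batches = math.ceil(num_test_images / batch_size)

steps_per_epoch = train_batches               # one pass over every training batch
validation_steps = int(0.25 * test_batches)   # validate on only 25% of the test batches

print(steps_per_epoch, test_batches, validation_steps)  # 3 79 19
```

Validating on a quarter of the test batches keeps each epoch fast; a full evaluation on all of the test data can still be run separately after training.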

(5) Model 2

Model 2: a feature extraction transfer learning model on 10% of the data with data augmentation

- Load the data

IMG_SIZE = (224, 224)

train_data_10_percent = tf.keras.preprocessing.image_dataset_from_directory(train_dir_10_percent,

label_mode="categorical",

image_size=IMG_SIZE)

The rest is the same as (4) Model 1 above.

(6) Model Checkpoint

checkpoint_path = "ten_percent_model_checkpoints_weights/checkpoint.ckpt"

checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_path,

save_weights_only=True,

save_best_only=False,

save_freq="epoch", # save every epoch

verbose=1)

Pass the checkpoint callback created this way in the callbacks list of model.fit().
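A minimal sketch of wiring such a callback into `fit()` and restoring the saved weights afterwards. The tiny model and random data are hypothetical stand-ins, not the EfficientNetB0 setup from these notes. The path here ends in `.weights.h5` rather than `.ckpt` because newer Keras releases (Keras 3) require that suffix when `save_weights_only=True`; with older TF 2.x versions the `.ckpt` path also works.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Tiny stand-in model and random data (hypothetical, for illustration only).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(loss="categorical_crossentropy",
              optimizer=tf.keras.optimizers.Adam(),
              metrics=["accuracy"])

x = np.random.rand(16, 4).astype("float32")
y = tf.keras.utils.to_categorical(np.random.randint(0, 3, size=16), num_classes=3)

# Weights-only checkpoint, written at the end of every epoch.
checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.weights.h5")
checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_path,
                                                         save_weights_only=True,
                                                         save_best_only=False,
                                                         save_freq="epoch",
                                                         verbose=0)

# The callback goes into the callbacks list of fit().
model.fit(x, y, epochs=2, verbose=0, callbacks=[checkpoint_callback])
weights_after_training = [w.copy() for w in model.get_weights()]

# Later, the saved weights can be loaded back into a model with the same architecture.
model.load_weights(checkpoint_path)
```

Because `save_best_only=False`, the file is overwritten each epoch, so loading it returns the model to its state at the end of the last completed epoch. This is useful as a starting point for the fine-tuning stage.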

(7) Model 3 & 4

# Unfreeze the whole base model first
base_model.trainable = True

# Then re-freeze every layer except the last 10
for layer in base_model.layers[:-10]:
    layer.trainable = False

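Everything else is the same, except that the model must be recompiled after changing trainable for the change to take effect.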
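The effect of the `[:-10]` slice can be seen without loading a real model. The sketch below uses a hypothetical `FakeLayer` stand-in with 15 layers (the real EfficientNetB0 has many more) to show which layers end up trainable.

```python
from dataclasses import dataclass


@dataclass
class FakeLayer:
    """Hypothetical stand-in for a Keras layer; only the `trainable` flag matters here."""
    name: str
    trainable: bool = False


# Pretend base model with 15 frozen layers.
base_layers = [FakeLayer(f"layer_{i}") for i in range(15)]

# Step 1: unfreeze everything (the effect of `base_model.trainable = True` on each layer).
for layer in base_layers:
    layer.trainable = True

# Step 2: re-freeze all layers except the last 10.
for layer in base_layers[:-10]:
    layer.trainable = False

trainable_names = [layer.name for layer in base_layers if layer.trainable]
print(len(trainable_names), trainable_names[0], trainable_names[-1])  # 10 layer_5 layer_14
```

Only the 10 layers closest to the output are updated during fine-tuning; the earlier layers keep their pretrained weights.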