[Paper Review] 18. Closed-Form Factorization of Latent Semantics in GANs

Contents

- Abstract

- Introduction

- SeFa

- Preliminaries

- Unsupervised Semantic Factorization

0. Abstract

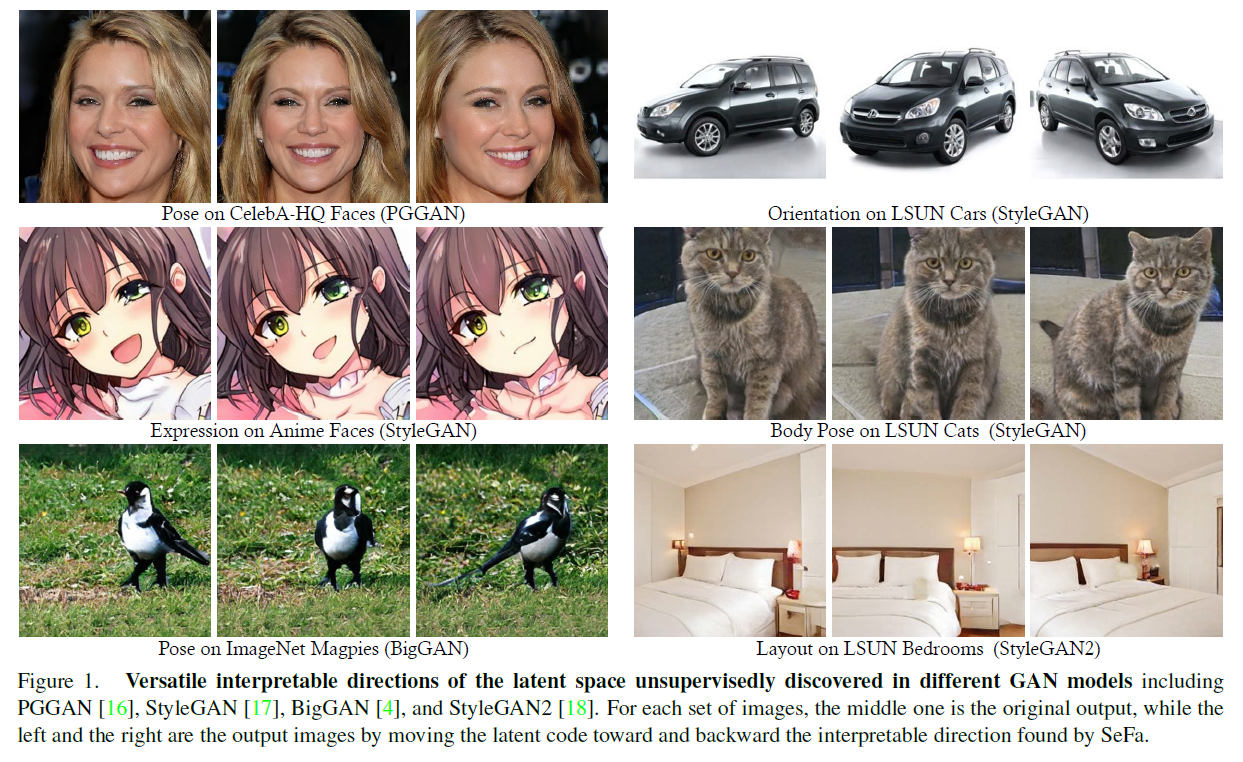

interpretable dimensions emerge in the latent space of GANs

goal : identify such latent dimensions for image editing

previous methods :

- 1) annotate a collection of synthesized samples

- 2) train linear classifiers in latent space

BUT… these require a clear definition of the target attribute & manual annotations!

This paper…

- examine the INTERNAL representation learned by GANs

  \(\rightarrow\) reveal the underlying variation factors in an unsupervised manner

- propose a closed-form factorization for latent semantic discovery

  - by directly decomposing the pre-trained weights

2. SeFa

SeFa

- closed-form method

- to discover the latent interpretable directions in GANs

- can identify semantically meaningful directions in latent space efficiently, by decomposing model weights

(1) Preliminaries

a) Generation Mechanism of GANs

image generation : \(\mathbf{I}=G(\mathbf{z})\)

\(G(\cdot)\) : generator

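- projects the starting latent space to the final image space “step by step”

- the first transformation step can be formulated as an affine transformation :

  \(G_{1}(\mathbf{z}) \triangleq \mathbf{y}=\mathbf{A z}+\mathbf{b}\)

  ( \(\mathbf{z} \in \mathbb{R}^{d}\) : latent code, \(\mathbf{y} \in \mathbb{R}^{m}\) : projected code, \(\mathbf{A} \in \mathbb{R}^{m \times d}\) : weight, \(\mathbf{b} \in \mathbb{R}^{m}\) : bias )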
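A minimal NumPy sketch of this first step, assuming small made-up dimensions and random values standing in for the learned weight and bias (the names `first_step`, `d`, `m` are illustrative, not from the paper):

```python
import numpy as np

def first_step(z, A, b):
    """First transformation step G_1(z) = A z + b."""
    return A @ z + b

rng = np.random.default_rng(0)
d, m = 4, 6                       # hypothetical latent / projected dimensions
A = rng.standard_normal((m, d))   # random stand-in for the learned weight
b = rng.standard_normal(m)        # random stand-in for the learned bias
z = rng.standard_normal(d)        # a sampled latent code

y = first_step(z, A, b)           # projected code, shape (m,)
```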

b) Manipulation Model in GAN Latent Space

Latent Space of GANs

- encodes rich semantic knowledge

Semantics

- can be further applied to image editing

- a certain direction \(\mathbf{n} \in \mathbb{R}^{d}\) in the latent space is used to represent a certain semantic concept

- after identifying a semantically meaningful direction, manipulation is done by moving the latent code along it :

  \(\operatorname{edit}(G(\mathbf{z}))=G\left(\mathbf{z}^{\prime}\right)=G(\mathbf{z}+\alpha \mathbf{n})\)

  ( \(\alpha\) : manipulation intensity )
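The manipulation model can be sketched as below. `toy_generator` is a hypothetical stand-in for a real pre-trained \(G\) (an affine step followed by `tanh`), and the direction `n` is random rather than a discovered semantic direction; only the form \(G(\mathbf{z}+\alpha \mathbf{n})\) comes from the notes.

```python
import numpy as np

def edit(G, z, n, alpha):
    """Return G(z + alpha * n): move z along direction n with intensity alpha."""
    n = n / np.linalg.norm(n)     # normalise the direction (assumption of this sketch)
    return G(z + alpha * n)

rng = np.random.default_rng(0)
d, m = 4, 8                       # hypothetical dimensions
A = rng.standard_normal((m, d))
b = rng.standard_normal(m)

def toy_generator(z):
    # hypothetical stand-in for G: affine first step followed by a nonlinearity
    return np.tanh(A @ z + b)

z = rng.standard_normal(d)
n = rng.standard_normal(d)

# sweep the intensity: negative, zero (no edit), positive
images = [edit(toy_generator, z, n, alpha) for alpha in (-3.0, 0.0, 3.0)]
```

With \(\alpha=0\) the output equals \(G(\mathbf{z})\); sweeping \(\alpha\) in both directions is the usual way to inspect what a direction changes.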

(2) Unsupervised Semantic Factorization

Goal : reveal EXPLANATORY factors from latent space of GANs

Start from the first projection step

- manipulation model can be simplified as…

  \(\mathbf{y}^{\prime} \triangleq G_{1}\left(\mathbf{z}^{\prime}\right) =G_{1}(\mathbf{z}+\alpha \mathbf{n}) =\mathbf{A z}+\mathbf{b}+\alpha \mathbf{A n}=\mathbf{y}+\alpha \mathbf{A} \mathbf{n}\)

- instance independent : the shift \(\alpha \mathbf{A n}\) does not depend on \(\mathbf{z}\)

- weight parameter \(\mathbf{A}\) should contain knowledge of image variation

  \(\rightarrow\) \(\therefore\) aim to discover important latent directions, by DECOMPOSING \(\mathbf{A}\)
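A quick numerical check of the instance-independence claim, assuming random stand-ins for \(\mathbf{A}\), \(\mathbf{b}\) and \(\mathbf{n}\): for several different latent codes, the change in the projected code is the same vector \(\alpha \mathbf{A n}\).

```python
import numpy as np

rng = np.random.default_rng(0)
d, m = 4, 6                       # hypothetical dimensions
A = rng.standard_normal((m, d))   # random stand-in for the learned weight
b = rng.standard_normal(m)
n = rng.standard_normal(d)
n /= np.linalg.norm(n)            # unit-norm direction
alpha = 2.0

def G1(z):
    return A @ z + b

# shift of the projected code for three different latent codes
shifts = []
for _ in range(3):
    z = rng.standard_normal(d)
    shifts.append(G1(z + alpha * n) - G1(z))

expected = alpha * (A @ n)        # the same shift for every z
```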

Propose an UNSUPERVISED approach for semantic factorization

( = independent of data sampling & model training )

- by solving \(\mathbf{n}^{*}=\underset{\left\{\mathbf{n} \in \mathbb{R}^{d}: \mathbf{n}^{T} \mathbf{n}=1\right\}}{\arg \max } \lVert \mathbf{A n} \rVert_{2}^{2}\)

- aims to find the directions that cause LARGE variations after the projection of \(\mathbf{A}\)

- ex) if some direction \(\mathbf{n}^{\prime}\) is projected to a zero-norm vector ( \(\mathbf{A n}^{\prime}=\mathbf{0}\) )…

  \(\rightarrow\) \(\mathbf{y}^{\prime}=\mathbf{y}\) ( unchanged! )
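To make the two extremes concrete, here is a small sketch assuming a random \(3 \times 5\) matrix for \(\mathbf{A}\) (so a null space exists). It takes the eigenvectors of \(\mathbf{A}^{T}\mathbf{A}\) with the smallest and largest eigenvalues and compares how much each one moves the projected code.

```python
import numpy as np

rng = np.random.default_rng(0)
m, d = 3, 5                       # hypothetical sizes with m < d, so A has a null space
A = rng.standard_normal((m, d))
b = rng.standard_normal(m)
z = rng.standard_normal(d)
alpha = 2.0

# eigh returns the eigenvalues of the symmetric matrix A^T A in ascending order
eigvals, eigvecs = np.linalg.eigh(A.T @ A)
n_null = eigvecs[:, 0]            # eigenvalue ~ 0, so A n_null ~ 0
n_top = eigvecs[:, -1]            # largest eigenvalue, maximises ||A n||^2

y = A @ z + b
y_null = A @ (z + alpha * n_null) + b
y_top = A @ (z + alpha * n_top) + b

change_null = np.linalg.norm(y_null - y)  # close to zero: y' = y
change_top = np.linalg.norm(y_top - y)    # alpha * sqrt(largest eigenvalue)
```

No other unit-norm direction produces a larger change than `n_top`, which is what the arg max above asks for.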

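Finding \(k\) most important directions \(\left\{\mathbf{n}_{1}, \mathbf{n}_{2}, \cdots, \mathbf{n}_{k}\right\}\)

- \(k=1\) : \(\mathbf{n}^{*}=\underset{\left\{\mathbf{n} \in \mathbb{R}^{d}: \mathbf{n}^{T} \mathbf{n}=1\right\}}{\arg \max } \lVert \mathbf{A n} \rVert_{2}^{2}\)

- general \(k\) : \(\mathbf{N}^{*}=\underset{\{\mathbf{N} \in \mathbb{R}^{d \times k}: \mathbf{n}_{i}^{T} \mathbf{n}_{i}=1 \forall i=1, \cdots, k\}}{\arg \max } \sum_{i=1}^{k} \lVert \mathbf{A} \mathbf{n}_{i} \rVert_{2}^{2}\), where \(\mathbf{N}=\left[\mathbf{n}_{1}, \mathbf{n}_{2}, \cdots, \mathbf{n}_{k}\right]\)

- introduce Lagrange multipliers \(\left\{\lambda_{i}\right\}_{i=1}^{k}\) : \(\mathbf{N}^{*}=\underset{\mathbf{N} \in \mathbb{R}^{d \times k}}{\arg \max } \sum_{i=1}^{k} \lVert \mathbf{A} \mathbf{n}_{i} \rVert_{2}^{2}-\sum_{i=1}^{k} \lambda_{i}\left(\mathbf{n}_{i}^{T} \mathbf{n}_{i}-1\right)\)

- setting the partial derivative w.r.t. each \(\mathbf{n}_{i}\) to zero : \(2 \mathbf{A}^{T} \mathbf{A n}_{i}-2 \lambda_{i} \mathbf{n}_{i}=0\), i.e. \(\mathbf{A}^{T} \mathbf{A n}_{i}=\lambda_{i} \mathbf{n}_{i}\)

- All possible solutions : eigenvectors of \(\mathbf{A}^{T} \mathbf{A}\)

  ( for a unit-norm eigenvector, \(\lVert \mathbf{A} \mathbf{n}_{i} \rVert_{2}^{2}=\mathbf{n}_{i}^{T} \mathbf{A}^{T} \mathbf{A} \mathbf{n}_{i}=\lambda_{i}\) )

- to get the maximum objective value & make \(\left\{\mathbf{n}_{i}\right\}_{i=1}^{k}\) distinguishable from each other…

  choose columns of \(\mathbf{N}\) as eigenvectors of \(\mathbf{A}^{T} \mathbf{A}\) associated with the \(k\) largest eigenvalues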
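The closed-form solution above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: `sefa_directions` is a hypothetical helper name, and `A` is a random matrix standing in for the weight of a pre-trained generator's first transformation.

```python
import numpy as np

def sefa_directions(A, k):
    """Top-k unit-norm eigenvectors of A^T A, as the columns of N (d x k)."""
    # eigh handles symmetric matrices and returns eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(A.T @ A)
    order = np.argsort(eigvals)[::-1][:k]     # indices of the k largest eigenvalues
    return eigvecs[:, order], eigvals[order]

rng = np.random.default_rng(0)
A = rng.standard_normal((16, 8))   # random stand-in weight, m = 16, d = 8
N, lams = sefa_directions(A, k=3)

# objective value sum_i ||A n_i||^2, which equals the sum of the chosen eigenvalues
objective = np.sum((A @ N) ** 2)
```

Each column of `N` can then be used as the direction \(\mathbf{n}\) in \(G(\mathbf{z}+\alpha \mathbf{n})\). No data sampling or training is involved, only one eigendecomposition of a \(d \times d\) matrix.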