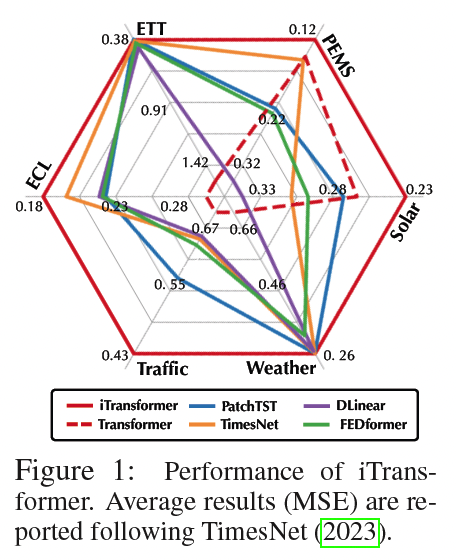

iTransformer: Inverted Transformers are Effective for Time Series Forecasting

Contents

- Abstract

Abstract

Predicting multivariate time series is crucial and demands precise modeling of intricate patterns, including inter-series dependencies and intra-series variations. The distinctive trend characteristics of each time series pose challenges, and existing methods that rely on basic moving average kernels may struggle with the non-linear structure and complex trends in real-world data. We therefore introduce a learnable decomposition strategy that captures dynamic trend information more reasonably. Additionally, we propose a dual attention module tailored to capture inter-series dependencies and intra-series variations simultaneously for better time series forecasting, implemented by channel-wise self-attention and autoregressive self-attention. To evaluate the effectiveness of our method, we conducted experiments across eight open-source datasets and compared it with state-of-the-art methods. The comparison results show that our Leddam (LEarnable Decomposition and Dual Attention Module) not only delivers significant advancements in predictive performance, but that the proposed decomposition strategy can also be plugged into other methods for a large performance boost, reducing their MSE by 11.87% to 48.56%.
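To make the contrast between a fixed moving average kernel and a learnable decomposition concrete, the following is a minimal sketch rather than the authors' implementation. It assumes the learnable strategy can be viewed as a sliding-window smoother whose weights are trainable parameters; the abstract does not specify the exact form. The names `decompose`, `softmax`, and `logits` are hypothetical, and the logits are set by hand here where a real model would learn them by gradient descent.

```python
import math


def softmax(logits):
    """Turn unconstrained parameters into positive weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decompose(series, weights):
    """Split a series into (trend, remainder) with a weighted sliding window.

    The series is padded by repeating its first and last values so the
    trend has the same length as the input. The kernel size must be odd.
    """
    k = len(weights)
    if k % 2 != 1:
        raise ValueError("kernel size must be odd")
    half = k // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    trend = [
        sum(w * padded[i + j] for j, w in enumerate(weights))
        for i in range(len(series))
    ]
    remainder = [x - t for x, t in zip(series, trend)]
    return trend, remainder


kernel_size = 5

# Baseline: a basic moving average, i.e. fixed uniform weights.
uniform = [1.0 / kernel_size] * kernel_size

# Assumed learnable variant: the weights come from trainable logits.
# Here they are hand-set to a Gaussian-like shape purely for illustration.
logits = [-((j - kernel_size // 2) ** 2) / 2.0 for j in range(kernel_size)]
learned = softmax(logits)

# Toy series: a linear trend plus an alternating +1/-1 fluctuation.
series = [float(t) + (1.0 if t % 2 == 0 else -1.0) for t in range(12)]

ma_trend, ma_rest = decompose(series, uniform)
lk_trend, lk_rest = decompose(series, learned)
```

In both cases the trend and remainder add back up to the original series; only the shape of the smoothing kernel differs. Making that shape trainable is what lets a decomposition adapt to each dataset instead of relying on one fixed window.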