Adaptive GCN (2018, 406)

Contents

- Abstract

- Introduction

- Method

- SGC-LL Layer

- AGCN Network

Abstract

GCN : generalization of classical CNNs to handle graph data

Most of data’s graph structures varies in both data size & connectivity

This paper proposes…

\(\rightarrow\) a generalized & flexible graph CNN, taking data of arbitrary graph structure as input

-

task-driven adaptive graph

-

to efficiently learn the graph, a distance metric learning is proposed

1. Introduction

previous GCN : inital graph structure is FIXED

Bottleneck of current GCN :

- restrict graph degree

- require identical graph structure shared among inputs

-

fixed graph ( without training )

- incapable of learning from topological structure

Proposal

propose a novel spectral GCN, that feed on original data of DIVERSE graph structures

train a “residual graph”, to discover the residual sub-structures that the intrinsic graph never includes

Direct leraning of Laplacian : \(O(N^2)\)

But, if we use supervised metric learning with Mahalanobis distance,

\(\rightarrow\) can reduce to \(O(d^2)\) ( or even \(O(d)\), assuming metric parameters are shared across samples )

Also, propose a re-parameterization on the feature domain

Summary

- construct unique graph Laplacian

- unique residual Laplacian matrix

- added on the initial adjacency matrix

- Learn a distance metric for graph update

- topological structures of graph are updated

- Feature embedding in convolution

- Accept flexible graph inputs

2. Method

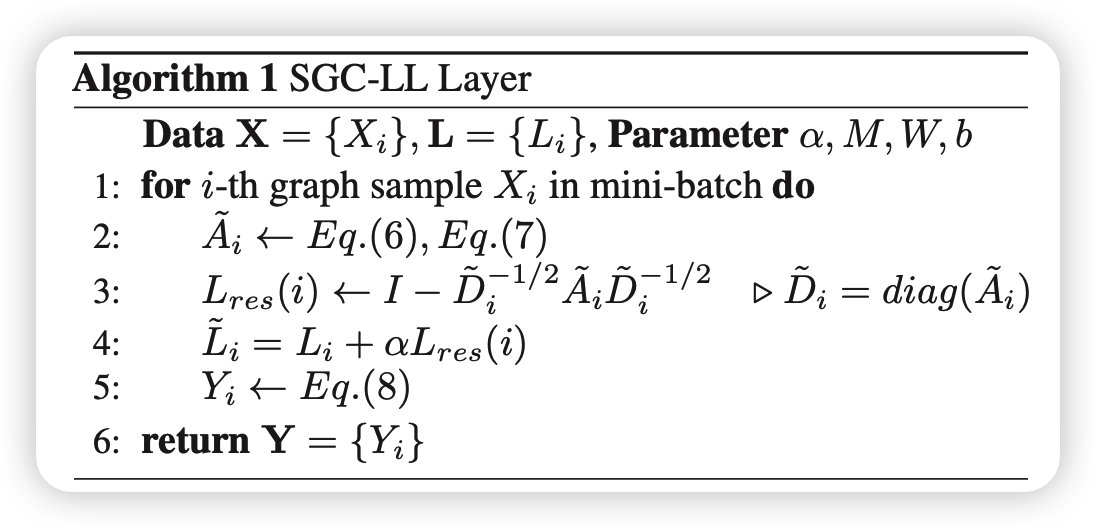

(1) SGC-LL Layer

parameterize the distance metrics

SGC-LL Layer ( Specral Graph Convolution layer with Graph Laplacian Learning )

- convolution with \(K\)-localized spectral filter, constructed on adaptive graph

a) Learning Graph Laplacian

Normalized graph Laplacian matrix :

-

\(L=I-D^{-1 / 2} A D^{-1 / 2}\).

- determines node-wise connectivity and the degree of vertices

- knowing \(L\) = knowing topological structure of \(G\)

Eigen decomposition

- Eigen vectors \(U\), formed by \(\left\{u_{s}\right\}_{s=0}^{N-1}, N\)

- use \(U\) as graph Fourier basis..

- graph Laplacian : diagonalized as \(L=U \Lambda U^{T}\)

- graph Fourier Transform : \(\hat{x}=U^{T} x\)

- spectral representation of graph topologi is \(\Lambda\)

- spectral filter : \(g_{\theta}(\Lambda)\)

- generates customized convolution kernel

- spectral filter : \(g_{\theta}(\Lambda)\)

( Previous Works )

Formulate \(g_{\theta}(\Lambda)\) as polynomial :

- \[g_{\theta}(\Lambda)=\sum_{k=0}^{K-1} \theta_{k} \Lambda^{k}\]

-

\(K\) localized kernel

-

parameterization by \(\theta_{k}\) …

\(\rightarrow\) restricts the flexibility of kernel

propose a NEW SPECTRAL FILTER

- Parameterizes \(L\), instead of \(\theta_{k}\)

- \(g_{\theta}(\Lambda)=\sum_{k=0}^{K-1}(\mathcal{F}(L, X, \Gamma))^{k}\).

- \(\mathcal{F}(L, X, \Gamma)\) outputs the spectrum of updated \(\tilde{L}\)

- SGC-LL layer :

- \(Y=U g_{\theta}(\Lambda) U^{T} X=U \sum_{k=0}^{K-1}(\mathcal{F}(L, X, \Gamma))^{k} U^{T} X\).

b) Training Metric for Graph Update

Generalized Mahalanobis distance :

- \(\mathbb{D}\left(x_{i}, x_{j}\right)=\sqrt{\left(x_{i}-x_{j}\right)^{T} M\left(x_{i}-x_{j}\right)}\).

with distance, calculate Gaussian kernel

- \(\mathbb{G}_{x_{i}, x_{j}}=\exp \left(-\mathbb{D}\left(x_{i}, x_{j}\right) /\left(2 \sigma^{2}\right)\right)\).

Normalize \(\mathbb{G}\) \(\rightarrow\) obtain DENSE adjacency matrix \(\hat{A}\)

c) Re-parameterization on feature Transform

CNN : output feature of conv layer = sum of all feature maps

However, on GCN, it is not explainable to create / train separate topological structure for different vertext features on the same graph

\(\rightarrow\) introduce a transform matrix & bias vector

\(Y=\left(U g_{\theta}(\Lambda) U^{T} X\right) W+b\).

d) Residual Graph Laplacian

no prior knowledge on distance metric….

in order to accelerate training…

assume that the optimal graph Laplacian is a “small shifting from the originla graph Laplacian”

- \(\hat{L}=L+\alpha L_{r e s}\).

(2) AGCN Network

SGC-LL layer + graph max pooling + graph gather

-

graph max pooling : feature-wise

- \(\hat{x}_{v}(j)=\max \left(\left\{x_{v}(j), x_{i}(j), \forall i \in N(v)\right\}\right)\).

-

graph gather

- element-wise sums up all the vertex feature vectors, as the representaiton of grpah data