MTGNN 코드 리뷰

( 논문 리뷰 : https://seunghan96.github.io/ts/gnn/ts41/ )

from layer import *

class gtnet(nn.Module):

def __init__(self, gcn_true, buildA_true, gcn_depth, num_nodes, device,

predefined_A=None, static_feat=None,

dropout=0.3, subgraph_size=20, node_dim=40,

dilation_exponential=1, conv_channels=32, residual_channels=32, skip_channels=64, end_channels=128,

seq_length=12, in_dim=2, out_dim=12, layers=3,

propalpha=0.05, tanhalpha=3, layer_norm_affline=True):

super(gtnet, self).__init__()

self.gcn_true = gcn_true

#==========================================================================================#

# [ 0. Basics ]

self.num_nodes = num_nodes # 시계열의 개수 ( = number of nodes )

self.idx = torch.arange(self.num_nodes).to(device) # 시계열 index ( = indicies of nodes )

self.layers = layers # layer 개수

self.dropout = dropout

self.seq_length = seq_length

self.start_conv = nn.Conv2d(in_channels=in_dim,

out_channels=residual_channels,

kernel_size=(1, 1)) # input 데이터를 가장먼저 latent space로 임베딩

# receptive field

kernel_size = 7

if dilation_exponential>1:

self.receptive_field = int(1+(kernel_size-1)*(dilation_exponential**layers-1)/(dilation_exponential-1))

else:

self.receptive_field = layers*(kernel_size-1) + 1

#==========================================================================================#

# [ 1. Graph Learning ]

self.buildA_true = buildA_true # A = adjacency matrix ( 구조를 learn할지, predefine할지 )

self.predefined_A = predefined_A

self.gc = graph_constructor(num_nodes, subgraph_size, node_dim, device, alpha=tanhalpha, static_feat=static_feat)

#==========================================================================================#

# [ 2. Temporal Convolution ]

self.filter_convs = nn.ModuleList() # Dilated Inception Layer들이 담길 곳 (1) -> value 역할

self.gate_convs = nn.ModuleList() # Dilated Inception Layer들이 담길 곳 (2) -> gate

self.residual_convs = nn.ModuleList() # GCN 안 쓸 경우의 CNN

self.skip_convs = nn.ModuleList() # skipconnection 하기 전에 shape 맞춰줄 1x1 conv

#==========================================================================================#

# [ 3. Graph Convolution ]

self.gconv1 = nn.ModuleList() # GCN (1) ... mixhop-prop으로 구성

self.gconv2 = nn.ModuleList() # GCN (2) ... mixhop-prop으로 구성

self.norm = nn.ModuleList() # GCN 통과 후, Layer Normalization 할지

#==========================================================================================#

# [ 4. 모듈 추가하기 ]

for i in range(1):

#----------------------------------------------------------------------------------------#

if dilation_exponential>1:

rf_size_i = int(1 + i*(kernel_size-1)*(dilation_exponential**layers-1)/(dilation_exponential-1))

else:

rf_size_i = i*layers*(kernel_size-1)+1

#----------------------------------------------------------------------------------------#

## layer 개수 만큼, 모듈들 추가하기

new_dilation = 1

for j in range(1,layers+1):

#----------------------------------------------------------------------------------------#

## receptive field 크기 정하기

if dilation_exponential > 1:

rf_size_j = int(rf_size_i + (kernel_size-1)*(dilation_exponential**j-1)/(dilation_exponential-1))

else:

rf_size_j = rf_size_i+j*(kernel_size-1)

#----------------------------------------------------------------------------------------#

## a) Temporal Convolution에 필요한 모듈 추가

self.filter_convs.append(dilated_inception(residual_channels, conv_channels, dilation_factor=new_dilation))

self.gate_convs.append(dilated_inception(residual_channels, conv_channels, dilation_factor=new_dilation))

self.residual_convs.append(nn.Conv2d(in_channels=conv_channels,

out_channels=residual_channels,

kernel_size=(1, 1)))

if self.seq_length>self.receptive_field:

self.skip_convs.append(nn.Conv2d(in_channels=conv_channels,

out_channels=skip_channels,

kernel_size=(1, self.seq_length-rf_size_j+1)))

else:

self.skip_convs.append(nn.Conv2d(in_channels=conv_channels,

out_channels=skip_channels,

kernel_size=(1, self.receptive_field-rf_size_j+1)))

#----------------------------------------------------------------------------------------#

## b) Graph Convolution에 필요한 모듈 추가

if self.gcn_true:

self.gconv1.append(mixprop(conv_channels, residual_channels, gcn_depth, dropout, propalpha))

self.gconv2.append(mixprop(conv_channels, residual_channels, gcn_depth, dropout, propalpha))

if self.seq_length>self.receptive_field:

self.norm.append(LayerNorm((residual_channels, num_nodes, self.seq_length - rf_size_j + 1),elementwise_affine=layer_norm_affline))

else:

self.norm.append(LayerNorm((residual_channels, num_nodes, self.receptive_field - rf_size_j + 1),elementwise_affine=layer_norm_affline))

#----------------------------------------------------------------------------------------#

new_dilation *= dilation_exponential

#==========================================================================================#

# [ 5. Final Convolution ]

self.end_conv_1 = nn.Conv2d(in_channels=skip_channels,

out_channels=end_channels,

kernel_size=(1,1),

bias=True)

self.end_conv_2 = nn.Conv2d(in_channels=end_channels,

out_channels=out_dim,

kernel_size=(1,1),

bias=True)

#==========================================================================================#

# [ 6. Skipconnection 전/후의 NN ]

## skip0 : 시작할 때

## skipE : 끝날 때

if self.seq_length > self.receptive_field:

self.skip0 = nn.Conv2d(in_channels=in_dim, out_channels=skip_channels, kernel_size=(1, self.seq_length), bias=True)

self.skipE = nn.Conv2d(in_channels=residual_channels, out_channels=skip_channels, kernel_size=(1, self.seq_length-self.receptive_field+1), bias=True)

else:

self.skip0 = nn.Conv2d(in_channels=in_dim, out_channels=skip_channels, kernel_size=(1, self.receptive_field), bias=True)

self.skipE = nn.Conv2d(in_channels=residual_channels, out_channels=skip_channels, kernel_size=(1, 1), bias=True)

def forward(self, input, idx=None):

seq_len = input.size(3)

assert seq_len==self.seq_length, 'input sequence length not equal to preset sequence length'

# 길이 안맞을 경우, padding

if self.seq_length<self.receptive_field:

input = nn.functional.pad(input,(self.receptive_field-self.seq_length,0,0,0))

#-----------------------------------------------------------------------------#

# [ 1. Graph Learning Module ]

# (1) adp = adapative adjacency matrix ()

if self.gcn_true:

if self.buildA_true:

if idx is None:

adp = self.gc(self.idx)

else:

adp = self.gc(idx)

else:

adp = self.predefined_A

#-----------------------------------------------------------------------------#

# [ 2. GCN & 3. TCN의 교대 반복 ]

x = self.start_conv(input)

skip = self.skip0(F.dropout(input, self.dropout, training=self.training))

for i in range(self.layers):

residual = x

filter = self.filter_convs[i](x)

filter = torch.tanh(filter)

gate = self.gate_convs[i](x)

gate = torch.sigmoid(gate)

x = filter * gate

x = F.dropout(x, self.dropout, training=self.training)

#------skipconnection에 추가할 부분--------#

s = x

s = self.skip_convs[i](s)

skip = s + skip

#--------------------------------------#

if self.gcn_true:

x = self.gconv1[i](x, adp)+self.gconv2[i](x, adp.transpose(1,0))

else:

x = self.residual_convs[i](x)

x = x + residual[:, :, :, -x.size(3):]

if idx is None:

x = self.norm[i](x,self.idx)

else:

x = self.norm[i](x,idx)

skip = self.skipE(x) + skip

x = F.relu(skip)

x = F.relu(self.end_conv_1(x))

x = self.end_conv_2(x)

return x

nconv: matrix multiplication

class nconv(nn.Module):

def __init__(self):

super(nconv,self).__init__()

def forward(self,x, A):

x = torch.einsum('ncwl,vw->ncvl',(x,A))

return x.contiguous()

dy_nconv: matrix multiplication ( 미분용 )

class dy_nconv(nn.Module):

def __init__(self):

super(dy_nconv,self).__init__()

def forward(self,x, A):

x = torch.einsum('ncvl,nvwl->ncwl',(x,A))

return x.contiguous()

linear: 1x1 convolution

class linear(nn.Module):

def __init__(self,c_in,c_out,bias=True):

super(linear,self).__init__()

self.mlp = torch.nn.Conv2d(c_in, c_out,

kernel_size=(1, 1),

padding=(0,0),

stride=(1,1), bias=bias)

def forward(self,x):

return self.mlp(x)

mixprop : Mix-hop Propagation

- \(\mathbf{H}^{(k)}=\beta \mathbf{H}_{i n}+(1-\beta) \tilde{\mathbf{A}} \mathbf{H}^{(k-1)}\).

class mixprop(nn.Module):

def __init__(self,c_in,c_out,gdep,dropout,alpha):

super(mixprop, self).__init__()

self.nconv = nconv()

self.mlp = linear((gdep+1)*c_in,c_out)

self.gdep = gdep

self.dropout = dropout

self.alpha = alpha

def forward(self,x,adj):

#-------------------------------------------------------------------#

adj = adj + torch.eye(adj.size(0)).to(x.device) # self loop 더하기

d = adj.sum(1) # rowsum ( 즉, degree )

adj = adj / d.view(-1, 1) # normalize

#-------------------------------------------------------------------#

h = x # h = 1st hidden vector

out = [h] # 중간 결과물들 저장

for i in range(self.gdep):

h = self.alpha*x + (1-self.alpha)*self.nconv(h,adj)

out.append(h)

ho = torch.cat(out,dim=1) # 결과물들 합치기

ho = self.mlp(ho)

#-------------------------------------------------------------------#

return ho

dy_mixprop : Mix-hop Propagation ( 미분 버전 )

class dy_mixprop(nn.Module):

def __init__(self,c_in,c_out,gdep,dropout,alpha):

super(dy_mixprop, self).__init__()

self.nconv = dy_nconv()

self.mlp1 = linear((gdep+1)*c_in,c_out)

self.mlp2 = linear((gdep+1)*c_in,c_out)

self.gdep = gdep

self.dropout = dropout

self.alpha = alpha

self.lin1 = linear(c_in,c_in)

self.lin2 = linear(c_in,c_in)

def forward(self,x):

#adj = adj + torch.eye(adj.size(0)).to(x.device)

#d = adj.sum(1)

x1 = torch.tanh(self.lin1(x))

x2 = torch.tanh(self.lin2(x))

adj = self.nconv(x1.transpose(2,1),x2)

adj0 = torch.softmax(adj, dim=2)

adj1 = torch.softmax(adj.transpose(2,1), dim=2)

h = x

out = [h]

for i in range(self.gdep):

h = self.alpha*x + (1-self.alpha)*self.nconv(h,adj0)

out.append(h)

ho = torch.cat(out,dim=1)

ho1 = self.mlp1(ho)

h = x

out = [h]

for i in range(self.gdep):

h = self.alpha * x + (1 - self.alpha) * self.nconv(h, adj1)

out.append(h)

ho = torch.cat(out, dim=1)

ho2 = self.mlp2(ho)

return ho1+ho2

dilated_1D : 1개의 dilated 1D conv

- 다양한 크기의 kernel로 생성한 뒤 합칠 것!

class dilated_1D(nn.Module):

def __init__(self, cin, cout, dilation_factor=2):

super(dilated_1D, self).__init__()

self.tconv = nn.ModuleList()

self.kernel_set = [2,3,6,7]

self.tconv = nn.Conv2d(cin,cout,

kernel_size=(1,7),

dilation=(1,dilation_factor))

def forward(self,input):

x = self.tconv(input)

return x

dilated_inception : 4가지 커널 size를 가지고 dilated inception 모듈 생성

- 각각의 결과들을 전부 concatenate한다

class dilated_inception(nn.Module):

def __init__(self, cin, cout, dilation_factor=2):

super(dilated_inception, self).__init__()

self.tconv = nn.ModuleList()

self.kernel_set = [2,3,6,7]

cout = int(cout/len(self.kernel_set))

for kern in self.kernel_set:

self.tconv.append(nn.Conv2d(cin,cout,(1,kern),dilation=(1,dilation_factor)))

def forward(self,input):

x = []

for i in range(len(self.kernel_set)):

x.append(self.tconv[i](input))

for i in range(len(self.kernel_set)):

x[i] = x[i][...,-x[-1].size(3):]

x = torch.cat(x,dim=1)

return x

graph_constructor : graph learning을 위한 모듈

\(\begin{aligned} &\mathbf{M}_{1}=\tanh \left(\alpha \mathbf{E}_{1} \boldsymbol{\Theta}_{1}\right) \\ &\mathbf{M}_{2}=\tanh \left(\alpha \mathbf{E}_{2} \boldsymbol{\Theta}_{2}\right) \\ &\mathbf{A}=\operatorname{ReLU}\left(\tanh \left(\alpha\left(\mathbf{M}_{1} \mathbf{M}_{2}^{T}-\mathbf{M}_{2} \mathbf{M}_{1}^{T}\right)\right)\right) \\ &\text { for } i=1,2, \cdots, N \\ &\mathbf{i d x}=\operatorname{argtopk}(\mathbf{A}[i,:]) \\ &\mathbf{A}[i,-\mathbf{i d x}]=0 \end{aligned}\).

class graph_constructor(nn.Module):

def __init__(self, nnodes, k, dim, device, alpha=3, static_feat=None):

super(graph_constructor, self).__init__()

self.nnodes = nnodes # number of node

self.device = device

self.k = k # 상위 k개 ( k+1등부터는 연결 끊음 (=0) )

self.dim = dim # embedding 차원

self.alpha = alpha

self.static_feat = static_feat

#-------------------------------------------------------#

# ( prior로 static feature (O) 경우 )

if static_feat is not None:

xd = static_feat.shape[1]

self.lin1 = nn.Linear(xd, dim)

self.lin2 = nn.Linear(xd, dim)

# ( prior로 static feature (X) 경우 )

else:

self.emb1 = nn.Embedding(nnodes, dim)

self.emb2 = nn.Embedding(nnodes, dim)

self.lin1 = nn.Linear(dim,dim)

self.lin2 = nn.Linear(dim,dim)

#-------------------------------------------------------#

def forward(self, idx):

#-------------------------------------------------------#

# static feature (O) 경우

if self.static_feat is None:

M1 = self.emb1(idx)

M2 = self.emb2(idx)

# static feature (X) 경우

else:

M1 = self.static_feat[idx,:]

M2 = M1

#-------------------------------------------------------#

# Adjacency matrix 계산

M1 = torch.tanh(self.alpha*self.lin1(M1))

M2 = torch.tanh(self.alpha*self.lin2(M2))

A_temp = torch.mm(M1, M2.transpose(1,0))-torch.mm(M2, M1.transpose(1,0))

A = F.relu(torch.tanh(self.alpha*A_temp))

#-------------------------------------------------------#

# Top K에 못드는거 masking

mask = torch.zeros(idx.size(0), idx.size(0)).to(self.device)

mask.fill_(float('0'))

s1,t1 = (A + torch.rand_like(A)*0.01).topk(self.k,1)

mask.scatter_(1,t1,s1.fill_(1))

#-------------------------------------------------------#

# 최종 Adjacency matrix

A = A*mask

return A

def fullA(self, idx): # masking 없는 부분

if self.static_feat is None:

M1 = self.emb1(idx)

M2 = self.emb2(idx)

else:

M1 = self.static_feat[idx,:]

M2 = M1

#-------------------------------------------------------#

# Adjacency matrix 계산

M1 = torch.tanh(self.alpha*self.lin1(M1))

M2 = torch.tanh(self.alpha*self.lin2(M2))

A_temp = torch.mm(M1, M2.transpose(1,0))-torch.mm(M2, M1.transpose(1,0))

A = F.relu(torch.tanh(self.alpha*A_temp))

return A

LayerNorm : Layer Normalization

class LayerNorm(nn.Module):

__constants__ = ['normalized_shape', 'weight', 'bias', 'eps', 'elementwise_affine']

def __init__(self, normalized_shape, eps=1e-5, elementwise_affine=True):

super(LayerNorm, self).__init__()

if isinstance(normalized_shape, numbers.Integral):

normalized_shape = (normalized_shape,)

self.normalized_shape = tuple(normalized_shape)

self.eps = eps

self.elementwise_affine = elementwise_affine

if self.elementwise_affine:

self.weight = nn.Parameter(torch.Tensor(*normalized_shape))

self.bias = nn.Parameter(torch.Tensor(*normalized_shape))

else:

self.register_parameter('weight', None)

self.register_parameter('bias', None)

self.reset_parameters()

def reset_parameters(self):

if self.elementwise_affine:

init.ones_(self.weight)

init.zeros_(self.bias)

def forward(self, input, idx):

if self.elementwise_affine:

return F.layer_norm(input, tuple(input.shape[1:]), self.weight[:,idx,:], self.bias[:,idx,:], self.eps)

else:

return F.layer_norm(input, tuple(input.shape[1:]), self.weight, self.bias, self.eps)

def extra_repr(self):

return '{normalized_shape}, eps={eps}, ' \

'elementwise_affine={elementwise_affine}'.format(**self.__dict__)

trainer.py

class Trainer():

def __init__(self, model, lrate, wdecay, clip, step_size, seq_out_len, scaler, device, cl=True):

self.scaler = scaler

self.model = model

self.model.to(device)

self.optimizer = optim.Adam(self.model.parameters(), lr=lrate, weight_decay=wdecay)

self.loss = util.masked_mae

self.clip = clip

self.step = step_size

self.iter = 1

self.task_level = 1

self.seq_out_len = seq_out_len

self.cl = cl

def train(self, input, real_val, idx=None):

self.model.train()

self.optimizer.zero_grad()

output = self.model(input, idx=idx)

output = output.transpose(1,3)

real = torch.unsqueeze(real_val,dim=1)

predict = self.scaler.inverse_transform(output)

if self.iter%self.step==0 and self.task_level<=self.seq_out_len:

self.task_level +=1

if self.cl:

loss = self.loss(predict[:, :, :, :self.task_level], real[:, :, :, :self.task_level], 0.0)

else:

loss = self.loss(predict, real, 0.0)

loss.backward()

if self.clip is not None:

torch.nn.utils.clip_grad_norm_(self.model.parameters(), self.clip)

self.optimizer.step()

# mae = util.masked_mae(predict,real,0.0).item()

mape = util.masked_mape(predict,real,0.0).item()

rmse = util.masked_rmse(predict,real,0.0).item()

self.iter += 1

return loss.item(),mape,rmse

def eval(self, input, real_val):

self.model.eval()

output = self.model(input)

output = output.transpose(1,3)

real = torch.unsqueeze(real_val,dim=1)

predict = self.scaler.inverse_transform(output)

loss = self.loss(predict, real, 0.0)

mape = util.masked_mape(predict,real,0.0).item()

rmse = util.masked_rmse(predict,real,0.0).item()

return loss.item(),mape,rmse

class Optim(object):

def _makeOptimizer(self):

if self.method == 'sgd':

self.optimizer = optim.SGD(self.params, lr=self.lr, weight_decay=self.lr_decay)

elif self.method == 'adagrad':

self.optimizer = optim.Adagrad(self.params, lr=self.lr, weight_decay=self.lr_decay)

elif self.method == 'adadelta':

self.optimizer = optim.Adadelta(self.params, lr=self.lr, weight_decay=self.lr_decay)

elif self.method == 'adam':

self.optimizer = optim.Adam(self.params, lr=self.lr, weight_decay=self.lr_decay)

else:

raise RuntimeError("Invalid optim method: " + self.method)

def __init__(self, params, method, lr, clip, lr_decay=1, start_decay_at=None):

self.params = params # careful: params may be a generator

self.last_ppl = None

self.lr = lr

self.clip = clip

self.method = method

self.lr_decay = lr_decay

self.start_decay_at = start_decay_at

self.start_decay = False

self._makeOptimizer()

def step(self):

grad_norm = 0

if self.clip is not None:

torch.nn.utils.clip_grad_norm_(self.params, self.clip)

self.optimizer.step()

return grad_norm

# decay learning rate if val perf does not improve or we hit the start_decay_at limit

def updateLearningRate(self, ppl, epoch):

if self.start_decay_at is not None and epoch >= self.start_decay_at:

self.start_decay = True

if self.last_ppl is not None and ppl > self.last_ppl:

self.start_decay = True

if self.start_decay:

self.lr = self.lr * self.lr_decay

print("Decaying learning rate to %g" % self.lr)

#only decay for one epoch

self.start_decay = False

self.last_ppl = ppl

self._makeOptimizer()

util.py

normal_std (x)

- x의 standard deviation 구하기

def normal_std(x):

return x.std() * np.sqrt((len(x) - 1.) / (len(x)))

StandardScaler

class StandardScaler():

def __init__(self, mean, std):

self.mean = mean

self.std = std

def transform(self, data):

return (data - self.mean) / self.std

def inverse_transform(self, data):

return (data * self.std) + self.mean

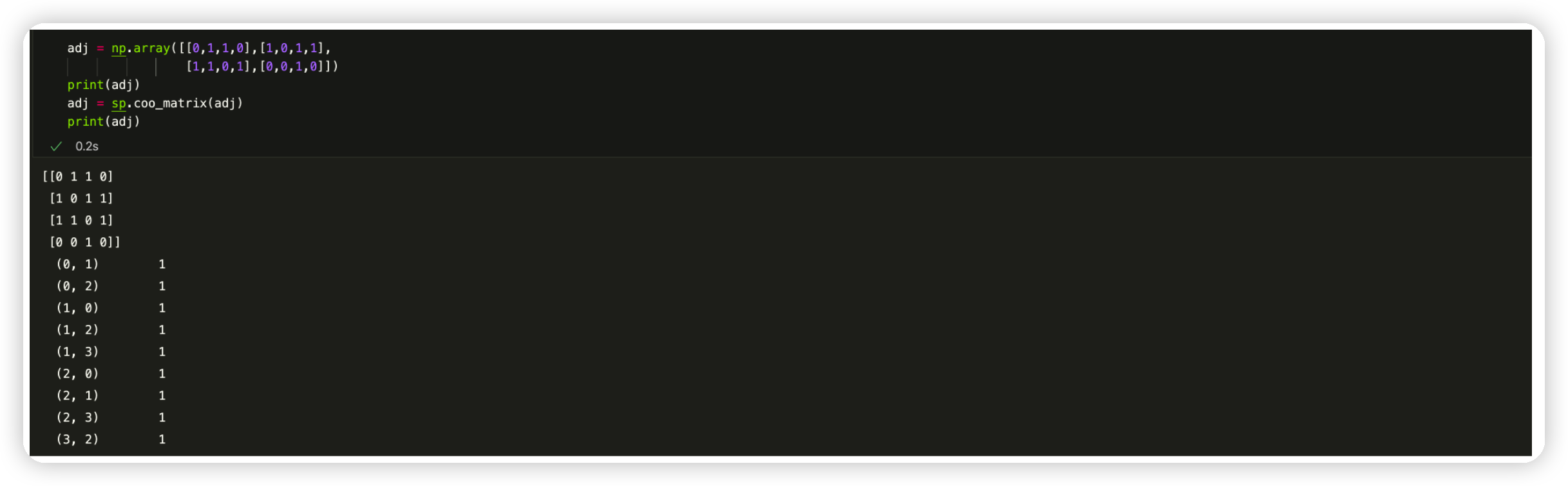

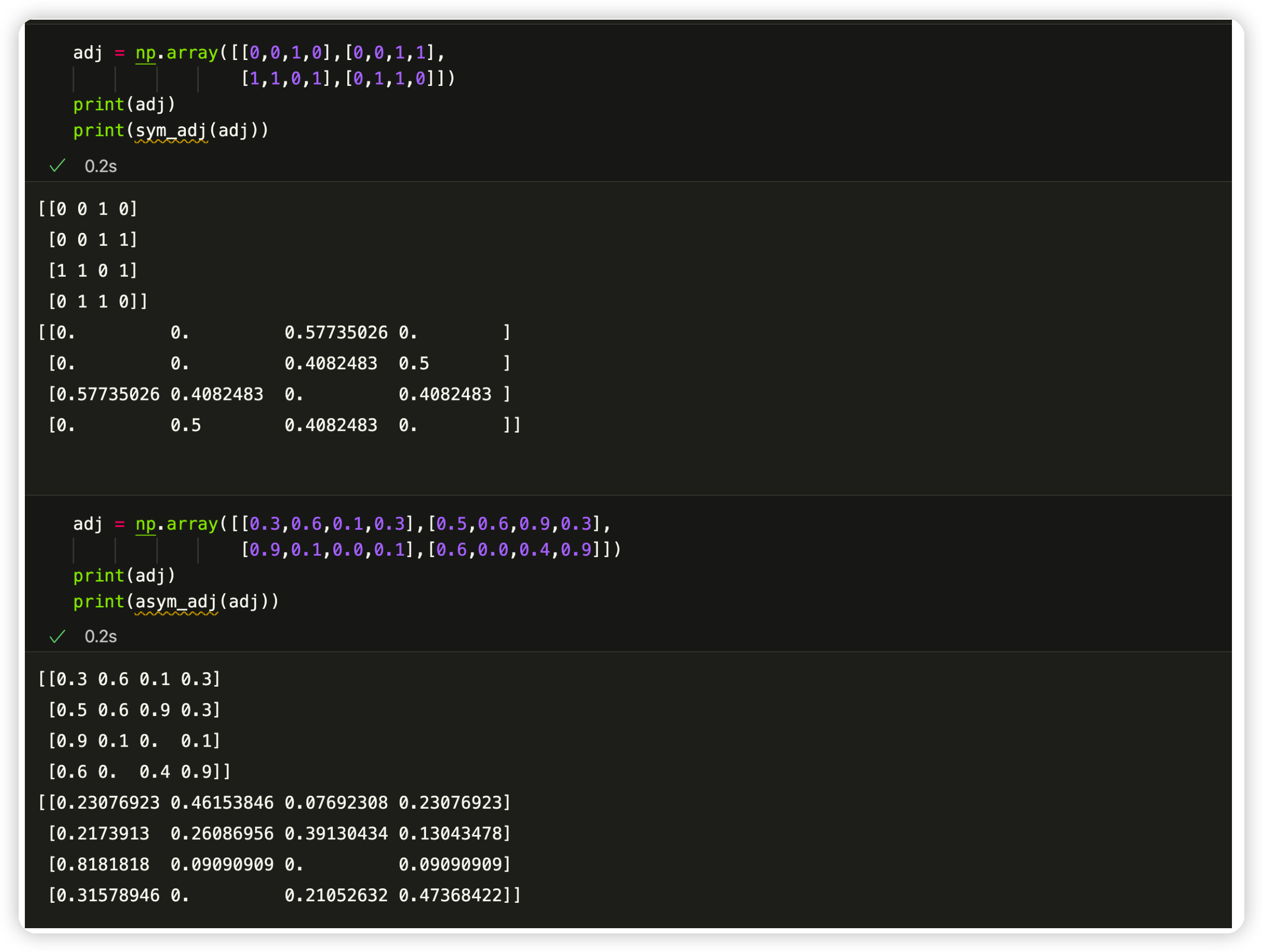

sym_adj&asym_adj

import scipy.sparse as sp

def sym_adj(adj):

"""Symmetrically normalize adjacency matrix."""

adj = sp.coo_matrix(adj)

rowsum = np.array(adj.sum(1))

d_inv_sqrt = np.power(rowsum, -0.5).flatten()

d_inv_sqrt[np.isinf(d_inv_sqrt)] = 0.

d_mat_inv_sqrt = sp.diags(d_inv_sqrt)

return adj.dot(d_mat_inv_sqrt).transpose().dot(d_mat_inv_sqrt).astype(np.float32).todense()

def asym_adj(adj):

"""Asymmetrically normalize adjacency matrix."""

adj = sp.coo_matrix(adj)

rowsum = np.array(adj.sum(1)).flatten()

d_inv = np.power(rowsum, -1).flatten()

d_inv[np.isinf(d_inv)] = 0.

d_mat= sp.diags(d_inv)

return d_mat.dot(adj).astype(np.float32).todense()

Normalized Laplacian Matrix

- https://thejb.ai/comprehensive-gnns-3/ 참조

def calculate_normalized_laplacian(adj):

adj = sp.coo_matrix(adj) # (1) adjacency matrix

d = np.array(adj.sum(1)) # (2) degree 계산하기

d_inv_sqrt = np.power(d, -0.5).flatten()

d_inv_sqrt[np.isinf(d_inv_sqrt)] = 0.

d_mat_inv_sqrt = sp.diags(d_inv_sqrt)

normalized_laplacian = sp.eye(adj.shape[0]) - adj.dot(d_mat_inv_sqrt).transpose().dot(d_mat_inv_sqrt).tocoo()

return normalized_laplacian

각종 파일들 load 하기

def load_pickle(pickle_file):

try:

with open(pickle_file, 'rb') as f:

pickle_data = pickle.load(f)

except UnicodeDecodeError as e:

with open(pickle_file, 'rb') as f:

pickle_data = pickle.load(f, encoding='latin1')

except Exception as e:

print('Unable to load data ', pickle_file, ':', e)

raise

return pickle_data

def load_adj(pkl_filename):

sensor_ids, sensor_id_to_ind, adj = load_pickle(pkl_filename)

return adj

def load_dataset(dataset_dir, batch_size, valid_batch_size= None, test_batch_size=None):

data = {}

for category in ['train', 'val', 'test']:

cat_data = np.load(os.path.join(dataset_dir, category + '.npz'))

data['x_' + category] = cat_data['x']

data['y_' + category] = cat_data['y']

scaler = StandardScaler(mean=data['x_train'][..., 0].mean(), std=data['x_train'][..., 0].std())

# Data format

for category in ['train', 'val', 'test']:

data['x_' + category][..., 0] = scaler.transform(data['x_' + category][..., 0])

data['train_loader'] = DataLoaderM(data['x_train'], data['y_train'], batch_size)

data['val_loader'] = DataLoaderM(data['x_val'], data['y_val'], valid_batch_size)

data['test_loader'] = DataLoaderM(data['x_test'], data['y_test'], test_batch_size)

data['scaler'] = scaler

return data

def load_node_feature(path):

fi = open(path)

x = []

for li in fi:

li = li.strip()

li = li.split(",")

e = [float(t) for t in li[1:]]

x.append(e)

x = np.array(x)

mean = np.mean(x,axis=0)

std = np.std(x,axis=0)

z = torch.tensor((x-mean)/std,dtype=torch.float)

return z

평가지표 (metric)

def metric(pred, real):

mae = masked_mae(pred,real,0.0).item()

mape = masked_mape(pred,real,0.0).item()

rmse = masked_rmse(pred,real,0.0).item()

return mae,mape,rmse

def masked_mse(preds, labels, null_val=np.nan):

if np.isnan(null_val):

mask = ~torch.isnan(labels)

else:

mask = (labels!=null_val)

mask = mask.float()

mask /= torch.mean((mask))

mask = torch.where(torch.isnan(mask), torch.zeros_like(mask), mask)

loss = (preds-labels)**2

loss = loss * mask

loss = torch.where(torch.isnan(loss), torch.zeros_like(loss), loss)

return torch.mean(loss)

def masked_rmse(preds, labels, null_val=np.nan):

return torch.sqrt(masked_mse(preds=preds, labels=labels, null_val=null_val))

def masked_mae(preds, labels, null_val=np.nan):

if np.isnan(null_val):

mask = ~torch.isnan(labels)

else:

mask = (labels!=null_val)

mask = mask.float()

mask /= torch.mean((mask))

mask = torch.where(torch.isnan(mask), torch.zeros_like(mask), mask)

loss = torch.abs(preds-labels)

loss = loss * mask

loss = torch.where(torch.isnan(loss), torch.zeros_like(loss), loss)

return torch.mean(loss)

def masked_mape(preds, labels, null_val=np.nan):

if np.isnan(null_val):

mask = ~torch.isnan(labels)

else:

mask = (labels!=null_val)

mask = mask.float()

mask /= torch.mean((mask))

mask = torch.where(torch.isnan(mask), torch.zeros_like(mask), mask)

loss = torch.abs(preds-labels)/labels

loss = loss * mask

loss = torch.where(torch.isnan(loss), torch.zeros_like(loss), loss)

return torch.mean(loss)

class DataLoaderS(object):

# train and valid is the ratio of training set and validation set. test = 1 - train - valid

def __init__(self, file_name, train, valid, device, horizon, window, normalize=2):

self.P = window

self.h = horizon

fin = open(file_name)

self.rawdat = np.loadtxt(fin, delimiter=',')

self.dat = np.zeros(self.rawdat.shape)

self.n, self.m = self.dat.shape

self.normalize = 2

self.scale = np.ones(self.m)

self._normalized(normalize)

self._split(int(train * self.n), int((train + valid) * self.n), self.n)

self.scale = torch.from_numpy(self.scale).float()

tmp = self.test[1] * self.scale.expand(self.test[1].size(0), self.m)

self.scale = self.scale.to(device)

self.scale = Variable(self.scale)

self.rse = normal_std(tmp)

self.rae = torch.mean(torch.abs(tmp - torch.mean(tmp)))

self.device = device

def _normalized(self, normalize):

if (normalize == 0):

self.dat = self.rawdat

if (normalize == 1): # 전체 max

self.dat = self.rawdat / np.max(self.rawdat)

if (normalize == 2): # row별 max ( 각 시계열 별 max )

for i in range(self.m):

self.scale[i] = np.max(np.abs(self.rawdat[:, i]))

self.dat[:, i] = self.rawdat[:, i] / np.max(np.abs(self.rawdat[:, i]))

def _split(self, train, valid, test):

train_set = range(self.P + self.h - 1, train)

valid_set = range(train, valid)

test_set = range(valid, self.n)

self.train = self._batchify(train_set, self.h)

self.valid = self._batchify(valid_set, self.h)

self.test = self._batchify(test_set, self.h)

def _batchify(self, idx_set, horizon):

n = len(idx_set)

X = torch.zeros((n, self.P, self.m))

Y = torch.zeros((n, self.m))

for i in range(n):

end = idx_set[i] - self.h + 1

start = end - self.P

X[i, :, :] = torch.from_numpy(self.dat[start:end, :])

Y[i, :] = torch.from_numpy(self.dat[idx_set[i], :])

return [X, Y]

def get_batches(self, inputs, targets, batch_size, shuffle=True):

length = len(inputs)

if shuffle:

index = torch.randperm(length)

else:

index = torch.LongTensor(range(length))

start_idx = 0

while (start_idx < length):

end_idx = min(length, start_idx + batch_size)

excerpt = index[start_idx:end_idx]

X = inputs[excerpt]

Y = targets[excerpt]

X = X.to(self.device)

Y = Y.to(self.device)

yield Variable(X), Variable(Y)

start_idx += batch_size