21. Reading Wikipedia to Answer Open-Domain Questions (2017)

목차

- Abstract

- Introduction

- Our System : DrQA

- Document Retriever

- Document Reader

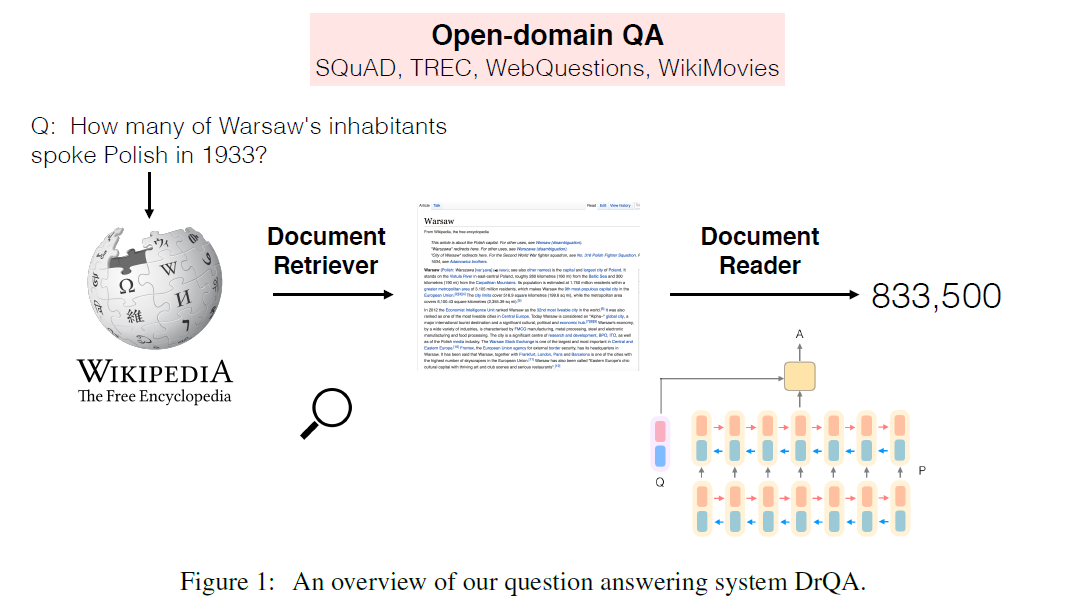

Abstract

-

tackle open-domain QA using Wikipedia as unique knowledge source!

-

combines (1) & (2)

- (1) a search component based on bigram hashing and TF-IDF matching

- (2) multi-layer RNN to detect answers in paragraph

1. Introduction

Wikipedia

- constantly evolving source of detailed information

-

but designed for humans, NOT MACHINES!

-

problem)

- challenges of both large-scale open domain QA

- challenges of machine comprehension of text

ex) in order to answer question…

-

step 1) should retrieve the few relevant articles among more than 5 million items

-

step 2) scan them carefully to find answer

\(\rightarrow\) not good at MRS(Machine Reading at Scale)

Shows how multiple existing QA datasets can be used to evaluate MRS by requiring an open-domain system to perform well on all of them at once!

Develop DrQA

- (1) Document Retriever

- use bigram hashing & TF-IDF matching

- to return a subset of relevant articles, given a question

- (2) Document Reader

- RNN machine comprehension model

- detect answer spans inside few returned documents

2. Our System : DrQA

(1) Document Retriever

(2) Document Reader

2-1. Document Retriever

use an efficient document retrieval system

- narrow down the search space!

compare question & articles

- TF-IDF weighted bag-of-words vectors

- then, improve by taking local word order into account with n-gram ( bi-gram worked best )

Return 5 candidate documents!

2-2. Document Reader

use Neural Network

notation :

- question : \(q\) ( of \(l\) tokens) : \(\{q_1,...,q_l\}\)

- \(n\) paragraphs

- single paragraph \(p\) consists of \(m\) tokens \(\{p_1,...,p_m\}\)

(1) Paragraph Encoding

- represent all tokens \(p_i\) ( in paragraph \(p\) ) as a sequence of feature vectors \(\tilde{\mathbf{p}}_{i} \in \mathbb{R}^{d}\)

- then, pass them to RNN

- model : biLSTM

- \(\left\{\mathbf{p}_{1}, \ldots, \mathbf{p}_{m}\right\}=\operatorname{RNN}\left(\left\{\tilde{\mathbf{p}}_{1}, \ldots, \tilde{\mathbf{p}}_{m}\right\}\right)\).

- Feature vector \(\tilde{\mathbf{p}}_{i}\) is comprised of ….

- 1) word embeddings :

- \[f_{e m b}\left(p_{i}\right)=\mathbf{E}\left(p_{i}\right)\]

- 300-dim Glove word

- 2) exact match

- \[f_{\text {exact_match }}\left(p_{i}\right)=\mathbb{I}\left(p_{i} \in q\right)\]

- 3) Token features

- \(f_{\text {token }}\left(p_{i}\right)=\left(\operatorname{POS}\left(p_{i}\right), \operatorname{NER}\left(p_{i}\right), \operatorname{TF}\left(p_{i}\right)\right)\).

- 4) Aligned question embeddings

- \(f_{\text {align }}\left(p_{i}\right)=\sum_{j} a_{i, j} .\mathbf{E}\left(q_{j}\right)\), where the attention score \(a_{i, j}\)

- 1) word embeddings :

(2) Question Encoding

-

much simpler! apply another RNN on top of word embeddings of \(q_i\)

-

\(\mathrm{q}=\sum_{j} b_{j} \mathrm{q}_{j}\),

where \(b_{j}\) encodes the importance of each question word …. \(b_{j}=\frac{\exp \left(\mathbf{w} \cdot \mathbf{q}_{j}\right)}{\sum_{j^{\prime}} \exp \left(\mathbf{w} \cdot \mathbf{q}_{j^{\prime}}\right)}\).

(3) Prediction

-

at a paragraph level, goal is to predict the span of tokens that is most likely the correct answer

-

\(\begin{aligned} P_{\text {start }}(i) & \propto \exp \left(\mathbf{p}_{i} \mathbf{W}_{s} \mathbf{q}\right) \\ P_{\text {end }}(i) & \propto \exp \left(\mathbf{p}_{i} \mathbf{W}_{e} \mathbf{q}\right) \end{aligned}\).

-

during prediction, choose the best span from token \(i\) ~ token \(i'\) ,

such that \(i \geq i' \geq i+15\) & \(P_\text{start}(i) \times P_\text{end}(i')\) is maximized