[Paper Review] 19. GAN Dissection : Visualizing and Understanding GANs

Contents

- Abstract

- Introduction

- Method

- Characterizing units by Dissection

- Measuring Causal Relationships using Intervention

0. Abstract

Presents an analytic framework to visualize & understand GANs at the

- unit / object / scene level

Step 1 ) identify a group of interpretable units

- that are closely related to object concepts,

- using a segmentation-based network dissection method

Step 2 ) quantify the causal effect of interpretable units

- by measuring the ability of interventions to control objects in the output

1. Introduction

A general method for visualizing & understanding GANs

- at different levels of abstraction

- from individual neurons to objects to contextual relationships

2. Method

- Analyze how objects such as trees are encoded by the internal representations of a GAN generator

- Notation

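- GAN : \(G : \mathbf{z} \rightarrow \mathbf{x}\), where \(\mathbf{x} \in \mathbb{R}^{H \times W \times 3}\)

- Split \(G\) at an internal layer : \(\mathbf{x}=f(\mathbf{r})=f(h(\mathbf{z}))=G(\mathbf{z})\), where \(\mathbf{r}=h(\mathbf{z})\) is the tensor of feature maps at that layer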

- Since \(\mathbf{r}\) is the only input to \(f\), it contains all the data necessary to produce the image!
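The split \(G = f \circ h\) can be illustrated with a tiny NumPy sketch. The generator below is a hypothetical toy (random weights, one linear layer for \(h\), nearest-neighbour upsampling plus a per-pixel RGB projection for \(f\)), not the architecture used in the paper; it only shows that \(\mathbf{r}=h(\mathbf{z})\) is a `(units, h, w)` tensor from which \(f\) alone produces the image.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy generator, NOT the architecture from the paper:
# z (8-dim) -> r (4 units on a 2x2 grid) -> x (4x4 RGB image).
W_h = rng.standard_normal((8, 4 * 2 * 2))
W_f = rng.standard_normal((4, 3))

def h(z):
    """Early layers: latent z -> feature map r with shape (units, h, w)."""
    return np.maximum(z @ W_h, 0.0).reshape(4, 2, 2)  # linear layer + ReLU

def f(r):
    """Remaining layers: feature map r -> image x with shape (H, W, 3)."""
    up = r.repeat(2, axis=1).repeat(2, axis=2)          # nearest-neighbour 2x upsampling -> (4, 4, 4)
    return np.tanh(np.einsum("uhw,uc->hwc", up, W_f))   # per-pixel linear map to RGB, squashed to [-1, 1]

def G(z):
    return f(h(z))  # x = f(r) = f(h(z)) = G(z)

z = rng.standard_normal(8)
r = h(z)
x = G(z)
print(r.shape, x.shape)  # (4, 2, 2) (4, 4, 3)
```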

Question

- Not whether information about concept \(c\) is in \(\mathbf{r}\) (X)

- But how such information is encoded in \(\mathbf{r}\) (O)

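- Factor \(\mathbf{r}\) at any location \(\mathrm{P}\) into two components : \(\mathbf{r}_{\mathbb{U}, \mathrm{P}}=\left(\mathbf{r}_{\mathrm{U}, \mathrm{P}}, \mathbf{r}_{\overline{\mathrm{U}}, \mathrm{P}}\right)\)

( where each channel of \(\mathbf{r}\) is a unit, \(\mathrm{U}\) : set of unit indices of interest, \(\overline{\mathrm{U}}\) : its complement, \(\mathbb{U}\) : set of all units )

- the generation of object \(c\) at location \(\mathrm{P}\) depends mainly on \(\mathbf{r}_{\mathrm{U}, \mathrm{P}}\)

- and is insensitive to the other units \(\mathbf{r}_{\overline{\mathrm{U}}, \mathrm{P}}\)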
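To make the notation concrete, here is a minimal NumPy sketch, assuming \(\mathbf{r}\) is stored as a `(units, h, w)` array, \(\mathrm{U}\) as a list of channel indices, and \(\mathrm{P}\) as a boolean `(h, w)` mask. `split_units` is a hypothetical helper name, not something from the paper's code.

```python
import numpy as np

def split_units(r, U, P):
    """Split feature map r (units, h, w) at pixel mask P into (r_{U,P}, r_{Ubar,P}).

    U: list of unit (channel) indices of interest; P: boolean (h, w) mask.
    """
    mask_U = np.zeros(r.shape[0], dtype=bool)
    mask_U[U] = True
    r_P = r[:, P]                      # (units, |P|): every unit at the pixels in P
    return r_P[mask_U], r_P[~mask_U]   # units in U, units in the complement of U

r = np.arange(4 * 2 * 2, dtype=float).reshape(4, 2, 2)
P = np.array([[True, False], [False, True]])
r_U_P, r_Ubar_P = split_units(r, [0, 2], P)
```

Together, the two parts hold every unit at location \(\mathrm{P}\), i.e. \(\mathbf{r}_{\mathbb{U}, \mathrm{P}}\).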

Analyze the structure of \(\mathbf{r}\) in 2 phases

- Phase 1) Dissection : measure the agreement between individual units of \(\mathbf{r}\) & every class \(c\)

- Phase 2) Intervention : for the represented classes (identified in Phase 1), identify causal sets of units & measure their causal effects ( by forcing sets of units on/off )

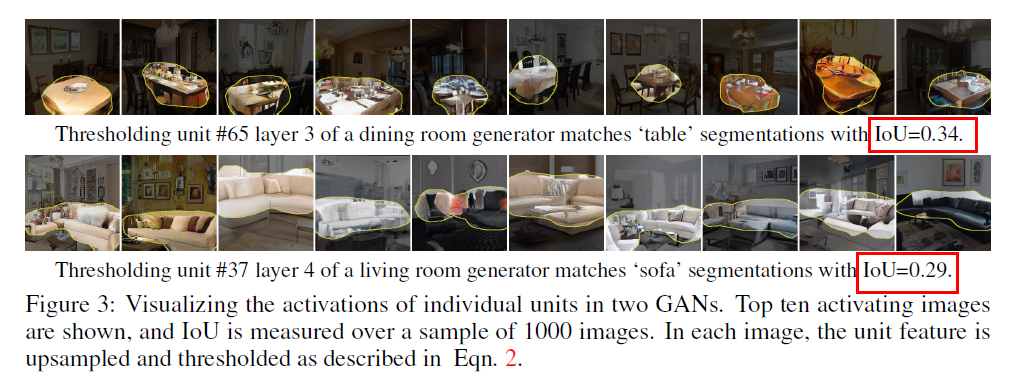

(1) Characterizing units by Dissection

\(\mathbf{r}_{u, \mathbb{P}}\) : one-channel \(h \times w\) feature map of unit \(u\) ( \(\mathbb{P}\) : set of all feature map pixels )

\(\rightarrow\) Q) Does \(\mathbf{r}_{u, \mathbb{P}}\) encode a semantic class (e.g., tree)?

Step 1) Select a universe of concepts \(c \in \mathbb{C}\) for which we have a semantic segmentation \(\mathbf{s}_{c}(\mathbf{x})\) of each class

Step 2) Then, quantify the spatial agreement between

- 1) unit \(u\)'s thresholded feature map (upsampled to image resolution)

- 2) concept \(c\)'s segmentation

using the IoU (intersection over union) measure
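The IoU step can be sketched in NumPy as follows. Assumptions: nearest-neighbour upsampling (image size a multiple of the feature map size), intersections and unions summed over all samples before dividing, and a threshold `t` that is simply passed in, since how it is chosen is not covered in these notes. `upsample` and `unit_concept_iou` are hypothetical names.

```python
import numpy as np

def upsample(fmap, H, W):
    """Nearest-neighbour upsampling of an (h, w) map to (H, W); assumes H, W are multiples of h, w."""
    h, w = fmap.shape
    return fmap.repeat(H // h, axis=0).repeat(W // w, axis=1)

def unit_concept_iou(unit_maps, seg_masks, t):
    """IoU between unit u's thresholded, upsampled feature maps and concept c's segmentations.

    unit_maps: list of (h, w) activations of one unit, one per sampled z.
    seg_masks: list of boolean (H, W) masks s_c(x) for the same samples.
    t: activation threshold (assumed given).
    """
    inter = union = 0
    for fmap, seg in zip(unit_maps, seg_masks):
        act = upsample(fmap, *seg.shape) > t          # binary "unit is on" mask at image resolution
        inter += np.logical_and(act, seg).sum()
        union += np.logical_or(act, seg).sum()
    return inter / union if union > 0 else 0.0

fmap = np.array([[1.0, 0.0], [0.0, 0.0]])   # unit fires only in the top-left cell
seg = np.zeros((4, 4), dtype=bool)
seg[:2, :3] = True                           # concept occupies a 2x3 region
iou = unit_concept_iou([fmap], [seg], t=0.5)  # 4 overlapping pixels / 6 pixels in the union
```

A unit whose IoU with some concept is high is a candidate "tree unit", "door unit", and so on.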

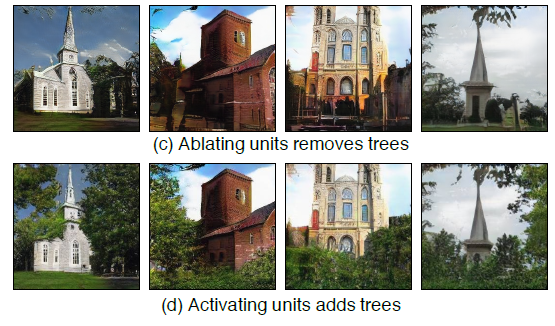

(2) Measuring Causal Relationships using Intervention

Test whether a set of units \(U\) in \(\mathbf{r}\) causes the generation of \(c\)!

- by forcing the units of \(U\) on/off

Decompose the feature map \(\mathbf{r}\) into 2 parts : \(\left(\mathbf{r}_{\mathrm{U}, \mathrm{P}}, \mathbf{r}_{\overline{\mathrm{U}, \mathrm{P}}}\right)\)

( where \(\mathbf{r}_{\overline{\mathrm{U}, \mathrm{P}}}\) : unforced components of \(\mathbf{r}\) )

Original Image :

- \(\mathbf{x}=G(\mathbf{z}) \equiv f(\mathbf{r}) \equiv f\left(\mathbf{r}_{\mathrm{U}, \mathrm{P}}, \mathbf{r}_{\overline{\mathrm{U}, \mathrm{P}}}\right)\).

Image with \(U\) ablated at pixels \(P\) :

- \(\mathbf{x}_{a}=f\left(\mathbf{0}, \mathbf{r}_{\overline{\mathrm{U}, \mathrm{P}}}\right)\)

Image with \(U\) inserted at pixels \(P\) (units forced to a fixed value \(\mathbf{k}\)) :

- \(\mathbf{x}_{i}=f\left(\mathbf{k}, \mathbf{r}_{\overline{\mathrm{U}, \mathrm{P}}}\right)\)
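A minimal NumPy sketch of the two interventions, assuming the same `(units, h, w)` layout with \(\mathrm{U}\) as channel indices and \(\mathrm{P}\) as a boolean pixel mask. The helper names `intervene`, `ablate` and `insert` are hypothetical; passing the returned feature map through the generator tail \(f\) would give \(\mathbf{x}_a\) or \(\mathbf{x}_i\).

```python
import numpy as np

def intervene(r, U, P, value):
    """Copy of feature map r (units, h, w) with units U forced to `value` at pixels P.

    U: unit indices; P: boolean (h, w) mask; value: scalar or one value per unit in U.
    Entries outside (U, P), i.e. r_{overline{U,P}}, are left unchanged.
    """
    r_new = r.copy()
    values = np.broadcast_to(np.asarray(value, dtype=r.dtype), (len(U),))
    for u, v in zip(U, values):
        r_new[u][P] = v          # r_new[u] is a view, so this writes into r_new
    return r_new

def ablate(r, U, P):
    return intervene(r, U, P, 0.0)   # r_{U,P} := 0, then f(.) gives x_a

def insert(r, U, P, k):
    return intervene(r, U, P, k)     # r_{U,P} := k, then f(.) gives x_i

r = np.ones((3, 2, 2))
P = np.array([[True, False], [False, False]])
r_a = ablate(r, [1], P)
r_i = insert(r, [0, 2], P, 5.0)
```

Working on a copy keeps the original \(\mathbf{r}\) intact, so the same sample can be used for both \(\mathbf{x}_a\) and \(\mathbf{x}_i\).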

An object is caused by \(U\) if :

- the object appears in \(x_i\)

- the object disappears from \(x_a\)

This causality can be quantified by :

- comparing the presence of the object (e.g., trees) in \(x_i\) & \(x_a\)

- averaging the effect over all locations & images

ACE

- average causal effect (ACE) of units \(\mathrm{U}\) on the generation of class \(c\)

- \(\delta_{\mathrm{U} \rightarrow c} \equiv \mathbb{E}_{\mathbf{z}, \mathrm{P}}\left[\mathbf{s}_{c}\left(\mathbf{x}_{i}\right)\right]-\mathbb{E}_{\mathbf{z}, \mathrm{P}}\left[\mathbf{s}_{c}\left(\mathbf{x}_{a}\right)\right]\).
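The ACE formula can be sketched as a Monte Carlo average. This is an illustration under assumptions, not the paper's code: `f` (generator tail) and `seg_c` (segmenter for class \(c\)) are hypothetical stand-ins passed in as functions, each sample is a pair of a feature map \(\mathbf{r}=h(\mathbf{z})\) and an intervention mask \(\mathrm{P}\), and \(\mathbf{s}_{c}(\mathbf{x})\) is summarised as the fraction of pixels labelled \(c\).

```python
import numpy as np

def force(r, U, P, value):
    """Copy of r (units, h, w) with units U set to `value` at pixels P (boolean (h, w) mask)."""
    out = r.copy()
    for u in U:
        out[u][P] = value
    return out

def average_causal_effect(samples, f, seg_c, U, k):
    """delta_{U -> c} = E_{z,P}[s_c(x_i)] - E_{z,P}[s_c(x_a)].

    samples: (r, P) pairs, with r = h(z) for a sampled z and P an intervention mask.
    f: generator tail (r -> image); seg_c: image -> boolean mask of class c.
    s_c(x) is summarised as the fraction of pixels labelled c (an assumption).
    """
    inserted, ablated = [], []
    for r, P in samples:
        x_i = f(force(r, U, P, k))    # units U inserted at P
        x_a = f(force(r, U, P, 0.0))  # units U ablated at P
        inserted.append(seg_c(x_i).mean())
        ablated.append(seg_c(x_a).mean())
    return np.mean(inserted) - np.mean(ablated)

# Toy stand-ins (hypothetical): f sums channels, seg_c thresholds the result.
samples = [
    (np.zeros((2, 2, 2)), np.array([[True, False], [False, False]])),
    (np.zeros((2, 2, 2)), np.ones((2, 2), dtype=bool)),
]
ace = average_causal_effect(samples, f=lambda r: r.sum(axis=0),
                            seg_c=lambda x: x > 0.5, U=[0], k=1.0)
print(ace)  # 0.625
```

A large positive \(\delta_{\mathrm{U} \rightarrow c}\) means that switching the units on adds class \(c\) while switching them off removes it.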