S\(^4\)L : Self-Supervised Semi-Supervised Learning

Contents

- Abstract

- Introduction

- Related Work

- Semi-supervised Learning

- Self-supervised Learning

- Methods

- Self-supervised Semi-supervised Learning

- Semi-supervised Baselines

0. Abstract

propose the framework of \(S^4L\) ( = Self-Supervised Semi-Supervised Learning )

\(\rightarrow\) use it do derive 2 novel semi-supervised image classification methods

1. Introduction

-

Hypthoesize that self-supervised learning techniques could dramatically benefit from a small amount of labeled examples

-

Bridge self-supervised & semi-supervised learning

2. Related Work

(1) Semi-supervised Learning

-

use both labeled & unlabeled datasets

-

standard for evaluating semi-supervised algorithms :

- (1) start with LABELED dataset

- (2) keep only portion of labels

- (3) treat the rest as UNLABELED

-

add consistency regularization losses

- on the unlabeled data

-

measure the discrepancy between predictions made on perturbed unlabeled data points

- result :

- by minimizing this loss, models implicitly push the decision boundary away from high-density parts of the unlabeled data

2 additional approaches for semi-supervised laerning

- (1) Pseudo-Labeling

- imputes approximate classes on unlabeled data

- model = trained by ONLY LABLED dataset

- (2) conditional entropy minimization

- UNLABELED data : encourgaged to make confident predictions on some class

(2) Self-supervised Learning

- various pretext ( surrogate ) tasks

- Use only unsupervised data

3. Methods

focus on semi-supervised image classification problem

Notation

- assume an (unknown) data distn : \(p(X, Y)\)

- labeled traning set : \(D_l\) ……. sampled from \(p(X, Y)\)

- unlabeled traning set : \(D_u\) ……. sampled from \(\)p(X)

Objective Function :

- \(\min _\theta \mathcal{L}_l\left(D_l, \theta\right)+w \mathcal{L}_u\left(D_u, \theta\right)\).

(1) Self-supervised Semi-supervised Learning

2 prominent self-supervised techniques :

- (1) predicting image rotation

- (2) exemplar

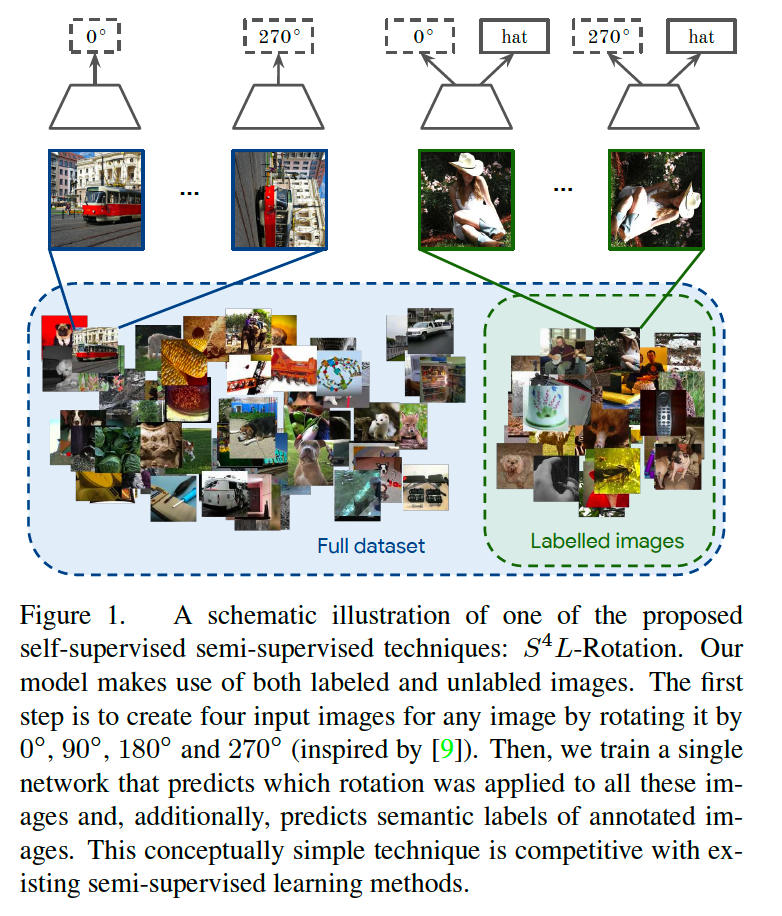

a) \(S^4\) L-Rotation

rotation degree : (0,90,180,270) \(\rightarrow\) 4-class classification

( also apply it to LABELED datasets )

Loss function : \(\mathcal{L}_{r o t}=\frac{1}{ \mid \mathcal{R} \mid } \sum_{r \in \mathcal{R}} \sum_{x \in D_u} \mathcal{L}\left(f_\theta\left(x^r\right), r\right)\).

b) \(S^4\) L-Exemplar

Cropping … produce 8 different instances of each images

implement \(\mathcal{L}_u\) as the batch hard triplet loss with a soft margin

- applied to all 8 instances of each image

(2) Semi-supervised Baselines

a) Virtual Adversarial Traning (VAT)

-

idea ) making the predicted labels ROBUST around input data point against local perturbation

-

VAT loss for model \(f_{\theta}\) :

-

\[\mathcal{L}_{\mathrm{vat}}=\frac{1}{ \mid \mathcal{D}_u \mid } \sum_{x \in \mathcal{D}_u} \mathrm{KL}\left(f_\theta(x) \mid \mid f_\theta(x+\Delta x)\right)\]

where \(\Delta x=\arg \max \operatorname{KL}\left(f_\theta(x) \mid \mid f_\theta(x+\delta)\right)\)

-

\[\mathcal{L}_{\mathrm{vat}}=\frac{1}{ \mid \mathcal{D}_u \mid } \sum_{x \in \mathcal{D}_u} \mathrm{KL}\left(f_\theta(x) \mid \mid f_\theta(x+\Delta x)\right)\]

b) Conditional Entropy Minimization (EntMin)

-

assumption ) unlabeled data indeed has one of the classes that we are training on

-

adds a loss for unlabeled data that, when minimized,

\(\rightarrow\) Encourages the model to make CONFIDENT predictions on unlabeled datasets

-

\(\mathcal{L}_{\text {entmin }}=\frac{1}{ \mid \mathcal{D}_u \mid } \sum_{x \in \mathcal{D}_u} \sum_{y \in Y}-f_\theta(y \mid x) \log f_\theta(y \mid x)\).

\(\rightarrow\) consider both loss …. \(\mathcal{L}_u=w_{v a t} \mathcal{L}_{\mathrm{vat}}+w_{\text {entmin }} \mathcal{L}_{\text {entmin }}\)

c) Pseudo-Label

1) Train model only on LABELED data

-

then, make predictions on UNLABELED data

-

predictions, whose confidence is above certain threshold

\(\rightarrow\) add it to training data

-

retrain the model