[ CS224W - Colab 0 ]

( Reference: CS224W: Machine Learning with Graphs )

```python
import networkx as nx
```

1. Graph

Directed & Undirected graph

```python
G_undirected = nx.Graph()
G_directed = nx.DiGraph()
```
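To make the directed/undirected distinction concrete, here is a toy, pure-Python sketch. The `build_adjacency` helper is hypothetical and far simpler than NetworkX's internal storage: an undirected edge is recorded under both endpoints, while a directed edge is recorded only from source to target.

```python
def build_adjacency(edges, directed):
    """Toy adjacency map: node -> set of nodes reachable by one edge (hypothetical helper)."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set())      # make sure v exists as a node
        if not directed:
            adj[v].add(u)             # undirected: the edge also points back from v to u
    return adj

edges = [(0, 1), (1, 2)]
undirected = build_adjacency(edges, directed=False)
directed = build_adjacency(edges, directed=True)
print(undirected[1])  # {0, 2}
print(directed[1])    # {2}
print(directed[2])    # set()
```

This mirrors why, after `add_edge(0, 1)`, an `nx.Graph` lists 1 as a neighbor of 0 and 0 as a neighbor of 1, whereas an `nx.DiGraph` only lists 1 among the successors of 0.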

Graph-level attribute

```python
G_undirected.graph['graph_attr1'] = "A"
```

2. Node

Add nodes ( + node-level attributes )

```python
G = nx.Graph()
num_nodes = 10
node_attr1s = [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
node_attr2s = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

for idx in range(num_nodes):
    G.add_node(idx,
               attr1=node_attr1s[idx],
               attr2=node_attr2s[idx])
```

Add multiple nodes

```python
G.add_nodes_from([
    (10, {"attr1": 21, "attr2": 110}),
    (11, {"attr1": 23, "attr2": 120}),
])
```

Get node attributes

```python
node_0_attr = G.nodes[0]
print(node_0_attr)
# Node 0 has the attributes {'attr1': 1, 'attr2': 10}
```
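Conceptually, node attributes behave like a dictionary keyed by node ID whose values are per-node attribute dicts, which is what `G.nodes[0]` hands back. A plain-Python sketch of that mapping, built from the same attribute lists (the `nodes` dict is illustrative only, not a NetworkX object):

```python
num_nodes = 10
node_attr1s = [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
node_attr2s = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

# node ID -> attribute dict, analogous to looking up G.nodes[idx]
nodes = {idx: {"attr1": node_attr1s[idx], "attr2": node_attr2s[idx]}
         for idx in range(num_nodes)}

# bulk insert of (id, attrs) pairs, in the spirit of add_nodes_from:
# a new node gets a fresh dict, an existing node has its dict updated
for idx, attrs in [(10, {"attr1": 21, "attr2": 110}),
                   (11, {"attr1": 23, "attr2": 120})]:
    nodes.setdefault(idx, {}).update(attrs)

print(nodes[0])    # {'attr1': 1, 'attr2': 10}
print(len(nodes))  # 12
```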

Print all nodes

```python
# nodes together with their attribute dicts
for node in G.nodes(data=True):
    print(node)

# node IDs only
for node in G.nodes():
    print(node)
```

```
(0, {'attr1': 1, 'attr2': 10})
(1, {'attr1': 3, 'attr2': 20})
(2, {'attr1': 5, 'attr2': 30})
...
0
1
2
...
```

Number of nodes:

```python
num_nodes = G.number_of_nodes()  # 12 (nodes 0-11)
```

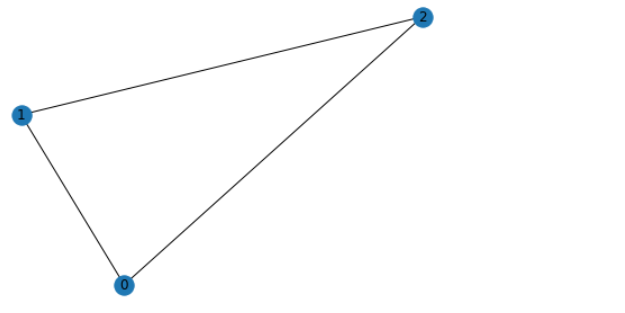

3. Edge

Add edge

```python
G.add_edge(0, 1, weight=0.5)
```

Add multiple edges

```python
G.add_edges_from([
    (1, 2, {"weight": 0.3}),
    (2, 0, {"weight": 0.1}),
])
```

Print all edges

```python
for edge in G.edges():
    print(edge)
```

```
(0, 1)
(0, 2)
(1, 2)
```
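The edge added as `(2, 0)` is printed as `(0, 2)` because an undirected graph reports each edge once, from whichever endpoint is visited first. The pure-Python sketch below reproduces that behavior over a dict-of-dicts adjacency; `undirected_edges` is a hypothetical helper and a simplified model of the iteration, not NetworkX's actual code.

```python
# Toy adjacency mirroring the graph above: nodes 0-11 and three weighted edges.
adj = {n: {} for n in range(12)}
for u, v, weight in [(0, 1, 0.5), (1, 2, 0.3), (2, 0, 0.1)]:
    attrs = {"weight": weight}
    adj[u][v] = attrs   # an undirected edge is stored under both endpoints,
    adj[v][u] = attrs   # sharing a single attribute dict

def undirected_edges(adj):
    """Yield each undirected edge once, from the endpoint visited first."""
    seen = set()
    for u, nbrs in adj.items():
        for v in nbrs:
            if v not in seen:
                yield (u, v)
        seen.add(u)

print(list(undirected_edges(adj)))  # [(0, 1), (0, 2), (1, 2)]
print(adj[0][1])                    # {'weight': 0.5}
```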

Get edge attributes

```python
print(G.edges[(0, 1)])
# Edge (0, 1) has the attributes {'weight': 0.5}
```

Number of edges:

```python
num_edges = G.number_of_edges()  # 3
```

4. Visualization

```python
nx.draw(G, with_labels=True)
```

5. Degree & Neighbors

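```python
node_id = 2

# degree: number of edges incident to the node
print(G.degree[node_id])           # 2

# neighbors: G.neighbors() returns an iterator, so wrap it in list() to see it
print(list(G.neighbors(node_id)))  # [1, 0]
```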
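The degree of a node is the number of edges touching it, and its neighbors are the nodes at the other end of those edges. A minimal pure-Python sketch, assuming a simple undirected graph without self-loops or duplicate edges (`degrees_and_neighbors` is a hypothetical helper), computes both for the graph built above:

```python
def degrees_and_neighbors(num_nodes, edges):
    """Return ({node: degree}, {node: [neighbors]}) for a simple undirected graph."""
    neighbors = {n: [] for n in range(num_nodes)}
    for u, v in edges:              # assumes no self-loops and no duplicate edges
        neighbors[u].append(v)
        neighbors[v].append(u)
    degree = {n: len(nbrs) for n, nbrs in neighbors.items()}
    return degree, neighbors

# nodes 0-11 and the three edges added in section 3
degree, neighbors = degrees_and_neighbors(12, [(0, 1), (1, 2), (2, 0)])
print(degree[2], neighbors[2])  # 2 [1, 0]
print(degree[5])                # 0 (node 5 has no edges)
```

Summing all degrees gives twice the number of edges, since every edge is counted once at each endpoint.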

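6. PageRank

PageRank of nodes

```python
num_nodes = 4
# path graph 0-1-2-3; DiGraph() turns each undirected edge into a pair of directed edges
G = nx.DiGraph(nx.path_graph(num_nodes))
# alpha is the damping factor; the result is a dict {node: PageRank score}
pr = nx.pagerank(G, alpha=0.8)
```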
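PageRank is the stationary distribution of a random surfer who follows a random out-edge with probability `alpha` and otherwise jumps to a uniformly random node. The power-iteration sketch below re-implements that textbook definition (uniform teleportation, rank of nodes without out-edges spread evenly); the `pagerank_power` name and its tolerance handling are assumptions, not NetworkX's code. On the 4-node path with `alpha=0.8`, symmetry plus the fixed-point equations give exactly 5/28 for the end nodes and 9/28 for the middle nodes, so `nx.pagerank` should return values close to these.

```python
def pagerank_power(out_edges, alpha=0.85, tol=1e-10, max_iter=1000):
    """Power iteration for PageRank with uniform teleportation (illustrative sketch).

    out_edges: {node: [successor, ...]} describing a directed graph.
    """
    nodes = list(out_edges)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        # rank held by nodes with no out-edges is redistributed uniformly
        dangling = sum(rank[v] for v in nodes if not out_edges[v])
        new_rank = {v: (1.0 - alpha) / n + alpha * dangling / n for v in nodes}
        for u in nodes:
            if out_edges[u]:
                share = alpha * rank[u] / len(out_edges[u])
                for v in out_edges[u]:
                    new_rank[v] += share
        converged = sum(abs(new_rank[v] - rank[v]) for v in nodes) < n * tol
        rank = new_rank
        if converged:
            break
    return rank

# DiGraph(path_graph(4)): every undirected edge becomes two directed edges
path4 = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
pr = pagerank_power(path4, alpha=0.8)
print({v: round(score, 4) for v, score in pr.items()})
# {0: 0.1786, 1: 0.3214, 2: 0.3214, 3: 0.1786}
```

The middle nodes score higher because they receive rank from two neighbors, while each end node only receives half of one middle node's rank.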

7. Dataset

Example: KarateClub

```python
from torch_geometric.datasets import KarateClub

dataset = KarateClub()
```

describe dataset

```python
len(dataset)          # 1 graph
dataset.num_features  # 34 features
dataset.num_classes   # 4 classes
```

get one graph ( + number of nodes & edges )

```python
G1 = dataset[0]
num_nodes = G1.num_nodes  # 34
num_edges = G1.num_edges  # 156 (each undirected edge is stored twice)
```

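Average node degree

```python
# PyG stores every undirected edge in both directions, so num_edges already
# counts each edge twice and the average degree is simply num_edges / num_nodes
# (equivalently 2 * |E| / |V| with |E| counted once per undirected edge)
avg_degree = num_edges / num_nodes  # 156 / 34 ≈ 4.59 for KarateClub
```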
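To see why dividing `num_edges` by `num_nodes` gives the average degree, here is a small pure-Python check on a hypothetical triangle graph stored PyG-style as a 2-row `edge_index` containing both directions of every edge. The per-node count, the `num_edges / num_nodes` shortcut and the textbook 2·|E|/|V| all agree.

```python
from collections import Counter

# triangle 0-1-2 in PyG's convention: row 0 = sources, row 1 = targets,
# every undirected edge listed once in each direction
edge_index = [[0, 1, 1, 2, 2, 0],
              [1, 0, 2, 1, 0, 2]]
num_nodes = 3
num_edges = len(edge_index[0])             # 6 directed entries = 2 * 3 undirected edges

avg_from_shortcut = num_edges / num_nodes  # what avg_degree computes
out_degree = Counter(edge_index[0])        # degree = times a node appears as a source
avg_from_counts = sum(out_degree[n] for n in range(num_nodes)) / num_nodes
avg_textbook = 2 * (num_edges // 2) / num_nodes  # 2|E| / |V| with |E| undirected

print(avg_from_shortcut, avg_from_counts, avg_textbook)  # 2.0 2.0 2.0
```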

check other properties

```python
G1.has_isolated_nodes()  # False
G1.is_undirected()       # True
G1.has_self_loops()      # False
```
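The three checks can be expressed directly on the edge list. The pure-Python functions below are simplified sketches with hypothetical names (PyG's own versions operate on tensors, and its undirectedness check can also compare edge attributes). Here `zip(*edge_index)` plays the role of `edge_index.T`, turning the two rows into (source, target) pairs.

```python
def has_self_loops(edge_index):
    """True if some edge starts and ends at the same node."""
    return any(s == t for s, t in zip(*edge_index))

def is_undirected(edge_index):
    """True if every edge (s, t) also appears reversed as (t, s)."""
    pairs = set(zip(*edge_index))
    return all((t, s) in pairs for s, t in pairs)

def has_isolated_nodes(edge_index, num_nodes):
    """True if some node is not an endpoint of any edge (self-loops ignored)."""
    touched = set()
    for s, t in zip(*edge_index):
        if s != t:
            touched.update((s, t))
    return len(touched) < num_nodes

# triangle 0-1-2 stored in both directions; with num_nodes=4, node 3 has no edges
edge_index = [[0, 1, 1, 2, 2, 0],
              [1, 0, 2, 1, 0, 2]]
print(has_self_loops(edge_index))         # False
print(is_undirected(edge_index))          # True
print(has_isolated_nodes(edge_index, 4))  # True (node 3)
```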

get edge indices

```python
G1.edge_index.T  # shape [num_edges, 2]: one (source, target) pair per row
```

8. GNN with PyTorch

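converting a torch_geometric graph to NetworkX

```python
from torch_geometric.utils import to_networkx

data = dataset[0]  # the single KarateClub graph (the same graph as G1 above)
G = to_networkx(data, to_undirected=True)
```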
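With `to_undirected=True`, the two directed copies of each edge in `edge_index` end up as a single edge of an undirected NetworkX graph. The pure-Python sketch below shows that collapse on a hypothetical triangle by keying each pair on its sorted endpoints; it illustrates the idea only and is not how `to_networkx` is implemented. For KarateClub the same reasoning turns the 156 directed entries into 78 undirected edges.

```python
def undirected_edge_set(edge_index):
    """Collapse (s, t) and (t, s) into one undirected edge, keyed by sorted endpoints."""
    return {tuple(sorted(pair)) for pair in zip(*edge_index)}

edge_index = [[0, 1, 1, 2, 2, 0],
              [1, 0, 2, 1, 0, 2]]
edges = undirected_edge_set(edge_index)
print(sorted(edges))                          # [(0, 1), (0, 2), (1, 2)]
print(len(edge_index[0]), "->", len(edges))   # 6 -> 3
```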

Import packages

```python
import torch
from torch.nn import Linear
from torch_geometric.nn import GCNConv
```

GNN model with PyTorch

```python
class GCN(torch.nn.Module):
    def __init__(self, num_classes, input_dim, embed_dim, hidden_dim, num_layers):
        super(GCN, self).__init__()
        torch.manual_seed(12345)

        # 0) attributes
        self.num_classes = num_classes
        self.input_dim = input_dim
        self.hidden_dim = hidden_dim
        self.embed_dim = embed_dim
        self.num_layers = num_layers

        # 1) classifier: embedding (embed_dim) -> class scores (num_classes)
        self.classifier = Linear(embed_dim, self.num_classes)

        # 2) graph convolution layers: input -> hidden, then (num_layers - 2) x hidden -> hidden
        self.convs = torch.nn.ModuleList()
        self.convs.append(GCNConv(self.input_dim, self.hidden_dim))
        for _ in range(self.num_layers - 2):
            self.convs.append(GCNConv(self.hidden_dim, self.hidden_dim))
        # final layer: hidden -> embedding
        self.convs_final = GCNConv(self.hidden_dim, embed_dim)

        # 3) activation function
        self.relu = torch.nn.ReLU()

    def forward(self, x, edge_index):
        # 1) pass convolution layers (num_layers - 1 of them)
        for conv in self.convs:
            x = conv(x, edge_index)
            x = x.tanh()

        # 2) pass final convolution layer & make embedding
        h = self.relu(x)
        # functional dropout follows model.train()/model.eval(); a new nn.Dropout
        # created on every call would always be in training mode
        h = torch.nn.functional.dropout(h, p=0.2, training=self.training)
        h = self.convs_final(h, edge_index)
        embeddings = h.tanh()  # Final GNN embedding space.

        # 3) pass final classifier
        out = self.classifier(embeddings)
        return out, embeddings
```
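Each `GCNConv` layer, with its default settings, follows the Kipf & Welling propagation rule: add a self-loop to every node, then combine neighbor features with symmetric degree normalization before the learnable linear map, roughly X' = D^(-1/2) (A + I) D^(-1/2) X W, where D is the degree matrix of A + I. The pure-Python sketch below implements only the normalized aggregation (the weight W and the bias are left out), so it illustrates the rule rather than reproducing PyG's implementation; `gcn_aggregate` is a hypothetical name.

```python
import math

def gcn_aggregate(x, edges, num_nodes):
    """Normalized GCN aggregation without weights or bias (illustrative sketch).

    x: list of feature vectors, one per node; edges: undirected (u, v) pairs.
    Computes x_i' = sum over j in N(i) plus i itself of x_j / sqrt(deg_i * deg_j),
    where degrees are counted after adding a self-loop to every node.
    """
    nbrs = {i: {i} for i in range(num_nodes)}  # start with self-loops
    for u, v in edges:
        nbrs[u].add(v)
        nbrs[v].add(u)
    deg = {i: len(nbrs[i]) for i in range(num_nodes)}

    out = []
    for i in range(num_nodes):
        row = [0.0] * len(x[i])
        for j in nbrs[i]:
            coef = 1.0 / math.sqrt(deg[i] * deg[j])  # symmetric normalization
            for k, value in enumerate(x[j]):
                row[k] += coef * value
        out.append(row)
    return out

# triangle 0-1-2 plus an isolated node 3 (it only sees its own self-loop)
x = [[1.0], [2.0], [3.0], [7.0]]
out = gcn_aggregate(x, [(0, 1), (1, 2), (2, 0)], num_nodes=4)
print([[round(v, 6) for v in row] for row in out])  # [[2.0], [2.0], [2.0], [7.0]]
```

In the triangle every node has degree 3 after self-loops, so each coefficient is 1/3 and every node ends up with the mean feature; stacking layers, as `forward` does, spreads information one hop further per layer.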

Hyperparameters

```python
input_dim = dataset.num_features
hidden_dim = 16
embed_dim = 2
num_classes = dataset.num_classes
num_layers = 3
```

GNN model

```python
model = GCN(num_classes, input_dim, embed_dim, hidden_dim, num_layers)
print(model)
```

```
GCN(
  (classifier): Linear(in_features=2, out_features=4, bias=True)
  (convs): ModuleList(
    (0): GCNConv(34, 16)
    (1): GCNConv(16, 16)
  )
  (convs_final): GCNConv(16, 2)
  (relu): ReLU()
)
```
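The printed summary follows from the constructor arithmetic: one input layer, `num_layers - 2` hidden layers, and `convs_final`. The pure-Python sketch below (hypothetical helpers, assuming `num_layers >= 2`) reproduces those shapes and estimates the parameter count, assuming each `GCNConv(in, out)` holds an `in x out` weight plus an `out`-sized bias, like `Linear`. Under that assumption the total should match `sum(p.numel() for p in model.parameters())`.

```python
def gcn_layer_dims(input_dim, hidden_dim, embed_dim, num_layers):
    """(in, out) sizes of the GCN layers built in GCN.__init__ (assumes num_layers >= 2)."""
    dims = [(input_dim, hidden_dim)]                       # convs[0]
    dims += [(hidden_dim, hidden_dim)] * (num_layers - 2)  # remaining convs
    dims.append((hidden_dim, embed_dim))                   # convs_final
    return dims

def count_params(dims):
    """Weights (in * out) plus bias (out) for every layer in dims."""
    return sum(i * o + o for i, o in dims)

convs = gcn_layer_dims(input_dim=34, hidden_dim=16, embed_dim=2, num_layers=3)
classifier = [(2, 4)]  # Linear(embed_dim, num_classes)
print(convs)                             # [(34, 16), (16, 16), (16, 2)]
print(count_params(convs + classifier))  # 878
```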

```python
model = GCN(num_classes, input_dim, embed_dim, hidden_dim, num_layers)
_, h = model(data.x, data.edge_index)
print(f'Embedding shape: {list(h.shape)}')
# Embedding shape: [34, 2]
```

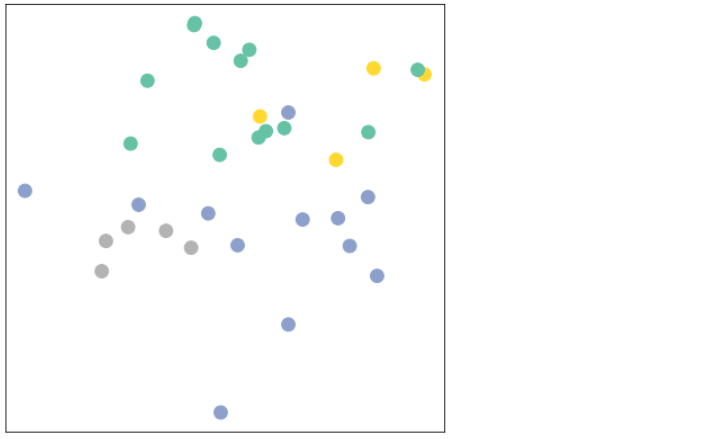

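```python
# visualize() is a plotting helper that is not defined in this post; it is assumed to
# scatter-plot the 2-D embeddings h, colored by the node labels data.y
visualize(h, color=data.y)
```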

Train Model

- Model / Loss Function / Optimizer

```python
model = GCN(num_classes, input_dim, embed_dim, hidden_dim, num_layers)
loss_fn = torch.nn.CrossEntropyLoss()
opt = torch.optim.Adam(model.parameters(), lr=0.01)
```
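`torch.nn.CrossEntropyLoss` takes raw class scores (logits) and, with its default mean reduction, averages -log softmax(logits)[target] over the samples. A pure-Python sketch of that formula (hypothetical `cross_entropy` helper, using the log-sum-exp trick for numerical stability) also gives a useful reference point: with 4 classes, as in KarateClub, equal scores for every class give a loss of ln 4 ≈ 1.3863, the loss of a model that does not yet prefer any class.

```python
import math

def cross_entropy(logits, targets):
    """Mean of -log softmax(row)[target] over all rows (sketch of CrossEntropyLoss)."""
    total = 0.0
    for row, target in zip(logits, targets):
        m = max(row)  # subtract the max before exponentiating to avoid overflow
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in row))
        total += log_sum_exp - row[target]
    return total / len(targets)

print(round(cross_entropy([[0.0, 0.0, 0.0, 0.0]], [2]), 4))  # 1.3863 (= ln 4)
print(cross_entropy([[5.0, 0.0, 0.0, 0.0]], [0]))            # small: confident and correct
print(cross_entropy([[0.0, 5.0, 0.0, 0.0]], [0]))            # large: confident and wrong
```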

- Training Function

```python
def train(data):
    train_idx = data.train_mask  # boolean mask selecting the labeled training nodes
    opt.zero_grad()

    # Feed forward
    y_hat, h = model(data.x, data.edge_index)
    # the loss uses the training nodes only
    loss = loss_fn(y_hat[train_idx], data.y[train_idx])
    loss.backward()
    opt.step()

    # Prediction
    accuracy = {}

    ## train data
    y_pred = torch.argmax(y_hat[train_idx], dim=1)
    y_true = data.y[train_idx]
    accuracy['train'] = (y_pred == y_true).float().mean()

    ## whole data (all nodes, reported as 'val')
    y_pred_total = torch.argmax(y_hat, dim=1)
    y_true_total = data.y
    accuracy['val'] = (y_pred_total == y_true_total).float().mean()

    return loss, h, accuracy
```
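The two accuracy numbers differ only in which nodes are counted: the training mask selects the labeled nodes, while the 'val' figure uses every node. A pure-Python sketch of that masked accuracy (hypothetical `masked_accuracy` helper; ties in the argmax go to the first maximal score, and at least one node is assumed to be selected):

```python
def masked_accuracy(scores, labels, mask=None):
    """Fraction of selected rows whose argmax score equals the label.

    scores: per-node class scores; labels: true classes;
    mask: booleans choosing which nodes count (None = all nodes).
    """
    if mask is None:
        mask = [True] * len(labels)
    correct = total = 0
    for row, label, keep in zip(scores, labels, mask):
        if not keep:
            continue
        pred = max(range(len(row)), key=lambda k: row[k])  # argmax, first max wins
        correct += int(pred == label)
        total += 1
    return correct / total  # assumes the mask selects at least one node

scores = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
labels = [0, 1, 1]
train_mask = [True, True, False]
print(masked_accuracy(scores, labels, train_mask))  # 1.0
print(masked_accuracy(scores, labels))              # 0.6666666666666666
```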

- Train Model & Visualize

```python
import time

num_epochs = 300
print_epoch = 10

for epoch in range(num_epochs):
    loss, h, accuracy = train(data)
    # plot the embeddings every print_epoch epochs
    if epoch % print_epoch == 0:
        visualize(h, color=data.y, epoch=epoch, loss=loss, accuracy=accuracy)
        time.sleep(0.3)
```